Azure vs AWS vs GCP (Part 2: Form Recognizers)

- Form recognizers use artificial intelligence to extract data from digital or handwritten custom forms, invoices, tables and receipts.

- We compared the form recognizers solutions on Amazon, Google and Microsoft Cloud.

- Azure Form Recognizer does a fantastic job in creating a viable solution with just five sample documents. It performs end-to-end Optical Character Recognition (OCR) on handwritten as well as digital documents with an amazing accuracy score and in just three seconds.

In part 1, we compared handwriting recognition solutions on Azure, AWS and GCP. In this post, we will be comparing form recognizer capabilities.

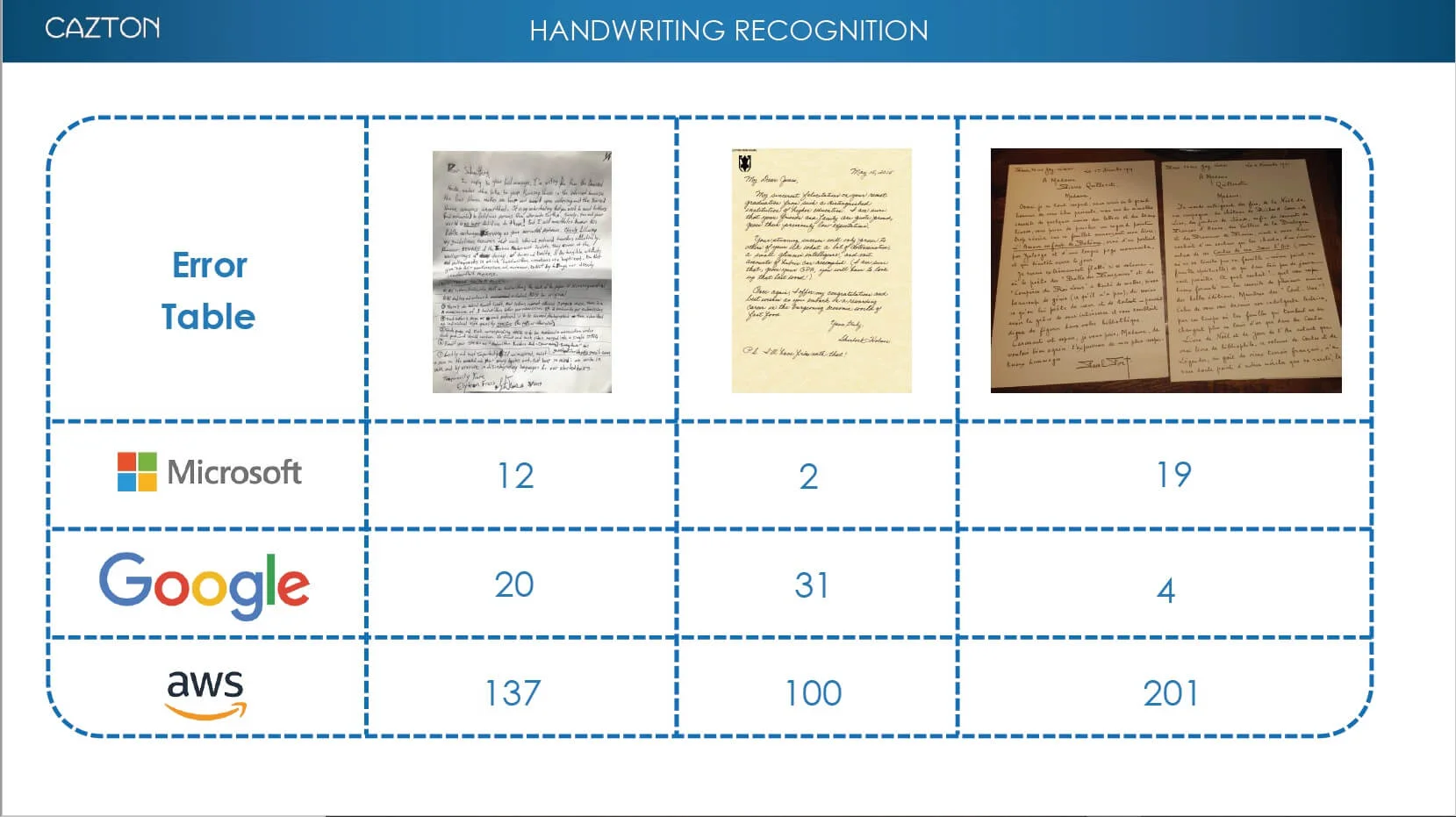

(Part 1: Handwriting Recognition)

Data is more expensive than oil now. Companies have a lot of data, but not all data is digitized. Recently, while consulting for a client, we realized that they had been doing big data analysis manually. It was shocking. They had more than a hundred year's worth of handwritten documents. Imagine, doing an analysis on that amount of data. We built a solution for them that not only recognizes the standard forms like invoices, receipts etc., but also converts the handwritten texts into digitized text. Once the data is in the right form, analysis becomes easy. To manage, analyse, make predictions and decisions using that data is a daunting task, especially if the data is not collected using the right process. To understand the high-level process, please read the following article. Even though these solutions can run on-premise, in this article we will compare the available cloud offerings for recognizing form data in the cloud.

There are lots of ways to reduce that pile of documents in your shelf or cupboard and store them digitally in a hard disk or in cloud storage. There are services available, which can read the information present in your forms, recognize and extract that information while preserving their relationship within the data. These services use artificial intelligence and machine learning algorithms to extract text from different document formats. These allow you to easily search through your information, apply data science and make predictions based on the data input and, lastly but also importantly, reduce paper use.

In this blog, we will examine:

- Custom labeling model comparison.

- Invoice recognition model comparison

- Receipt recognition model comparison.

- Application Form recognition model comparison.

- Table data extraction (from images) model comparison.

Custom labeling model comparison

Each business case requires unique modifications specific to its purpose. Behind the scenes, each model inspects the training data from the cloud, selects the right machine learning algorithm, trains and provides a custom model and evaluation metrics. This new model can now be used through the API and integrated into the applications. They all utilize bounding boxes to tag a specific area to extract the information.

What is a bounding box?

A bounding box is a rectangular box with x and y coordinates of the upper left and lower right corners and is used to find the exact location of an element on a page. Bounding boxes are typically used for multiple object recognition by forming a rectangular box around the object to be retrieved.

Why do we need bounding boxes?

In order to replicate the structure of a document, invoice, form or receipt, we need to know the exact coordinates of all the elements of interest. For example, in a W-4 employment form, an OCR program is capable of finding out all digitized text. However, a form recognizer, uses OCR to retrieve digitized texts and bounding boxes to retrieve where the particular text is located. The x and y coordinates of the bounding boxes of fields like name, social security number and address provide the necessary relative locations of these fields. This helps us reconstruct the document on a custom user interface created by us that looks exactly like the scanned form which is our input. The results are kept in a set of key-value pairs. That makes it perfect to be stored in a data store. For example, the fields above are the keys. All keys are part of the standard form. The values are either typed or handwritten.

We are using the following tools and their UI implementations (if available) on their corresponding websites:

Amazon Rekognition Custom Label: It can be used to identify objects and scenes in images that are specific to business needs. For example, it can identify logos, identify products on store shelves, identify animated characters in videos, etc. Bounding boxes here are specified using all four vertices of the rectangular box along with the width and height.

Google Cloud Auto ML Vision Object Detection: It uses custom machine learning models that can detect individual objects in each image along with its bounding box and label. Bounding boxes are specified using the top-left and bottom-right vertices.

Microsoft Form Recognition: The form recognizer uses unsupervised machine learning algorithms to identify and extract text, key/value pairs and table data from form documents. It uses pre-trained models to output structured data such as the time and date of transactions, merchant information, amount of taxes or total. This helps preserve the relationships in the original form document. It can also be applied for custom forms by using bounding boxes and labeling to train and test on different documents. Bounding boxes here are specified using all four vertices of the rectangular box.

We are testing these models based on how well they can find the bounding boxes specific to a business use case. The use case here is extracting the information from the W2 form images. Here we will be comparing custom labeling implementation of Amazon, Google and Microsoft for sample sizes of five and ten W2 forms. We will keep one form separate from the training set for testing purposes. Tags considered (in these approximate positions in W2 form):

Full Report

- Simple queries.

- Custom Label Model Comparison.

- Invoice Recognition Model Comparison.

- Receipt Recognition Model Comparison.

- Application Form Recognition Model Comparison.

- Table Data Extraction (From Images) Model Comparison.