PostgreSQL Consulting

- Lower cost without sacrificing performance: PostgreSQL offers significantly lower total cost of ownership compared to commercial databases, making it a smart choice for budget-conscious leaders who still demand enterprise-grade performance.

- Get performance that rivals commercial alternatives: We fine-tune PostgreSQL for your specific workloads using advanced features like parallel processing, smart indexing, and query optimization to deliver performance on par with or better than proprietary databases.

- Scale with your business growth: We design and optimize PostgreSQL environments to handle billions of records, high-throughput workloads, and petabyte-scale data growth, ensuring performance and stability as your business expands.

- Secure your data with enterprise-grade protection: We implement role-based access controls, encryption in transit and at rest, and compliance-ready configurations tailored for regulated industries.

- Integrate without any downtime: Our team builds PostgreSQL solutions that align with your APIs, data pipelines, and enterprise applications without interrupting operations.

- Modernize with cloud-native PostgreSQL deployments: Our cloud-native solutions across AWS, Azure, and GCP optimize PostgreSQL for scalability, cost-efficiency, and DevOps automation.

- Top clients: We help Fortune 500, large, mid-size and startup companies with Big Data and AI development, deployment (MLOps), consulting, recruiting services and hands-on training services. Our clients include Microsoft, Google, Broadcom, Thomson Reuters, Bank of America, Macquarie, Dell and more.

Businesses everywhere are redefining success by the quality, speed and trustworthiness of their data. New regulations, unpredictable markets and rising user expectations mean your data platform is more than an IT concern; it's the cornerstone of business delivery. Yet, too often, organizations are held back not by their ambition, but by the technical friction and cost of legacy data systems.

That's why modern enterprises are turning to PostgreSQL (Postgres), a database platform that does far more than just store data. When properly implemented, Postgres becomes a force-multiplier for growth, efficiency and resilience, giving your team the confidence and agility to act. However, the path to successful Postgres adoption isn't as simple as installing open-source software.

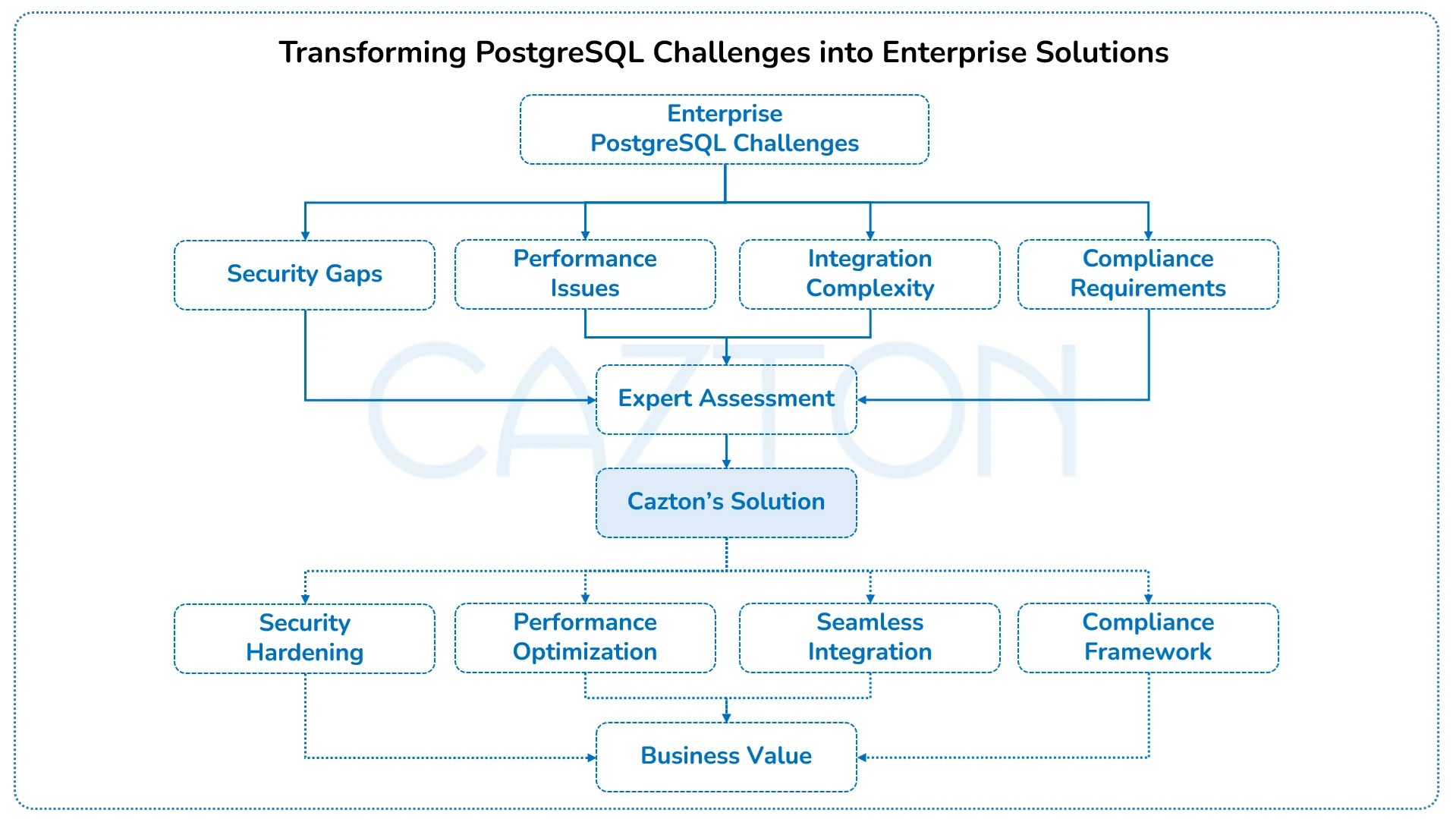

The difference between a successful PostgreSQL transformation and a costly migration failure lies in strategic implementation. Our team brings deep expertise in enterprise PostgreSQL deployments, ensuring your organization captures the full business value while avoiding the common pitfalls that derail database modernization projects.

Why Leading Companies Are Modernizing Their Data Infrastructure

Business leaders are facing a perfect storm: ballooning data volumes, rising compliance pressure, and the urgent need for real-time analytics. In this environment, the right data platform is no longer a backend decision; it’s foundational to operational excellence and long-term competitive advantage.

As enterprises transition away from proprietary systems, many are caught off guard by spiraling license fees, upgrade friction, and the complexities of cloud migration. Too often, rushed rollouts or “DIY” migrations introduce unnecessary risk, degrade trust in data systems, or stall digital progress.

PostgreSQL changes the equation. It offers proven scalability, governance, automation, and flexibility, all within an open, standards-based ecosystem. But what separates successful adopters isn’t just the technology; it’s how they approach the transition.

How we help turn PostgreSQL adoption into a strategic advantage

Our approach aligns technical execution with business priorities, balancing deep PostgreSQL expertise with organizational enablement. We make sure your migration, integration, and operations are:

- Predictable: with clear roadmaps, phased execution, and stakeholder buy-in

- Cost-effective: reducing reliance on expensive licenses and legacy infrastructure

- Future-proofed: with embedded readiness for AI, real-time analytics, and compliance demands

PostgreSQL adoption is accelerating because forward-thinking enterprises recognize its value, not just as a database, but as a platform for innovation. With the right strategy and the right partner, it becomes a powerful catalyst for sustainable growth.

A Database That Meets Enterprise Demands

PostgreSQL has evolved into a trusted, enterprise-grade database platform, one that combines the cost-efficiency of open source with the resilience, extensibility, and performance needed for real-world, large-scale operations. For business and technical leaders alike, understanding why PostgreSQL adoption is accelerating in the enterprise means looking beyond features and focusing on outcomes.

What makes PostgreSQL enterprise-ready

At its core, PostgreSQL is an object-relational database that supports both SQL and JSON queries, making it ideal for hybrid data models. But its real power comes from how its modular components work together:

- Data reliability: ACID compliance and write-ahead logging ensure transactional integrity and fault tolerance.

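- High availability: Built-in streaming and logical replication, paired with failover tooling from the PostgreSQL ecosystem, support continuous operations with minimal downtime, while Multi-Version Concurrency Control (MVCC) lets readers and writers work concurrently without blocking each other.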

- Extensibility: Open APIs and pluggable extensions support everything from time-series analysis and full-text search to machine learning and geospatial workloads.

- Performance at scale: A sophisticated query planner and concurrent processing capabilities allow PostgreSQL to support demanding transactional and analytical workloads.

These capabilities aren’t theoretical. In practice, we've helped financial firms modernize legacy systems using PostgreSQL’s JSON features for flexible data modeling, while still meeting strict compliance and SLA requirements. In manufacturing, teams now ingest millions of IoT data points per hour into PostgreSQL-powered dashboards that drive real-time operational decisions.

Where enterprises often struggle

Despite its strengths, PostgreSQL adoption at enterprise scale can falter without the right approach. Common pitfalls include:

- Performance stalls from unoptimized hardware, misconfigured memory, or missing indexes.

- Security blind spots due to default permissions and lack of auditing.

- Operational fatigue from manual failovers, backup complexity, or lack of automation.

- Replica lag affecting real-time analytics and reporting.

- Skills and process gaps in long-term maintenance, upgrades, and support models.

These are not limitations of PostgreSQL itself, but symptoms of under-resourced or misaligned implementations.
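To make the replica lag pitfall concrete, here is a minimal sketch of how lag can be measured in bytes of write-ahead log. It assumes you have already fetched two log sequence numbers (LSNs), for example with `pg_current_wal_lsn()` on the primary and `pg_last_wal_replay_lsn()` on the replica. The function names and the 16 MiB warning threshold are illustrative assumptions, not part of PostgreSQL; inside the database, `pg_wal_lsn_diff()` performs the same subtraction.

```python
def lsn_to_int(lsn: str) -> int:
    """Convert a PostgreSQL LSN such as '16/B374D848' to an absolute byte position.

    An LSN is printed as two hexadecimal numbers: the high 32 bits and the
    low 32 bits of a 64-bit position in the write-ahead log.
    """
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def replica_lag_bytes(primary_lsn: str, replica_replay_lsn: str) -> int:
    """Bytes of WAL the replica has not replayed yet (clamped at zero)."""
    return max(0, lsn_to_int(primary_lsn) - lsn_to_int(replica_replay_lsn))


def lag_status(lag_bytes: int, warn_bytes: int = 16 * 1024 * 1024) -> str:
    """Classify lag against a threshold.

    warn_bytes is a hypothetical default (16 MiB, the size of one default
    WAL segment); pick a value that matches your reporting tolerance.
    """
    return "lagging" if lag_bytes > warn_bytes else "ok"


if __name__ == "__main__":
    lag = replica_lag_bytes("1/0", "0/FFFFFFF0")
    print(lag, lag_status(lag))
```

A check like this is typically run on a schedule and fed into alerting, so that reporting teams learn about stale replicas before dashboards drift.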

How we help

| Business Needs | Legacy Platform Pitfalls | Postgres with Cazton's Guidance |
| --- | --- | --- |
| License & TCO | Per-core/instance fees, inflexible terms | Open, transparent cost model; pay for infra |
| Scaling | Expensive hardware upgrades | Commodity hardware, replicas, partitioning |
| Upgrades | Months-long windows, forced downtime | Rolling & logical upgrades, minimal impact |
| Integration | Proprietary APIs, code refactoring | Standards-based, wide interoperability |
| Observability | Costly premium tools | Built-in analytics, open ecosystem tools |
| Cloud readiness | Single-vendor lock-in | Native hybrid/multi-cloud support |

Our role is to bridge the gap between PostgreSQL’s capabilities and your business objectives. We bring proven frameworks for:

- Smooth, low-risk migrations from legacy platforms.

- Performance tuning and HA configurations tailored to your workload.

- Ongoing support, governance, and enablement so your teams are equipped for long-term success.
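As an illustration of where performance tuning usually starts, the sketch below derives first-pass memory settings from total RAM. It is a rough starting point under stated assumptions, not a tuning recommendation: the 25% figure for `shared_buffers` follows the starting value suggested in the PostgreSQL documentation for dedicated servers, while the 75% figure for `effective_cache_size` and the per-connection `work_mem` split are common rules of thumb that we assume here. The function names are hypothetical, and real values should come from workload testing.

```python
def suggest_memory_settings(total_ram_gb: float, max_connections: int = 100) -> dict:
    """Return first-pass memory settings for a dedicated PostgreSQL server.

    All ratios below are starting-point heuristics (assumptions), meant to
    be refined by measuring the actual workload.
    """
    if total_ram_gb <= 0 or max_connections <= 0:
        raise ValueError("total_ram_gb and max_connections must be positive")

    total_mb = total_ram_gb * 1024
    return {
        # Roughly a quarter of RAM for PostgreSQL's own buffer cache.
        "shared_buffers": f"{int(total_mb * 0.25)}MB",
        # Planner hint for how much data the OS cache plus buffers can hold.
        "effective_cache_size": f"{int(total_mb * 0.75)}MB",
        # work_mem applies per sort or hash operation, so keep it modest.
        "work_mem": f"{max(1, int(total_mb * 0.25 / max_connections))}MB",
    }


def to_conf_lines(settings: dict) -> list:
    """Render settings in postgresql.conf 'name = value' form."""
    return [f"{name} = {value}" for name, value in settings.items()]


if __name__ == "__main__":
    for line in to_conf_lines(suggest_memory_settings(64)):
        print(line)
```

Because `work_mem` can be allocated several times within a single query, a conservative value is usually safer than a generous one on busy systems.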

With the right expertise, PostgreSQL becomes more than just a cost-saving alternative; it becomes a resilient, future-ready foundation for innovation, analytics, and AI.

The real power of PostgreSQL emerges when these components work together in enterprise environments. For example, a major financial services company leveraged PostgreSQL's JSON capabilities to modernize their customer data platform, reducing query response times while maintaining strict ACID compliance for transaction processing.

Unlike commercial databases that require expensive add-ons for advanced features, PostgreSQL includes sophisticated capabilities like full-text search, geographic data processing, and time-series analysis as standard features. This architectural approach eliminates the need for costly third-party solutions while providing a unified platform for diverse data workloads.

Our team understands how to configure and optimize these components for your specific use cases, ensuring you realize the full potential of PostgreSQL's capabilities while maintaining the performance and reliability that your business demands.

Using AI to Improve Decisions Across Your Enterprise

AI isn't just about complex models or futuristic use cases; it’s about delivering smarter, more adaptive experiences using the data your business already generates. PostgreSQL, when extended with the right capabilities, enables teams to embed intelligence where it matters most: inside the operational flow.

A professional services firm approached us looking to improve internal search and knowledge sharing across thousands of project documents, chat transcripts, and support logs. Their challenge wasn’t a lack of data; it was surfacing the right insight at the right moment for consultants, sales teams, and support staff.

We helped them design an intelligent document discovery system where content embeddings were stored directly in PostgreSQL using pgvector. Instead of tagging every file manually or relying on rigid keyword search, the system now recommends similar documents, previous case studies, or relevant conversations based on natural language queries, all within the internal tools their teams already use.

We worked closely to shape not just the data layer, but also the search experience and the workflows that powered it. The result? Less time searching, more time applying insights and a measurable uptick in cross-team collaboration and productivity.
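The similarity ranking behind this kind of document discovery can be sketched in a few lines. The example below is a simplified, in-memory illustration of cosine distance, which is the measure that pgvector's `<=>` operator computes; it is not the client's implementation. The document names and the three-dimensional vectors are made up for readability, since real embeddings typically have hundreds or thousands of dimensions.

```python
import math


def cosine_distance(a, b):
    """Cosine distance between two vectors: 0.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def most_similar(query, documents, k=3):
    """Return the names of the k documents closest to the query embedding."""
    ranked = sorted(
        documents.items(),
        key=lambda item: cosine_distance(query, item[1]),
    )
    return [name for name, _ in ranked[:k]]


# Hypothetical toy embeddings, for illustration only.
DOCS = {
    "migration-case-study": [0.9, 0.1, 0.0],
    "support-transcript": [0.0, 1.0, 0.0],
    "pricing-proposal": [0.1, 0.0, 0.9],
}

# With pgvector, the equivalent ranking runs inside the database, e.g.
# (table and column names are assumptions):
#   SELECT name FROM documents ORDER BY embedding <=> %s LIMIT 3;

if __name__ == "__main__":
    print(most_similar([1.0, 0.0, 0.0], DOCS, k=2))
```

Keeping the ranking in SQL means the embeddings sit next to the document metadata and access controls, so results can be filtered and permissioned in the same query.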

Big Data Solutions That Actually Scale

PostgreSQL has become a dependable choice for managing high-volume, high-velocity data at scale. When designed correctly, it can serve as the core engine for streaming ingestion, large-scale processing, and near real-time analytics, making it ideal for modern big data platforms without relying on expensive proprietary tools.

In one project, a global telecom company needed to track and analyze billions of network activity logs across multiple regions. Traditional batch pipelines couldn’t keep up, and ad hoc reporting was too slow to meet operational response times. We helped them architect a centralized data platform where PostgreSQL handled live ingestion of structured logs, paired with Apache Kafka and Spark for distributed processing and enrichment.

We designed partitioned data models optimized for write-heavy operations and long-term retention. PostgreSQL was integrated with their existing Microsoft Fabric and Databricks pipelines to support downstream analytics, while maintaining fast, SQL-based access to operational metrics for engineering and customer service teams.

With our help, they moved from delayed visibility to real-time insight, reduced their reliance on disconnected tools, and created a scalable foundation for future analytics initiatives, all while keeping PostgreSQL at the center of their architecture.
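A partitioned model for write-heavy log data, like the one described above, might look like the following sketch. It uses PostgreSQL's declarative range partitioning and generates one child table per month. The `network_logs` table, its columns, and the helper function are hypothetical names chosen for illustration; the actual schema in the project is not shown here.

```python
from datetime import date

# Hypothetical parent table, partitioned by the log timestamp.
PARENT_DDL = """
CREATE TABLE network_logs (
    logged_at  timestamptz NOT NULL,
    region     text        NOT NULL,
    payload    jsonb
) PARTITION BY RANGE (logged_at);
"""


def monthly_partition_ddl(parent: str, first: date, months: int) -> list:
    """Generate CREATE TABLE statements for consecutive monthly partitions.

    Each partition covers [first day of month, first day of next month),
    matching the inclusive FROM / exclusive TO bounds PostgreSQL uses.
    """
    statements = []
    year, month = first.year, first.month
    for _ in range(months):
        start = date(year, month, 1)
        # Advance to the next month, rolling over the year in December.
        year, month = (year + 1, 1) if month == 12 else (year, month + 1)
        end = date(year, month, 1)
        statements.append(
            f"CREATE TABLE {parent}_{start:%Y_%m} PARTITION OF {parent} "
            f"FOR VALUES FROM ('{start}') TO ('{end}');"
        )
    return statements


if __name__ == "__main__":
    print(PARENT_DDL)
    for stmt in monthly_partition_ddl("network_logs", date(2024, 12, 1), 3):
        print(stmt)
```

Time-based partitions keep inserts concentrated in the newest table and let old data be retired by detaching or dropping a whole partition instead of running large deletes.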

How You Drive Real Business Outcomes with PostgreSQL

Your enterprise might be dealing with legacy systems, regional complexity, or specialized compliance needs. PostgreSQL works best when treated as a foundation for tailored solutions. We’ve helped clients migrate from mainframes, orchestrate multi-region rollouts, and integrate streaming or analytics workflows.

By designing modular architectures and rolling them out in phases, we help reduce risk, ensure compliance, and support innovation without disruption. Whether it's handling custom extensions or aligning with audit requirements across business units, we build what fits your structure, not a generic model.

Off-the-shelf data platforms often fall short when your organization faces deep operational complexity. Whether you’re integrating legacy systems, navigating industry regulations, or scaling across regions, PostgreSQL offers the flexibility to fit your real needs rather than forcing your structure into a rigid model.

We’ve worked with enterprises that needed more than just a working database. They needed architecture that supports transformation without disruption. In these environments, we apply modular design and phased rollouts, always aligned with business risk, compliance, and long-term sustainability.

Here are some of the challenges we help you solve:

- Migrating from legacy mainframes or converting proprietary data formats

- Orchestrating coordinated rollouts across multi-region or multi-cloud environments

- Integrating event-driven and analytics workflows (IoT, real-time apps, search, etc.)

- Making informed build-vs-buy decisions for custom extensions and tooling

- Maintaining audit trails across decentralized or regulated business units

With the right approach, PostgreSQL becomes more than a database; it becomes a durable, tailored foundation for innovation across your enterprise.

Build Your Competitive Data Advantage

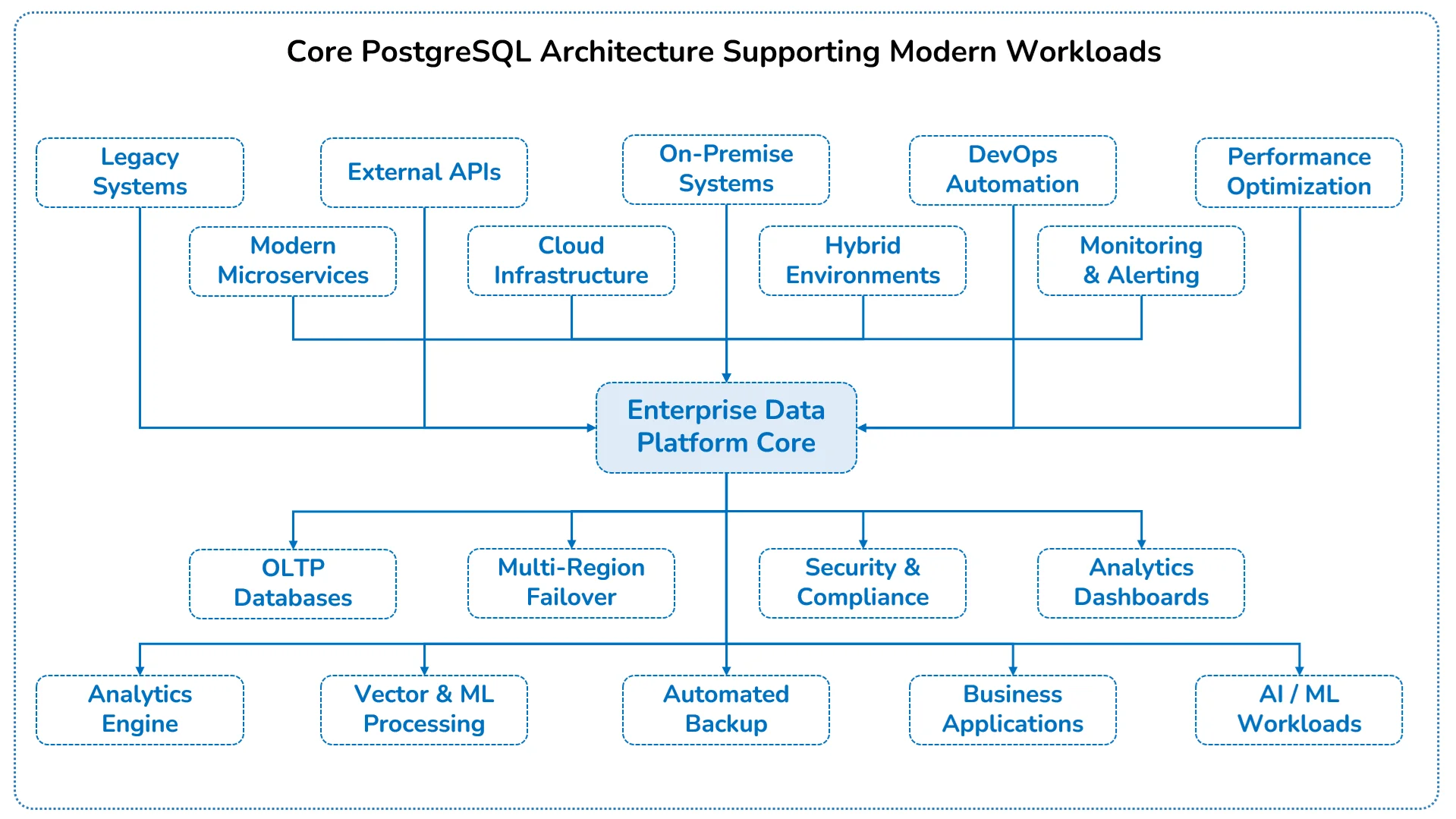

Enterprise data platforms must evolve with your business while maintaining the reliability and performance that critical operations demand. The difference between a tactical database implementation and a strategic data platform lies in the architectural decisions made during initial design and deployment.

Building for enterprise means planning for scale, security, and change. Our designs bring together proven components that create robust, adaptable data foundations:

- Resilient infrastructure architecture: Multi-region failover capabilities with automated cluster orchestration to ensure your data remains accessible even during infrastructure failures. Our implementations include sophisticated load balancing, connection pooling, and automatic recovery mechanisms that minimize downtime and maintain performance under varying load conditions.

- Comprehensive data governance: Role-based security frameworks with granular access controls create auditable trust throughout your organization. We implement field-level encryption, automated compliance reporting, and detailed access logging that satisfies regulatory requirements while enabling productive data collaboration across teams.

- Extensible platform design: Vector data processing, geospatial analytics, and machine learning enablement are built into the platform architecture from day one. This approach eliminates the need for costly add-ons or system replacements as your analytical requirements evolve and expand.

- Seamless legacy integration: Migration strategies that preserve business context while modernizing underlying infrastructure. Our approach ensures that existing applications continue operating during transition periods while new capabilities are gradually introduced without disrupting ongoing operations.

- Intelligent automation framework: Managed automation for backup procedures, failover orchestration, and lifecycle maintenance reduces operational overhead while improving reliability. Automated monitoring and alerting systems provide proactive issue detection and resolution before problems impact business operations.
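To illustrate the role-based access controls mentioned in the governance item above, here is a minimal sketch that generates least-privilege grants for a read-only role. The role and schema names are hypothetical, and the helper is an assumption for illustration rather than a complete security model; a real deployment would add login roles, auditing, and review of existing default permissions.

```python
import re

# Accept only simple lowercase identifiers so that generated SQL cannot be
# altered by unexpected characters in a role or schema name.
_IDENTIFIER = re.compile(r"[a-z_][a-z0-9_]*")


def read_only_role_ddl(role: str, schema: str) -> list:
    """Return SQL statements that create a read-only group role for a schema."""
    for name in (role, schema):
        if not _IDENTIFIER.fullmatch(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    return [
        # NOLOGIN makes this a group role; people get it via membership.
        f"CREATE ROLE {role} NOLOGIN;",
        f"GRANT USAGE ON SCHEMA {schema} TO {role};",
        f"GRANT SELECT ON ALL TABLES IN SCHEMA {schema} TO {role};",
        # Covers tables created later by the role that runs this statement.
        f"ALTER DEFAULT PRIVILEGES IN SCHEMA {schema} "
        f"GRANT SELECT ON TABLES TO {role};",
    ]


if __name__ == "__main__":
    for stmt in read_only_role_ddl("analyst_ro", "reporting"):
        print(stmt)
```

Granting privileges to group roles instead of individual users keeps access reviews short: an audit can check role membership rather than table-by-table grants.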

Our teams don't just "connect" systems; they ensure business context is preserved, performance is tunable, and every integration becomes a durable foundation for growth. This architectural philosophy creates data platforms that adapt to changing requirements while maintaining the stability and performance that enterprise operations require.

The result is a data platform that grows with your business, supports emerging technologies, and provides the foundation for future innovation while maintaining the reliability and security that enterprise operations demand.

Case Studies

Customer Service Acceleration

- Challenge: High-volume customer service organizations struggle when critical account history, order details, or resolution tools are scattered across legacy systems and manual spreadsheets. Support staff spend vital time toggling between apps or requesting records, risking slow, impersonal service and frustrated clients.

- Solution: By centralizing customer, payment, and ticket data within Postgres, we deliver a platform where every support portal instantly retrieves relevant history, escalation notes, and available actions. Strong API integration and permissioned data access ensure the right context always flows, while minimizing manual lookups and system hops.

- Business impact: Support reps handle more inquiries per hour with greater personalization, improving first-call resolution rates and customer retention. Leaders see rising CSAT scores and scale service rapidly during seasonal peaks, without degrading the experience.

- Tech stack: Postgres, React.js, Node.js, Redis, Elasticsearch, OpenSearch, Kubernetes, Hugging Face Transformers, OpenAI, LangChain, Evals, MCP, Docker, Jenkins.

Financial Workflow Orchestration

- Challenge: Finance teams often juggle complex settlements, regulatory checks, fraud investigations, and external audits. Rigid legacy tools and point-to-point integrations require manual data pulls and spreadsheet reconciliation, draining productivity and raising the risk of missed deadlines or errors.

- Solution: With Postgres acting as the canonical ledger for all transactions and compliance events, users and automation layers access a unified view. Real-time triggers, updatable views, and integrated processes mean reconciliation and reporting workflows evolve quickly with regulation or market need, never waiting on third parties.

- Business impact: Month-end and audit cycles shrink, fraud detection can run in real time, and compliant changes to data policies are reflected instantly across the stack. Costs from errors, double handling, and shadow IT are materially reduced, while responsiveness to regulation and audit is improved.

- Tech stack: Postgres, Angular, .NET, Kafka, Azure, Azure OpenAI, Azure AI, Airflow, TensorFlow, Databricks, Apache NiFi, Docker, Datadog.

Enterprise Analytics Enablement

- Challenge: Business analysts report that extracting timely intelligence is slowed by fragmented tools, siloed warehouses, and scattered spreadsheets. Manual extracts consume hours, and key decision-makers lack unified dashboards.

- Solution: We deliver Postgres-based analytics environments with curated data marts, historical and operational tables, and rapid reporting SQL endpoints. Analysts self-serve secure, governed models, reducing dependency on ad hoc IT exports, while ELT process pipelines are monitored and versioned as part of the platform.

- Business impact: Decision-makers gain access to real-time, holistic dashboards. Analysts focus on insight generation and forecasting rather than wrestling with data extracts, accelerating business response and ongoing performance measurement.

- Tech stack: Postgres, Vue.js, Power BI, Python, Spark, Snowflake, TensorFlow, PyTorch, Microsoft Fabric, Grafana, Prefect, GCP, Apache Solr.

Supply Chain Agility and Intelligence

- Challenge: Enterprises with complex supply chains often rely on disconnected systems across inventory, vendors, and logistics. This leads to delayed responses to market disruptions, overstocking, or routing inefficiencies.

- Solution: Consolidating supply chain data into a real-time planning and monitoring environment provides a single view across inventory, vendor, and transport systems. Secure APIs and event-driven updates keep all stakeholders aligned with the latest status and reduce reliance on reactive firefighting.

- Business impact: Planning becomes proactive, inventory accuracy improves, and teams respond faster to real-world conditions. Cost overruns and delivery delays are reduced, and fulfillment becomes more resilient and predictive.

- Tech stack: Postgres, PostGIS, React Native, Java Spring Boot, Kafka, Spark, Cassandra, Apache Flink, Airbyte, Prometheus, AWS, OpenAI, LangChain.

Your PostgreSQL Success Roadmap

Successful PostgreSQL adoption requires a phased approach that aligns with your business objectives while minimizing operational disruption. Our methodology ensures that each implementation phase delivers measurable value while building toward your long-term digital transformation goals.

Phase 1: Assessment and Planning

- Comprehensive analysis of current database infrastructure

- Identification of migration priorities and risk factors

- Development of detailed implementation timeline

- Establishment of success metrics and performance benchmarks

Phase 2: Pilot Implementation

- Deployment of PostgreSQL in controlled environment

- Migration of selected non-critical applications

- Performance testing and optimization

- Staff training and knowledge transfer

Phase 3: Production Deployment

- Migration of business-critical applications

- Implementation of monitoring and alerting systems

- Establishment of backup and disaster recovery procedures

- Go-live support and performance optimization

Phase 4: Optimization and Scaling

- Continuous performance monitoring and tuning

- Capacity planning and infrastructure scaling

- Advanced feature implementation

- Ongoing staff development and best practices training

Governance and capability building: Each phase includes comprehensive documentation, training programs, and knowledge transfer activities that ensure your team can effectively manage and maintain the PostgreSQL environment. We establish governance frameworks that maintain system performance while enabling your organization to adapt to changing business requirements.

Our roadmap approach ensures that PostgreSQL becomes a strategic asset that supports your organization's growth and innovation objectives while delivering immediate operational benefits.

How Cazton Can Help You With PostgreSQL

PostgreSQL represents a transformative opportunity for enterprises seeking to reduce database costs while improving performance and capabilities. However, realizing this potential requires expert guidance and proven implementation methodologies that address the unique challenges of enterprise-scale deployments.

Our team brings deep PostgreSQL expertise combined with extensive experience in enterprise transformation projects. We understand that successful database modernization goes beyond technical implementation to encompass change management, staff training, and ongoing optimization that ensures long-term success.

Here are our offerings:

- Enterprise-grade scalability planning: We architect PostgreSQL deployments that can support exponential data growth, high-concurrency workloads, and multi-terabyte to petabyte-scale operations, ensuring your environment scales seamlessly with business demand.

- Performance optimization consulting: Our experts fine-tune PostgreSQL environments for optimal speed, throughput, and efficiency, ensuring your most critical workloads always run at peak performance.

- High availability architecture design: We implement failover-ready, disaster-resilient architectures that safeguard uptime and protect your data from unexpected disruptions.

- Security hardening and compliance: We harden PostgreSQL deployments with enterprise-grade security practices aligned to your industry’s compliance needs, from encryption to access control.

- PostgreSQL migration services: We lead smooth transitions from legacy databases to PostgreSQL, ensuring zero data loss and minimal downtime throughout the migration lifecycle.

- Custom extension development: We build custom PostgreSQL extensions to meet your unique business logic, data models, or integration challenges.

- Cloud-native PostgreSQL deployments: We architect optimized PostgreSQL environments on AWS, Azure, and GCP, delivering cloud-native performance with portability and control.

- Enterprise integration solutions: We connect PostgreSQL with your existing systems, APIs, and service layers, streamlining workflows and data access across the business.

- Training and knowledge transfer programs: We equip your internal teams with hands-on training and guided knowledge transfer to ensure long-term self-sufficiency and confidence with PostgreSQL.

- Ongoing optimization and scaling: We stay engaged to evolve your PostgreSQL platform, ensuring performance, cost-efficiency, and scalability while keeping up with your business goals.

The decision to adopt PostgreSQL shouldn't be whether it's right for your organization, but rather how quickly you can capture its benefits while avoiding the pitfalls of inadequate implementation. Our methodologies and deep expertise ensure your PostgreSQL transformation delivers immediate value while positioning your organization for future growth and innovation.

Contact us today to schedule a comprehensive assessment of your current database infrastructure and learn how PostgreSQL can transform your organization's data capabilities while reducing costs and improving performance.

Cazton is composed of technical professionals with expertise gained all over the world and in all fields of the tech industry, and we put this expertise to work for you. We serve all industries, including banking, finance, legal services, life sciences & healthcare, technology, media, and the public sector. Check out some of our services:

- Artificial Intelligence

- Big Data

- Web Development

- Mobile Development

- Desktop Development

- API Development

- Database Development

- Cloud

- DevOps

- Enterprise Search

- Blockchain

- Enterprise Architecture

Cazton has expanded into a global company, serving clients not only across the United States, but also in Oslo, Norway; Stockholm, Sweden; London, England; Berlin, Germany; Frankfurt, Germany; Paris, France; Amsterdam, Netherlands; Brussels, Belgium; Rome, Italy; Sydney and Melbourne, Australia; and Quebec City, Toronto, Vancouver, Montreal, Ottawa, Calgary, Edmonton, Victoria, and Winnipeg, Canada. In the United States, we provide our consulting and training services across various cities like Austin, Dallas, Houston, New York, New Jersey, Irvine, Los Angeles, Denver, Boulder, Charlotte, Atlanta, Orlando, Miami, San Antonio, San Diego, San Francisco, San Jose, Stamford and others. Contact us today to learn more about what our experts can do for you.