AI Agent Best Practices

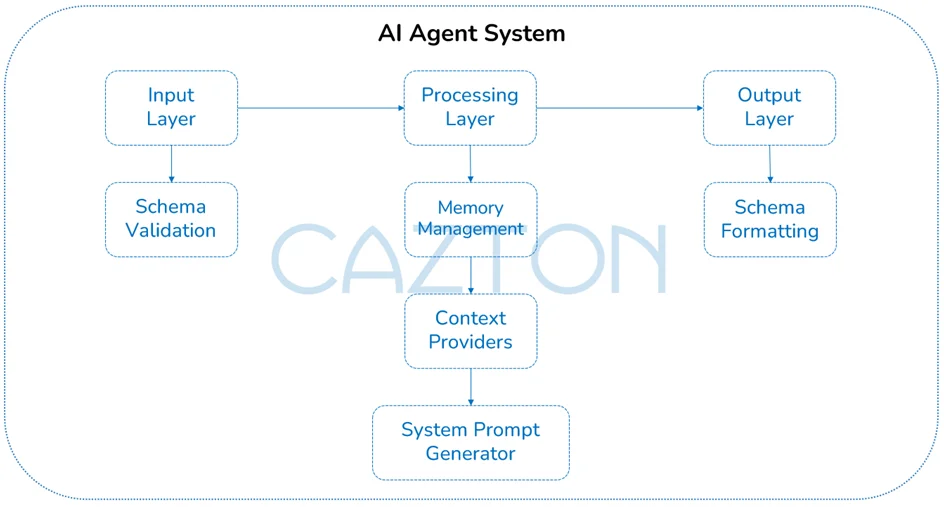

- Core Components: Learn how input/output schemas, memory management, and system prompts form the backbone of effective AI agents.

- Memory Strategies: Explore how short-, medium-, and long-term memory tiers improve personalization and conversation flow.

- Perfecting Prompts: Discover how well-crafted system prompts define an agent's role, tone, boundaries, and response behavior.

- Context Providers: Understand how modular context layers (like user preferences or real-time data) make responses smarter and more relevant.

- Team of Experts: Our team is composed of PhD and Master's-level experts in data science and machine learning, award-winning Microsoft AI MVPs, open-source contributors, and seasoned industry professionals with years of hands-on experience.

- Microsoft and Cazton: We work closely with OpenAI, the Azure OpenAI team, and many other Microsoft teams. We are fortunate to have been working on LLMs since 2020, a couple of years before ChatGPT was launched. We are grateful to have early access to critical technologies from Microsoft, Google, and open-source vendors.

- Top Clients: We help Fortune 500, large, mid-size, and startup companies with Big Data and AI development, deployment (MLOps), consulting, recruiting services, and hands-on training services. Our clients include Microsoft, Google, Broadcom, Thomson Reuters, Bank of America, Macquarie, Dell, and more.

Introduction

In today's rapidly evolving technological landscape, AI agents have emerged as powerful tools that can transform how we interact with digital systems. These intelligent software entities can understand user requests, process information, and take actions to accomplish specific goals. Whether you're building a customer service chatbot, a research assistant, or a complex decision-making system, understanding the fundamental principles and best practices of AI agent development is crucial for success.

AI agents represent the next evolution in human-computer interaction, moving beyond simple command-response patterns to create more natural, contextual, and helpful digital assistants. At their core, these agents combine natural language understanding, memory management, and specialized knowledge to deliver personalized experiences. However, creating effective AI agents requires careful planning, thoughtful design, and adherence to established patterns that ensure reliability, scalability, and user satisfaction.

This article explores the essential concepts, patterns, and practices for building high-quality AI agents. We'll break down complex ideas into digestible explanations and provide real-world examples to illustrate how these principles apply in practice. Whether you're an AI engineer, a technical executive, or a developer looking to enhance your applications with intelligent capabilities, this guide will help you navigate the challenges and opportunities in AI agent development.

Core Components of AI Agents

Input/Output Schemas

At the foundation of any well-designed AI agent is a clear definition of how information flows into and out of the system. Input/output schemas provide a structured way to define what data an agent accepts and what it returns. Think of schemas as contracts between your agent and the outside world - they ensure that communication happens in a predictable, consistent way.

Good schemas define not just the data types but also include descriptions and validation rules. For example, an input schema might specify that a user message must be a string with a maximum length, while an output schema could define that responses include both text and confidence scores. By enforcing these structures, you create more reliable agents that can handle edge cases gracefully.
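As a minimal sketch of such a contract, the Python below uses only standard-library dataclasses. The class names, the `validate_input` helper, the 2,000-character limit, and the 0-to-1 confidence range are illustrative assumptions, not part of any specific agent framework.

```python
from dataclasses import dataclass

MAX_MESSAGE_LENGTH = 2000  # hypothetical limit chosen for illustration


@dataclass(frozen=True)
class AgentInput:
    """Input contract: a non-empty user message with a bounded length."""
    message: str

    def __post_init__(self) -> None:
        if not isinstance(self.message, str):
            raise TypeError("message must be a string")
        if not self.message.strip():
            raise ValueError("message must not be empty")
        if len(self.message) > MAX_MESSAGE_LENGTH:
            raise ValueError(f"message exceeds {MAX_MESSAGE_LENGTH} characters")


@dataclass(frozen=True)
class AgentOutput:
    """Output contract: response text plus a confidence score in [0, 1]."""
    text: str
    confidence: float

    def __post_init__(self) -> None:
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")


def validate_input(raw: dict) -> AgentInput:
    """Convert an untrusted payload into a validated AgentInput."""
    if "message" not in raw:
        raise ValueError("missing required field: message")
    return AgentInput(message=raw["message"])
```

Rejecting a malformed payload at this boundary, before any model call, keeps bad input from turning into a confusing or costly model response. Libraries such as Pydantic apply the same idea with richer error reporting.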

Real-world example: A healthcare scheduling assistant uses input schemas to validate appointment requests, ensuring they include necessary information like preferred date ranges, reasons for visits, and insurance details. The output schema guarantees that responses always include available time slots, provider information, and preparation instructions - creating a consistent experience for patients regardless of their specific request patterns.

Memory Management

AI agents need memory to provide contextual, personalized experiences. Without memory, every interaction becomes isolated, forcing users to repeat information and losing the natural flow of conversation. Effective memory management involves storing relevant details from past interactions while discarding irrelevant or outdated information.

The best AI agents implement tiered memory systems that distinguish between short-term conversation context (what was just discussed), medium-term session information (what has been established in the current interaction), and long-term user preferences or history. This approach allows agents to maintain coherent conversations while also recalling important details from past interactions when relevant.
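One way to sketch the three tiers in Python is shown below. The `TieredMemory` class, its method names, and the six-turn window are hypothetical; a production system would typically persist the long-term tier in a database rather than a dictionary.

```python
from collections import deque


class TieredMemory:
    """Illustrative three-tier memory: recent turns, session facts, long-term profile."""

    def __init__(self, max_recent_turns: int = 6) -> None:
        # Short-term tier: a bounded window; the oldest turn drops off when it is full.
        self.recent_turns = deque(maxlen=max_recent_turns)
        # Medium-term tier: facts established during the current session.
        self.session_facts = {}
        # Long-term tier: preferences that should survive across sessions.
        self.long_term = {}

    def add_turn(self, role: str, text: str) -> None:
        self.recent_turns.append((role, text))

    def remember_for_session(self, key: str, value: str) -> None:
        self.session_facts[key] = value

    def remember_long_term(self, key: str, value: str) -> None:
        self.long_term[key] = value

    def end_session(self) -> None:
        """Discard short- and medium-term state; the long-term tier is kept."""
        self.recent_turns.clear()
        self.session_facts.clear()

    def build_context(self) -> str:
        """Render every tier as plain text to prepend to the model prompt."""
        lines = [f"[profile] {k}: {v}" for k, v in self.long_term.items()]
        lines += [f"[session] {k}: {v}" for k, v in self.session_facts.items()]
        lines += [f"{role}: {text}" for role, text in self.recent_turns]
        return "\n".join(lines)
```

In the financial-advisory scenario, `remember_long_term("risk_profile", "risk-averse")` would make that preference appear in `build_context()` in every later session, while the details of a single retirement conversation would be discarded by `end_session()`.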

Real-world example: A financial advisory agent remembers not just the current conversation about retirement planning but also recalls that in previous sessions, the user mentioned having two children planning for college and a risk-averse investment style. When making recommendations, it automatically factors in these previously established preferences without requiring the user to restate them, creating a more personalized and efficient experience.

System Prompts

System prompts serve as the foundational instructions that shape an AI agent's behavior, knowledge, and personality. These carefully crafted directives act as the "operating manual" for the agent, establishing its purpose, constraints, and approach to handling user interactions. A well-designed system prompt strikes a balance between being specific enough to guide the agent effectively and flexible enough to handle diverse user needs.

The most effective system prompts include clear definitions of the agent's role, guidelines for tone and style, boundaries of expertise, and instructions for handling edge cases. They often incorporate examples of ideal responses and explicitly state what the agent should avoid. By investing time in refining system prompts, developers can often improve agent performance substantially without changing the underlying model.
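Treating the prompt as structured data, rather than one hand-edited string, makes each of those ingredients explicit and easy to review. The sketch below is one assumed way to organize it; the `SystemPromptSpec` fields and section labels are hypothetical, and no model API is called.

```python
from dataclasses import dataclass, field


@dataclass
class SystemPromptSpec:
    """Hypothetical structure for the parts of a system prompt."""
    role: str
    tone: str
    in_scope: list[str]
    avoid: list[str]
    escalation_rule: str
    examples: list[tuple[str, str]] = field(default_factory=list)  # (user, ideal reply)


def build_system_prompt(spec: SystemPromptSpec) -> str:
    """Assemble the spec into a single prompt string with labeled sections."""
    sections = [
        f"Role: {spec.role}",
        f"Tone: {spec.tone}",
        "You may help with: " + "; ".join(spec.in_scope),
        "Never: " + "; ".join(spec.avoid),
        f"Edge cases: {spec.escalation_rule}",
    ]
    for user_msg, ideal_reply in spec.examples:
        sections.append(f"Example\nUser: {user_msg}\nAssistant: {ideal_reply}")
    return "\n\n".join(sections)


spec = SystemPromptSpec(
    role="Customer service agent for an e-commerce store",
    tone="Friendly, concise, and empathetic",
    in_scope=["returns", "damaged items", "shipping delays"],
    avoid=["promising delivery dates you cannot guarantee"],
    escalation_rule="Hand complex or unresolved issues to a human representative.",
    examples=[("My order arrived broken!", "I'm sorry it arrived damaged. Let's fix that.")],
)
print(build_system_prompt(spec))
```

Because the spec is data, a change such as adding a new forbidden behavior can be reviewed, versioned, and tested like any other code change.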

Real-world example: A customer service agent for an e-commerce platform uses a system prompt that defines its primary goal as resolving customer issues efficiently while maintaining a friendly, empathetic tone. The prompt includes specific instructions for handling common scenarios like returns, damaged items, and shipping delays, along with examples of how to acknowledge customer frustration appropriately. It also explicitly directs the agent to avoid making promises about delivery dates it cannot guarantee and to escalate complex issues to human representatives when necessary.

Context Providers

Context providers are specialized components that supply AI agents with relevant information beyond what the user explicitly states. These modules help bridge the gap between what users say and what they need by injecting additional context that makes responses more helpful, accurate, and personalized.

By implementing modular context providers, you can enhance your agent with different types of information - from user preferences and interaction history to real-time data from external systems. The key is designing these providers to deliver just the right amount of context at the right time, avoiding information overload while ensuring the agent has what it needs to respond effectively.
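A minimal Python sketch of modular providers with a size budget follows. The `ContextProvider` record, the priority ordering, and the 500-character budget are assumptions for illustration; real providers would query profile stores, location services, or event feeds instead of returning fixed strings.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ContextProvider:
    """A named source of extra context; `fetch` returns None when it has nothing relevant."""
    name: str
    priority: int  # lower values are considered first
    fetch: Callable[[str, str], Optional[str]]  # (user_id, query) -> snippet


def gather_context(providers: list[ContextProvider], user_id: str, query: str,
                   max_chars: int = 500) -> str:
    """Collect snippets in priority order, skipping any that would exceed the budget.

    The budget counts snippet characters only, not the newline separators.
    """
    parts = []
    used = 0
    for provider in sorted(providers, key=lambda p: p.priority):
        snippet = provider.fetch(user_id, query)
        if not snippet:
            continue
        line = f"[{provider.name}] {snippet}"
        if used + len(line) > max_chars:
            continue  # too large for the remaining budget; a smaller snippet may still fit
        parts.append(line)
        used += len(line)
    return "\n".join(parts)


providers = [
    ContextProvider("seasonal", 3, lambda user, q: "Fall foliage peaks this month"),
    ContextProvider("location", 1, lambda user, q: "Denver, America/Denver time zone"),
    ContextProvider("preferences", 2, lambda user, q: "Prefers outdoor activities, boutique hotels"),
]
print(gather_context(providers, "user-42", "Ideas for a weekend getaway?"))
```

Keeping each provider independent means a new source, such as a calendar provider, can be added or removed without touching the others, and the budget keeps the combined context from crowding out the user's actual question.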

Real-world example: A travel planning assistant uses multiple context providers to enhance its recommendations. When a user asks about weekend getaways, the location provider adds the user's current city and time zone, the preference provider adds information about their past travel choices (preferring outdoor activities and boutique hotels), and the seasonal provider adds details about current weather patterns and local events. With this enriched context, the agent can suggest highly relevant destinations without requiring the user to specify all these parameters explicitly.

Advanced Patterns for AI Agents

Custom Schemas for Specialized Interactions

While basic text-in and text-out interactions work for simple use cases, more complex applications benefit from custom input and output schemas. These specialized data structures allow agents to handle structured information more effectively, enabling richer interactions and more precise control over agent behavior.

Custom schemas can define specific fields for different types of requests, including validation rules to ensure data quality, and structure outputs in ways that make them easier to process programmatically. This approach creates a bridge between the flexibility of natural language and the precision of structured data, giving you the best of both worlds.
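On the output side, one common approach is to ask the model for JSON and validate it before the application uses it. The sketch below assumes a hypothetical four-field recipe schema; the field names and rules are illustrative, and validation libraries such as Pydantic or a JSON Schema validator can replace the hand-written checks.

```python
import json
from dataclasses import dataclass

# Hypothetical output schema: field name -> required JSON type.
RECIPE_FIELDS = {"title": str, "prep_minutes": int, "steps": list, "equipment": list}


@dataclass(frozen=True)
class RecipeResult:
    title: str
    prep_minutes: int
    steps: tuple[str, ...]
    equipment: tuple[str, ...]


def parse_recipe_output(raw: str) -> RecipeResult:
    """Parse a model's JSON reply and enforce the recipe output schema."""
    data = json.loads(raw)  # raises json.JSONDecodeError, a ValueError subclass
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for name, expected in RECIPE_FIELDS.items():
        if not isinstance(data.get(name), expected):
            raise ValueError(f"field {name!r} is missing or not {expected.__name__}")
    if data["prep_minutes"] <= 0:
        raise ValueError("prep_minutes must be positive")
    return RecipeResult(
        title=data["title"],
        prep_minutes=data["prep_minutes"],
        steps=tuple(str(step) for step in data["steps"]),
        equipment=tuple(str(item) for item in data["equipment"]),
    )
```

Once replies are parsed into typed records, filtering for a cooking app is ordinary code, for example `[r for r in results if r.prep_minutes <= 30]`. When validation fails, one option is to re-prompt the model with the error message.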

Real-world example: A recipe recommendation agent uses custom input schemas that allow users to specify dietary restrictions, available ingredients, cooking time constraints, and skill level - all as structured fields rather than trying to extract this information from free text. Its output schema returns not just the recipe text, but also structured data about nutrition information, preparation steps, required equipment, and possible substitutions. This structured approach makes it easy to filter and display results in a cooking app while still allowing natural conversation about recipe preferences.

Streaming Responses

Users expect responsive interactions, but complex queries can take time to process. Streaming responses solve this problem by sending partial results as they become available rather than waiting for the complete answer. This approach creates a more engaging experience and gives users immediate feedback that their request is being processed.

Implementing streaming effectively requires careful attention to how information is chunked and presented. The goal is to provide meaningful, coherent pieces that build toward the complete response rather than disjointed fragments. This often means designing your agent to structure its thinking in a way that produces useful intermediate outputs.
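One simple way to avoid disjointed fragments is to buffer raw tokens and release them only at sentence boundaries. The generator below is a sketch under that assumption; the token list stands in for whatever stream a model client produces, and the boundary rule is deliberately naive.

```python
from typing import Iterable, Iterator

# Naive boundary rule: a chunk ends at sentence punctuation or a line break.
SENTENCE_ENDINGS = (".", "!", "?", "\n")


def stream_in_sentences(tokens: Iterable[str]) -> Iterator[str]:
    """Regroup raw model tokens into whole sentences before showing them to the user."""
    buffer = ""
    for token in tokens:
        buffer += token
        if buffer.rstrip(" ").endswith(SENTENCE_ENDINGS):
            chunk = buffer.strip()
            if chunk:  # skip chunks that were only whitespace
                yield chunk
            buffer = ""
    if buffer.strip():
        yield buffer.strip()  # flush any trailing text when the stream ends


# Stand-in for a model's token stream.
tokens = ["The study", " found", " two effects.", " Limitations", " include", " sample size", "."]
for chunk in stream_in_sentences(tokens):
    print(chunk)
```

This prints the two sentences on separate lines. The punctuation rule can split too early on decimals or abbreviations such as "e.g."; section-level chunking, as in the research-assistant example, would instead key on headings or explicit markers the agent is instructed to emit.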

Real-world example: A research assistant agent that helps analyze scientific papers streams its response as it works through complex questions. When asked to summarize the key findings and limitations of a study, it first acknowledges the request, then streams sections of its analysis as they're completed - first the study overview, then key findings, then limitations, and finally implications. This approach keeps the user engaged during what might otherwise be a long wait and allows them to begin processing the information before the complete analysis is finished.

Error Handling and Graceful Degradation

Even the best AI agents will encounter situations they can't handle perfectly. What separates excellent agents from mediocre ones is how they manage these edge cases. Effective error handling means recognizing when the agent is operating outside its capabilities and responding appropriately rather than providing misleading or unhelpful information.

Graceful degradation involves designing your agent to maintain usefulness even when ideal conditions aren't met. This might mean providing partial answers when complete ones aren't possible, clearly communicating limitations, or falling back to simpler but more reliable approaches when sophisticated ones fail.
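The fallback idea can be sketched as a chain of handlers tried from most to least sophisticated, ending in an honest statement of the limitation. The handler functions and message text here are hypothetical; in practice each handler might wrap a retrieval pipeline, a simpler lookup, or a cached answer.

```python
from typing import Callable, Optional, Sequence

# A handler returns an answer, or None when it cannot answer confidently.
Handler = Callable[[str], Optional[str]]

LIMITATION_MESSAGE = (
    "I can't answer that reliably. I can explain the related background "
    "instead, or connect you with a person."
)


def answer_with_fallbacks(question: str, handlers: Sequence[Handler]) -> str:
    """Try each handler in order; return the first usable answer or admit the limit."""
    for handler in handlers:
        try:
            result = handler(question)
        except Exception:
            # A failing component should not crash the agent; log it in production.
            continue
        if result:
            return result
    return LIMITATION_MESSAGE


def case_law_search(question: str) -> Optional[str]:
    raise TimeoutError("search backend unavailable")  # simulated outage


def precedent_summary(question: str) -> Optional[str]:
    return "Here are the precedents that led up to this issue."


print(answer_with_fallbacks("Latest appellate ruling?", [case_law_search, precedent_summary]))
```

The important design choice is the final step: when nothing succeeds, the agent states its limitation instead of letting a model improvise an answer.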

Real-world example: A legal research assistant agent is designed to help lawyers find relevant case law. When asked about a very recent ruling that isn't in its knowledge base, instead of attempting to fabricate details, it clearly acknowledges the limitation: "I don't have information about rulings after my last update in March 2023. However, I can help you understand the precedents leading up to this case and the relevant legal principles that would likely apply. Would that be helpful while you seek a specific ruling elsewhere?" This honest approach maintains trust and still provides value despite limitations.

Implementation Strategies

Modular Design

Building AI agents as collections of specialized components rather than monolithic systems creates more flexible, maintainable solutions. Modular design allows you to update individual parts of your agent without disrupting the rest of the system, test components in isolation, and reuse successful elements across different agents.

The key to effective modularity is defining clean interfaces between components and ensuring each module has a single, well-defined responsibility. This approach might seem more complex initially but pays dividends as your agent evolves and grows in capability.
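As a rough sketch of clean interfaces, each module below owns one step and communicates only through a shared `Turn` record. The classes, the keyword rule, and the in-memory knowledge base are hypothetical stand-ins for real classifiers, retrievers, and response generators.

```python
from dataclasses import dataclass, field
from typing import Protocol


@dataclass
class Turn:
    """State passed between modules; each module fills in only the fields it owns."""
    user_message: str
    category: str = "unknown"
    documents: list[str] = field(default_factory=list)
    reply: str = ""


class Module(Protocol):
    def run(self, turn: Turn) -> Turn: ...


class KeywordClassifier:
    """Issue classification only."""
    def run(self, turn: Turn) -> Turn:
        text = turn.user_message.lower()
        turn.category = "billing" if "invoice" in text or "charge" in text else "general"
        return turn


class StaticRetriever:
    """Knowledge retrieval only; swap for a search-backed version without touching others."""
    def __init__(self, knowledge: dict[str, list[str]]) -> None:
        self.knowledge = knowledge

    def run(self, turn: Turn) -> Turn:
        turn.documents = list(self.knowledge.get(turn.category, []))
        return turn


class TemplateResponder:
    """Response generation only."""
    def run(self, turn: Turn) -> Turn:
        source = turn.documents[0] if turn.documents else "no matching article"
        turn.reply = f"({turn.category}) Based on: {source}"
        return turn


def run_pipeline(modules: list[Module], message: str) -> Turn:
    turn = Turn(user_message=message)
    for module in modules:
        turn = module.run(turn)
    return turn
```

Replacing `StaticRetriever` with a retriever that has the same `run` method would leave the classifier and responder untouched, which is the maintenance benefit described in this section.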

Real-world example: A customer support agent is built with separate modules for authentication, issue classification, knowledge retrieval, response generation, and sentiment analysis. When the company updates its product lineup, only the knowledge retrieval module needs updating. When they want to improve how the agent handles frustrated customers, they can focus exclusively on enhancing the sentiment analysis module. This targeted approach makes maintenance more efficient and allows specialized team members to work on components that match their expertise.

Testing and Evaluation

Thorough testing is essential for creating reliable AI agents, but traditional software testing approaches aren't always sufficient. Effective agent testing combines automated checks with human evaluation to ensure both technical correctness and subjective quality.

A comprehensive testing strategy includes unit tests for individual components, integration tests for component interactions, scenario-based tests for common user journeys, adversarial testing to identify vulnerabilities, and regular human evaluation of real conversations. This multi-layered approach helps catch issues that might be missed by any single testing method.
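Only the automated layer can be expressed in code; human review and red-teaming remain manual. The harness below sketches scenario tests plus a regulatory-style check, assuming a hypothetical rule that replies must never promise investment returns. The regular expressions are illustrative and far from complete.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Illustrative compliance rules: never promise returns or call an investment risk-free.
FORBIDDEN_PATTERNS = [
    re.compile(r"\bguarantee[ds]?\b.*\breturns?\b", re.IGNORECASE),
    re.compile(r"\brisk[- ]free\b", re.IGNORECASE),
]


@dataclass
class Scenario:
    prompt: str
    must_mention: tuple[str, ...]  # phrases a good answer should contain


def compliance_violations(reply: str) -> list[str]:
    """Return the patterns a reply matches; an empty list means it passed."""
    return [p.pattern for p in FORBIDDEN_PATTERNS if p.search(reply)]


def run_scenarios(agent: Callable[[str], str], scenarios: list[Scenario]) -> list[str]:
    """Run every scenario through the agent and collect readable failure messages."""
    failures = []
    for s in scenarios:
        reply = agent(s.prompt)
        for pattern in compliance_violations(reply):
            failures.append(f"{s.prompt!r}: matched forbidden pattern {pattern}")
        for phrase in s.must_mention:
            if phrase.lower() not in reply.lower():
                failures.append(f"{s.prompt!r}: missing {phrase!r}")
    return failures
```

In a CI job, a check such as `assert not run_scenarios(agent, scenarios)` could block a release whenever a prompt or model change reintroduces a known failure.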

Real-world example: Before launching a financial advisory agent, a company implements a testing program that includes automated checks for regulatory compliance (ensuring the agent never makes promises about investment returns), scenario testing with hundreds of common financial questions, and a panel of financial advisors who review sample conversations to evaluate the quality of advice. They also implement a "red team" approach where staff deliberately try to trick the agent into giving inappropriate recommendations. This comprehensive testing identifies several edge cases where the agent needs improvement before it can be safely deployed.

Continuous Improvement

The most successful AI agents evolve over time based on real-world usage and feedback. Establishing systems for monitoring performance, collecting user feedback, and iteratively improving your agent helps it become more valuable and effective over time.

Effective continuous improvement requires both quantitative metrics (like task completion rates and user satisfaction scores) and qualitative insights from conversation reviews. The goal is to identify patterns in where and how your agent succeeds or struggles, then make targeted improvements to address the most impactful issues first.
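The quantitative half of that loop can start as simply as grouping ratings by topic. The sketch below assumes each feedback record has already been tagged with a topic and a 1-to-5 rating; the `min_count` and `threshold` defaults are arbitrary illustrative values.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Feedback:
    topic: str   # assumed to be tagged during review
    rating: int  # assumed 1-5 satisfaction score


def weakest_topics(feedback: list[Feedback], min_count: int = 3,
                   threshold: float = 3.0) -> list[tuple[str, float, int]]:
    """Return (topic, average rating, count) for topics below threshold, worst first."""
    totals = defaultdict(lambda: [0, 0])  # topic -> [rating sum, count]
    for item in feedback:
        totals[item.topic][0] += item.rating
        totals[item.topic][1] += 1
    flagged = [
        (topic, rating_sum / count, count)
        for topic, (rating_sum, count) in totals.items()
        if count >= min_count and rating_sum / count < threshold  # skip sparse topics
    ]
    return sorted(flagged, key=lambda row: row[1])
```

Ranking by average rating, while ignoring topics with too few ratings to judge, points the team at the weakest area first, as in the parental-leave example.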

Real-world example: An HR assistant agent that helps employees navigate benefits and company policies includes a simple feedback mechanism after each interaction. The development team reviews this feedback weekly, categorizing issues and identifying trends. They notice that questions about parental leave policies frequently receive low satisfaction ratings, so they enhance the agent's knowledge and responses in this specific area. After deployment, they observe a 40% improvement in satisfaction for parental leave queries while maintaining performance in other areas. This targeted approach allows them to make meaningful improvements with limited resources.

Additional Considerations

As AI agents mature and transition from experimentation to enterprise deployment, the technical foundations covered earlier must be complemented by broader strategic concerns. These considerations ensure your agents remain trustworthy, adaptable, and effective at scale - especially in environments with real users, regulatory constraints, and evolving business requirements.

Ethical Guidelines and Safeguards

Beyond technical implementation, responsible AI agent development requires establishing clear ethical boundaries and safeguards. Consider implementing explicit content policies, bias detection and mitigation strategies, and mechanisms for handling sensitive user information. Creating an ethics review process for your agent's capabilities and responses can help identify potential issues before they affect users.
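As one concrete safeguard for sensitive user information, text can be redacted before it is logged or stored. The patterns below are simplified, US-centric assumptions that will miss many formats; dedicated PII-detection tools are preferable in production.

```python
import re

# Simplified illustrative patterns; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace matched sensitive values with labeled placeholders."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Email jane.doe@example.com or call 555-123-4567. SSN 123-45-6789."))
```

Redacting at the point of logging means transcripts can still be reviewed for quality, as described in the continuous-improvement section, without exposing the raw values.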

Multimodal Interactions

While text-based interactions form the foundation of many AI agents, expanding to support multiple modalities - like voice, images, or video - can create more accessible and natural experiences in many contexts. Consider how your agent architecture might evolve to support these additional channels while maintaining consistent behavior and capabilities across modalities.

Handoff Mechanisms

Even the most capable AI agents will encounter situations beyond their abilities. Designing thoughtful handoff mechanisms to human operators or specialized systems ensures users aren't left frustrated when they reach the limits of automated assistance. Clear indicators of when handoffs are occurring and smooth transitions between automated and human support create better overall experiences.
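Handoff triggers are often simple, explicit rules that run alongside the model. The sketch below assumes three hypothetical signals: an explicit request for a person, repeated unresolved turns, and a confidence score produced by your own pipeline. The phrases and thresholds are placeholders to tune.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical phrases that signal a request for a person.
HUMAN_REQUEST_PHRASES = ("talk to a human", "speak to a human", "real person", "representative")


@dataclass
class TurnSignals:
    user_message: str
    model_confidence: float  # assumed 0-1 score from your own pipeline
    failed_attempts: int     # consecutive turns where the issue stayed unresolved


def handoff_reason(s: TurnSignals, min_confidence: float = 0.5,
                   max_failures: int = 2) -> Optional[str]:
    """Return why the conversation should go to a human, or None to stay automated."""
    text = s.user_message.lower()
    if any(phrase in text for phrase in HUMAN_REQUEST_PHRASES):
        return "user asked for a person"
    if s.failed_attempts >= max_failures:
        return "issue unresolved after repeated attempts"
    if s.model_confidence < min_confidence:
        return "low confidence in the answer"
    return None
```

Returning a reason rather than a bare flag lets the agent tell the user what is happening and pass that reason, along with the transcript, to the human operator who picks up the conversation.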

Personalization vs. Privacy

Finding the right balance between personalized experiences and user privacy presents ongoing challenges. Consider implementing tiered personalization that allows users to control how much information the agent remembers about them, transparent data policies that clearly explain how user information is used, and mechanisms for users to review and delete their interaction history.
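Tiered, user-controlled personalization can be sketched as a consent level that gates what is persisted, plus export and delete operations. The `Personalization` levels and `UserMemoryStore` class are hypothetical, and defaulting to storing nothing is a design choice rather than a requirement.

```python
from enum import IntEnum


class Personalization(IntEnum):
    OFF = 0      # nothing persisted
    SESSION = 1  # session data stays in process memory and is never written here
    FULL = 2     # long-term preferences persisted across sessions


class UserMemoryStore:
    """Hypothetical store that persists interactions only with the user's consent."""

    def __init__(self) -> None:
        self._levels = {}   # user_id -> Personalization
        self._history = {}  # user_id -> list of stored interactions

    def set_level(self, user_id: str, level: Personalization) -> None:
        self._levels[user_id] = level
        if level < Personalization.FULL:
            self._history.pop(user_id, None)  # opting down also purges stored history

    def record(self, user_id: str, interaction: str) -> bool:
        """Persist an interaction only at FULL; returns True if it was stored."""
        if self._levels.get(user_id, Personalization.OFF) < Personalization.FULL:
            return False
        self._history.setdefault(user_id, []).append(interaction)
        return True

    def export_history(self, user_id: str) -> list:
        """Give users a copy of everything stored about them."""
        return list(self._history.get(user_id, []))

    def delete_history(self, user_id: str) -> None:
        """Honor a deletion request."""
        self._history.pop(user_id, None)
```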

Deployment and Scaling Considerations

As your agent moves from prototype to production, consider how it will scale to handle increasing load, maintain performance during usage spikes, and evolve without disrupting existing users. Implementing proper monitoring, staged rollouts of new capabilities, and fallback mechanisms for when components fail can help ensure reliability as your agent grows in usage and complexity.
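A staged rollout is commonly implemented by hashing each user into a stable bucket, so a new capability can go to a small percentage of users first and then widen. The sketch below assumes string user IDs and a hypothetical feature name. It uses `hashlib` rather than Python's built-in `hash()`, because `hash()` on strings is randomized per process by default and would reshuffle users on every restart.

```python
import hashlib


def rollout_bucket(user_id: str, feature: str) -> int:
    """Map a user to a stable bucket in 0-99 for one feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def is_enabled(user_id: str, feature: str, rollout_percent: int) -> bool:
    """True for roughly rollout_percent% of users; stable for a given user and feature."""
    return rollout_bucket(user_id, feature) < rollout_percent


# Hypothetical feature name; start small and widen while monitoring stays healthy.
print(is_enabled("user-42", "new-retriever", rollout_percent=10))
```

Because the bucket does not depend on the percentage, every user enabled at 10% stays enabled at 25%, so widening a rollout never takes a feature away from someone who already has it. Setting the percentage back to 0 acts as a simple fallback if monitoring shows a regression.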

Conclusion

AI agents represent a powerful paradigm for creating more intelligent, helpful, and natural digital experiences. By implementing the best practices outlined in this article - from thoughtful schema design and memory management to effective system prompts and modular architecture - you can create agents that truly enhance how users interact with your systems and services.

The most successful AI agents balance technical sophistication with human-centered design, ensuring that advanced capabilities serve real user needs rather than existing for their own sake. They're built with clear purposes, appropriate constraints, and mechanisms for continuous improvement based on real-world usage.

As AI technology continues to evolve, the fundamental principles of good agent design remain consistent: clarity of purpose, thoughtful structure, appropriate context, and a focus on delivering genuine value to users. By keeping these principles at the center of your development process - and layering in ethical design, multimodal thinking, privacy controls, and resilient infrastructure - you’ll create agents that are not just functional, but future-ready.

Made it this far? See an agent in action. Book a private demo and let it speak for itself.

Cazton is composed of technical professionals with expertise gained all over the world and in all fields of the tech industry, and we put this expertise to work for you. We serve all industries, including banking, finance, legal services, life sciences & healthcare, technology, media, and the public sector. Check out some of our services:

- Artificial Intelligence

- Big Data

- Web Development

- Mobile Development

- Desktop Development

- API Development

- Database Development

- Cloud

- DevOps

- Enterprise Search

- Blockchain

- Enterprise Architecture

Cazton has expanded into a global company, serving clients not only across the United States but also in Oslo, Norway; Stockholm, Sweden; London, England; Berlin, Germany; Frankfurt, Germany; Paris, France; Amsterdam, Netherlands; Brussels, Belgium; Rome, Italy; Sydney and Melbourne, Australia; and Quebec City, Toronto, Vancouver, Montreal, Ottawa, Calgary, Edmonton, Victoria, and Winnipeg, Canada. In the United States, we provide our consulting and training services across cities such as Austin, Dallas, Houston, New York, New Jersey, Irvine, Los Angeles, Denver, Boulder, Charlotte, Atlanta, Orlando, Miami, San Antonio, San Diego, San Francisco, San Jose, Stamford, and others. Contact us today to learn more about what our experts can do for you.