How Costly Is Cheap Code?

At times, software development can be easy, but making your software perform and scale can be a daunting task. Performance and scalability are not just about writing code that works, but about writing code that fully utilizes the capabilities of the underlying infrastructure. Powerful hardware is always an advantage, but it takes experience and skill to write code that makes full use of it and performs and scales well. Have you ever encountered SQL Server code with more than 50 joins in a single query? Or code that retrieves millions of records from SQL Server to the web server just to return one record to the UI? These are the kinds of scenarios we deal with on a daily basis. We have helped scale applications that handle more than a billion hits per day and improved their performance dramatically.
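The second scenario, pulling millions of rows to the web server just to return a single record, is easy to reproduce. The sketch below uses Python's built-in sqlite3 module as a stand-in for SQL Server (an assumption for illustration only); the orders table and both functions are hypothetical. Both functions return the same row, but the first ships the whole table to the application, while the second lets the database filter on the primary key so only one row crosses the wire.

```python
import sqlite3


def setup_db(n_rows: int) -> sqlite3.Connection:
    """Create an in-memory table of hypothetical orders."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
    conn.executemany(
        "INSERT INTO orders (id, customer, total) VALUES (?, ?, ?)",
        ((i, f"customer-{i % 1000}", i * 1.5) for i in range(n_rows)),
    )
    return conn


def find_order_wasteful(conn: sqlite3.Connection, order_id: int):
    # Anti-pattern: fetch every row into the application, then filter in memory.
    rows = conn.execute("SELECT id, customer, total FROM orders").fetchall()
    for row in rows:
        if row[0] == order_id:
            return row
    return None


def find_order_efficient(conn: sqlite3.Connection, order_id: int):
    # Push the filter into the database; the primary-key index finds the row directly.
    return conn.execute(
        "SELECT id, customer, total FROM orders WHERE id = ?", (order_id,)
    ).fetchone()


if __name__ == "__main__":
    conn = setup_db(100_000)
    assert find_order_wasteful(conn, 4242) == find_order_efficient(conn, 4242)
    print(find_order_efficient(conn, 4242))  # (4242, 'customer-242', 6363.0)
```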

Why is scalability so important?

Amazon has reported that every additional 100 milliseconds of response time can cost it 1% in sales, and according to Google, an extra half-second of latency caused traffic to drop by a fifth. It is easy to see how important it is for these businesses to keep their systems fast and available at all times. We have seen companies suffer heavy losses after losing a large part of their customer base to performance and scalability issues. It is important to identify failures, bottlenecks and downtime, and to address them before they start affecting your business. With the advent of cheaper internet plans and devices, modern solutions must handle millions of hits with zero downtime. The solution needs to consider the hardware and software platform, application design, caching strategies, asynchronous processing, deployment, monitoring mechanisms, and the list goes on.

There are a lot of variables to be considered while designing a scalable solution:

- Number of concurrent users or sessions the entire system can handle.

- Number of concurrent transactions or operations the entire system can handle.

- Optimal utilization of hardware resources.

- Time taken per transaction or operation.

- Availability of the application at any given time.

- Impact of the downtime on the users.
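Several of these variables are linked. Little's Law states that the average number of requests in flight equals throughput multiplied by the time each request spends in the system (L = λW), so reducing the time per transaction raises the number of transactions a fixed amount of concurrency can serve. An availability target likewise translates directly into a yearly downtime budget. A minimal sketch with hypothetical numbers; the function names are illustrative:

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years ignored


def required_concurrency(throughput_per_sec: float, latency_sec: float) -> float:
    """Little's Law: average requests in flight (L) = arrival rate (lambda) * time in system (W)."""
    return throughput_per_sec * latency_sec


def max_throughput(concurrency: float, latency_sec: float) -> float:
    """Little's Law rearranged: with a fixed concurrency limit, throughput = L / W."""
    return concurrency / latency_sec


def downtime_budget_minutes_per_year(availability_pct: float) -> float:
    """Minutes of downtime per year allowed by an availability target such as 99.9%."""
    return (1 - availability_pct / 100) * HOURS_PER_YEAR * 60


if __name__ == "__main__":
    # Hypothetical target: 1,000 requests/s at 200 ms each keeps about 200 requests in flight.
    print(required_concurrency(1_000, 0.2))
    # Cutting latency to 50 ms lets the same 200 in-flight slots serve about 4,000 requests/s.
    print(max_throughput(200, 0.05))
    for target in (99.0, 99.9, 99.99):
        print(f"{target}% -> {downtime_budget_minutes_per_year(target):,.1f} min/year")
```

For example, 99.9% availability leaves roughly 525.6 minutes (about 8.8 hours) of downtime per year, while 99.99% leaves about 52.6 minutes.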

A large system cannot rely on a single machine for its operations. A multi-node system is essential, and in a multi-node system network partitions are inevitable and have to be tolerated. When a partition occurs, system designers must decide whether to provide consistency or availability to the user. In the words of Gilbert and Lynch:

No set of failures less than total network failure is allowed to cause the system to respond incorrectly.
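To make the trade-off concrete, here is a deliberately simplified toy model in Python, not a real replication protocol: a replica that is cut off from its peers either refuses to answer (consistency first) or answers from its local, possibly stale, copy (availability first). The Replica class and Unavailable exception are hypothetical names.

```python
from dataclasses import dataclass, field


class Unavailable(Exception):
    """Raised when a consistency-first (CP) replica refuses to answer during a partition."""


@dataclass
class Replica:
    mode: str  # "CP" = prefer consistency, "AP" = prefer availability
    data: dict = field(default_factory=dict)
    partitioned: bool = False  # True while this replica cannot reach its peers

    def read(self, key):
        if self.partitioned and self.mode == "CP":
            # It cannot confirm it holds the latest write, so it refuses instead of risking a stale answer.
            raise Unavailable(f"cannot guarantee the latest value of {key!r} during a partition")
        # An AP replica (or any replica without a partition) answers from local state,
        # which may be stale while it is cut off from its peers.
        return self.data.get(key)


if __name__ == "__main__":
    for mode in ("CP", "AP"):
        replica = Replica(mode, {"balance": 100}, partitioned=True)
        try:
            print(mode, "->", replica.read("balance"))
        except Unavailable as err:
            print(mode, "-> error:", err)
```

Real systems make this choice with quorums, leases or conflict resolution rather than a flag, but the user-visible outcome during a partition is the same: an error or a possibly stale answer.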

Dealing with a distributed computing environment has its benefits. However, we should be wary of the fallacies of distributed computing, which are false assumptions such as the following:

- Reliable network.

- Zero latency.

- Infinite bandwidth.

- Network is secure.

- No change to network topology.

- Single point of administration.

- Zero transport costs.

- Homogeneous network.

- Location is irrelevant.
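The first two fallacies, a reliable network and zero latency, have a direct consequence for code: every remote call can fail or be slow. A common defensive pattern is to retry transient failures a bounded number of times with exponential backoff and jitter. The sketch below assumes the hypothetical remote call raises ConnectionError or TimeoutError on transient failures; call_with_retries is an illustrative name, not a library API.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    operation: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying transient network failures with exponential backoff.

    Assumes `operation` raises ConnectionError or TimeoutError for transient
    failures; any other exception propagates immediately.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return operation()
        except (ConnectionError, TimeoutError):
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller handle the failure
            # Full jitter: wait a random time up to 0.1 s, 0.2 s, 0.4 s, ... to avoid retry storms.
            sleep(random.uniform(0, base_delay * 2**attempt))
    raise RuntimeError("unreachable")


if __name__ == "__main__":
    calls = 0

    def flaky_service() -> str:
        # Hypothetical remote call that fails twice before succeeding.
        global calls
        calls += 1
        if calls < 3:
            raise ConnectionError("simulated dropped connection")
        return "ok"

    print(call_with_retries(flaky_service), "after", calls, "attempts")
```

Retries are only safe for idempotent operations; for writes, pair them with an idempotency key or deduplication on the server. The sketch does not enforce timeouts itself, it assumes the underlying client raises TimeoutError, and the injectable sleep parameter keeps it easy to test.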

Success Story

One success story we would like to share is that of a client that was using Python and Django to build a web application. The company was using Web APIs written in .NET Core, Java and Python in a Microservices environment. They had integrated Elasticsearch and related libraries to implement search functionality in their application. The indexed data had grown to terabytes spread across a multi-cluster environment. Some API queries had to pass through multiple internal services and were taking as long as 23 seconds, making the application almost unusable. Python, being an interpreted and dynamically typed language, makes code easy to develop. However, its execution model generally makes it slower than compiled languages. Python libraries can increase the speed of development, but Python may not outperform a compiled language like C# or Java in most cases. C# and Java are both compiled, statically typed languages. They generally outperform Python and offer stronger compile-time checks.

To start with, our experts performed a full audit of the client's existing system and produced a report that explained the strengths and weaknesses of the system and the overall infrastructure. They found that the search engine's indexing task was not very efficient. The client was also using JSON to transfer data between internal services. JSON is great for the Web because it maps naturally to JavaScript, but it is highly inefficient for this kind of high-volume, service-to-service traffic. Additionally, our experts found that some Microservices patterns had been implemented incorrectly. The benefit of a Microservices model is being able to rewrite a small piece of functionality and deploy it independently, so that the rest of the system is hardly impacted. We improved the performance of data transfer between microservices and used the async and multithreading capabilities of .NET Core to do the same task 36.5x faster, which helped the client cut the cost of that operation by approximately 90%. Other performance improvements included better data retrieval and transfer algorithms, different data formats, a more efficient schema, and solutions tailored for efficiency and performance. Furthermore, we ported the code to the cloud for faster access to resources, gaining another 20%. Overall, we improved web performance by 821x, reducing the execution time from 23 seconds to just 28 milliseconds.
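To illustrate why a text format like JSON can be costly for high-volume internal traffic, the sketch below encodes the same hypothetical search-result records as JSON and as a fixed-width binary layout using Python's standard struct module. The post does not say which format replaced JSON for this client; struct is only a stand-in for schema-based binary formats such as Protocol Buffers or MessagePack, and the record layout is an assumption.

```python
import json
import struct

# Hypothetical record: (doc_id: unsigned 32-bit int, score: 64-bit float, shard: unsigned 16-bit int).
# "<" selects little-endian byte order with no padding, so each record is 4 + 8 + 2 = 14 bytes.
RECORD = struct.Struct("<IdH")


def encode_json(records):
    """Self-describing text: every record repeats its field names and writes numbers as characters."""
    payload = [{"doc_id": d, "score": s, "shard": h} for d, s, h in records]
    return json.dumps(payload).encode("utf-8")


def encode_binary(records):
    """Fixed-width binary: both services must agree on the layout in advance."""
    return b"".join(RECORD.pack(d, s, h) for d, s, h in records)


def decode_binary(payload):
    """Unpack consecutive fixed-size records back into (doc_id, score, shard) tuples."""
    return [RECORD.unpack_from(payload, offset) for offset in range(0, len(payload), RECORD.size)]


if __name__ == "__main__":
    records = [(i, i / 7, i % 16) for i in range(10_000)]
    as_json, as_binary = encode_json(records), encode_binary(records)
    assert decode_binary(as_binary) == records  # lossless round trip
    print(f"JSON: {len(as_json):,} bytes, binary: {len(as_binary):,} bytes")
```

JSON repeats every field name in every record and writes numbers as text, which costs bytes on the wire and CPU time to parse. A binary layout avoids both, at the price of requiring both services to share the schema.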

One of the biggest pitfalls in software development is jumping to conclusions based on a small piece of information. For example, after reading the previous paragraph, you may lean towards using .NET Core instead of Python and expect similar results. However, that is just a small piece of the puzzle. The more important aspect is the right use of asynchronous and multithreading capabilities. While it may seem straightforward, using them together requires a combination of art and science that only comes with experience. Our team works with clients worldwide, so we have been fortunate to deal with many challenges and problems that have given us the necessary experience. We know which approaches are the most fruitful and how to tackle even the trickiest problems. Every company has different priorities, constraints, goals and deadlines, so solutions are not one size fits all. While some teams can throw any amount of money, people and hardware at a problem, other teams (sometimes in the same company) may not have that luxury. We have learned to pivot with agility, and this is of immense value to our clients and especially to their budgets.
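The client's services used .NET Core; the Python sketch below only mirrors the general pattern of combining the two techniques. Independent calls to internal services are awaited concurrently with asyncio.gather instead of one after another, and a blocking step is moved to a worker thread with asyncio.to_thread (Python 3.9+) so it does not stall the event loop. The service names, latencies and blocking_transform function are hypothetical.

```python
import asyncio
import time


async def call_service(name: str, latency: float) -> str:
    """Hypothetical internal service call; asyncio.sleep stands in for network I/O."""
    await asyncio.sleep(latency)
    return f"{name}: ok"


def blocking_transform(results: list[str]) -> str:
    """Hypothetical blocking step (e.g. a synchronous library call) that must not stall the event loop."""
    time.sleep(0.05)
    return " | ".join(results)


async def handle_request() -> str:
    # Fan out to independent services concurrently instead of awaiting them one by one.
    results = await asyncio.gather(
        call_service("search", 0.2),
        call_service("profile", 0.2),
        call_service("pricing", 0.2),
    )
    # Run the blocking step on a worker thread so the event loop can keep serving other requests.
    return await asyncio.to_thread(blocking_transform, list(results))


if __name__ == "__main__":
    start = time.perf_counter()
    print(asyncio.run(handle_request()))
    # Roughly 0.25 s here; awaiting the three calls sequentially would take about 0.65 s.
    print(f"elapsed: {time.perf_counter() - start:.2f}s")
```

For CPU-bound work in Python, threads are limited by the global interpreter lock, so a process pool is the usual choice there.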

Continue reading to learn more about the increased performance metrics.

Hardware Details:

- Dedicated server details:

  - Windows Server, 64-bit operating system, 8-core processor, 32 GB RAM.

- Azure Cloud VM details:

  - Standard_B8ms: 8 vCPUs, 32 GB RAM, with additional managed standard HDD storage.

Metrics: Calculations have been provided at the bottom of this blog post.

Based on the above metrics, the client now saves approximately 90% of the expenses for the same operation. The client not only saved money, but also benefited from improved reliability, fault tolerance, performance and execution speed. Our experts helped the client cut expenses further by implementing an automation pipeline and containerizing both the operation and the web application.

Over the years, Cazton has expanded into a global company, serving clients across the United States, Canada, Norway, Sweden, England, Germany, France, Netherlands, Belgium, Italy, Australia and India. Our experts have been providing successful, high-quality solutions for a wide spectrum of projects, including artificial intelligence, big data, Web, Mobile, Desktop, API and Database development, Cloud, DevOps, Enterprise Search, Blockchain and Enterprise Architecture. We have served all industries, including banking & investment services, finance and mortgage, fintech, legal services, life sciences & healthcare, construction, chemical industries, hotel industries, transport & tourism, commerce, technology, media, e-commerce, telecom, airlines, logistics and supply chain. Besides the many requests we receive for software development, training and recruiting, clients have preferred us over our competitors for solving their software performance and scalability problems. Contact us today to learn more about what our experts can do for you.

Calculations:

- Gains on web performance improvement from 23 seconds to 28 milliseconds. There was an 821x improvement.

- Metrics:

- 1 second = 1000 ms.

- Previous time taken per operation = 23 seconds * 1000 ms = 23000 ms.

- New time taken per operation = 28 ms.

- Reduction in time = 99.87% ((old - new) / old * 100 = (23000 - 28) / 23000 * 100).

- Performance increase = 820.42% ((old - new) / new * 100 = (23000 - 28) / 28 * 100).

- Time savings = 821.42x ((old / new) = (23000 / 28)).

- Metrics:

- Gains from Elasticsearch improvements. There was a 36.5x improvement.

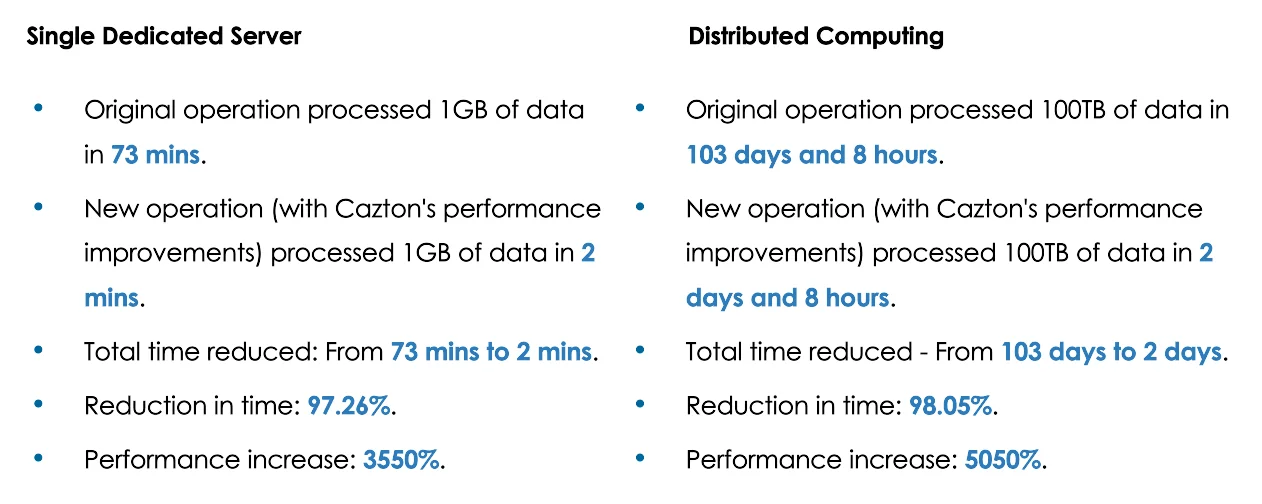

- Single dedicated server:

- Original operation - Took 73 mins to process 1 GB of data.

- New operation - Took 2 mins and 7 seconds to process 1 GB of data.

- Total time reduced - From 73 mins to 2 mins.

- Reduction in time - 97.26% ((old - new) / old * 100 = (73 - 2) / 73 * 100).

- Performance increase - 3550% ((old - new) / new * 100 = (73 - 2) / 2 * 100).

- Time savings - 36.5x.

- Distributed computing:

- Total data = 100 terabytes (100 TB) = 102,400 GB.

- Single Azure VM configuration - Standard_B8ms - 8 vCPUs - 32 GB RAM with additional managed standard HDD space.

- Each VM processes - 100 TB / 50 VMs => 102,400 GB / 50 VMs => 2,048 GB data.

- Extending our previous estimations:

- Original operation - 103 days 8 hours (1 GB in 73 mins, so 2,048 GB => (2048 * 73) mins => 1,49,504 mins => 2492 hours ~ 103 days 8 hours).

- New operation - 2 days 8 hours (1 GB in 2 mins, so 2,048 GB => (2048 * 2) mins => 4,096 mins => 68 hours ~ 2 days 8 hours).
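The calculations above can be reproduced with a short Python script, which makes the rounding easy to check. This is a sketch: the helper names metrics and days_hours are hypothetical, and it assumes, as the distributed estimate does, that the 50 VMs work in parallel so the per-VM time is also the overall time. Using the unrounded 2 mins 7 seconds per GB gives about 34.5x rather than 36.5x.

```python
def metrics(old: float, new: float) -> dict[str, float]:
    """The three figures used above: % time reduction, % performance increase and speedup."""
    return {
        "reduction_pct": (old - new) / old * 100,
        "increase_pct": (old - new) / new * 100,
        "speedup_x": old / new,
    }


def days_hours(total_minutes: float) -> tuple[int, int]:
    """Convert minutes to (days, hours), rounded to the nearest hour."""
    return divmod(round(total_minutes / 60), 24)


# Web request: 23 s before, 28 ms after (both expressed in milliseconds).
web = metrics(23 * 1000, 28)

# Indexing 1 GB: 73 min before; 2 min 7 s after, rounded to 2 min in the figures above.
es_rounded = metrics(73, 2)
es_exact = metrics(73 * 60, 2 * 60 + 7)  # in seconds, without rounding

# 100 TB (102,400 GB) split evenly across 50 VMs, assumed to run in parallel.
GB_PER_VM = (100 * 1024) // 50
original_per_vm = days_hours(73 * GB_PER_VM)
new_per_vm = days_hours(2 * GB_PER_VM)

if __name__ == "__main__":
    # web: 99.88% less time, 82,042.86% increase, 821.43x
    print(f"web: {web['reduction_pct']:.2f}% less time, "
          f"{web['increase_pct']:,.2f}% increase, {web['speedup_x']:.2f}x")
    # indexing (rounded): 36.5x, exact: 34.5x
    print(f"indexing (rounded): {es_rounded['speedup_x']:.1f}x, exact: {es_exact['speedup_x']:.1f}x")
    # per VM: 103 days 20 hours before, 2 days 20 hours after
    print(f"per VM: {original_per_vm[0]} days {original_per_vm[1]} hours before, "
          f"{new_per_vm[0]} days {new_per_vm[1]} hours after")
```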

