Semantic Kernel Consulting

- AI Orchestration: Semantic Kernel seamlessly connects LLMs, structured data, APIs, and automation tools, enabling AI-driven workflows that are scalable, context-aware, and efficient for enterprise applications.

- Enterprise-Grade Scalability: Built for high-performance AI, Semantic Kernel supports asynchronous execution, intelligent task management, and vector indexing, ensuring fast and efficient AI responses at scale.

- Multi-Agent Collaboration: AI agents in Semantic Kernel can autonomously plan, execute, and optimize workflows, enabling businesses to streamline decision-making and automate complex tasks with minimal manual effort.

- Semantic Kernel vs LangChain: Discover how Semantic Kernel and LangChain compare in AI orchestration, model compatibility, workflow automation, and enterprise integration to find the right fit for your needs.

- End-to-End Implementation: From strategy to execution, we handle the entire AI journey, including data preparation, model training, deployment, and optimization. We build full-scale enterprise applications and custom AI agents on all major cloud platforms, ensuring seamless integration into your existing systems.

- Fully Customized, Fine-Tuned AI Solutions: We design fully tailored AI solutions that are fine-tuned to your business domain, optimizing decision-making, automating workflows, and boosting customer engagement based on your unique needs and use cases.

- Team of experts: Our team is composed of PhD and Master's-level experts in AI, data science and machine learning, award-winning Microsoft AI MVPs, open-source contributors, and seasoned industry professionals with years of hands-on experience.

- Microsoft and Cazton: We work closely with OpenAI, Azure OpenAI and many other Microsoft teams. We are fortunate to have been working on LLMs since 2020, a couple of years before ChatGPT was launched. We are grateful to have early access to critical technologies from Microsoft, Google and open-source vendors.

- Top clients: We help Fortune 500, large, mid-size and startup companies with Big Data and AI development, deployment (MLOps), consulting, recruiting services and hands-on training services. Our clients include Microsoft, Google, Broadcom, Thomson Reuters, Bank of America, Macquarie, Dell and more.

Artificial Intelligence (AI) is transforming industries by enabling automation, enhancing search capabilities, and optimizing decision-making through advanced language models. However, effectively integrating AI into enterprise systems requires more than just model performance: it demands robust orchestration, efficient data retrieval, and seamless interaction with business applications. Semantic Kernel addresses these challenges by providing a flexible framework that connects large language models with external APIs, databases, and workflow automation tools, enabling businesses to build scalable, AI-driven solutions.

What is Semantic Kernel?

Semantic Kernel is an open-source framework designed to bridge the gap between AI models and enterprise applications, allowing businesses to build intelligent, context-aware solutions that go beyond simple text generation. Unlike traditional AI implementations, which often operate in isolated environments, Semantic Kernel enables seamless interaction between LLMs, structured data, external APIs, and workflow automation tools.

At its core, Semantic Kernel provides:

- AI Orchestration: Enables seamless interaction between LLMs, structured databases, APIs, and automation tools, enhancing enterprise AI capabilities.

- Memory & Context Retention: Supports long-term memory, vector-based retrieval, and knowledge persistence, ensuring accurate and context-aware AI responses.

- Multi-Agent Planning & Execution: AI agents can collaborate on complex tasks, breaking them down into structured workflows.

- Flexible Integration: Connects with Microsoft Graph, OpenAI, Azure AI, and other enterprise platforms, ensuring smooth AI adoption across cloud and on-prem environments.

- Enterprise-Grade Scalability: Designed for high-performance AI applications with asynchronous execution, vector indexing, and fine-tuned retrieval mechanisms.

By combining retrieval-augmented generation (RAG), tool invocation (function calling), and enterprise data integration, Semantic Kernel enables businesses to scale AI capabilities efficiently while ensuring seamless integration with existing infrastructure. Whether enhancing search accuracy, automating document intelligence, or optimizing AI-powered workflows, Semantic Kernel provides the foundation for building scalable, production-ready AI solutions.
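To make the RAG step concrete, here is a minimal, framework-free Python sketch of the retrieve-then-augment pattern. It is not Semantic Kernel code: the bag-of-words `embed` function is a hypothetical stand-in for a real embedding model, and `retrieve` and `build_grounded_prompt` are illustrative names. Documents are ranked by cosine similarity to the query, and the top matches are injected into the prompt so the model answers from supplied context.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(word.strip(".,?!").lower() for word in text.split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank documents by similarity to the query and keep the top k."""
    query_vec = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:k]


def build_grounded_prompt(query: str, docs: list[str]) -> str:
    """Augment the user's question with retrieved context before calling an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Refunds are processed within five business days.",
    "The office is closed on public holidays.",
    "Refunds require the original order number.",
]
print(build_grounded_prompt("How are refunds processed?", docs))
```

In a production system the embeddings would come from an embedding service and live in a vector database, but the ranking and prompt-augmentation logic follows the same shape.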

Semantic Kernel Components

Semantic Kernel is designed as a modular AI orchestration framework, enabling seamless integration between large language models (LLMs), external data sources, and enterprise applications. It is built around several key components, each responsible for a critical aspect of AI execution, memory management, planning, connectivity, and extensibility. These components work together to enhance automation, decision-making, and contextual awareness, making AI systems more adaptive and efficient.

- Kernel: The Kernel serves as the central execution engine that manages plugin execution, function calls, and workflow orchestration in AI-powered applications.

- Plugin Registration: Enables seamless integration of both AI-powered and native functions, allowing the Kernel to invoke them dynamically.

- AI Function Execution: Processes user queries, external data sources, and automated tasks, triggering the right AI function at the right time.

- Workflow Orchestration: Manages the execution flow of AI tasks, ensuring structured, optimized processing across different modules.

- Logging, Telemetry & Debugging: Provides real-time insights and monitoring tools, enabling developers to track and fine-tune AI processes efficiently.

- AI Service Connectors: AI Service Connectors provide an abstraction layer that allows Semantic Kernel to communicate with multiple AI providers through a common interface. These connectors enable:

- Chat Completion & Text Generation: Supports natural language interactions, AI-powered assistants, and text-based decision-making.

- Text-to-Image & Image-to-Text: Integrates multimodal AI capabilities, allowing text and image processing in real-time applications.

- Text-to-Audio & Audio-to-Text: Enables speech recognition, automated transcription, and voice-based interactions.

- Embedding Generation: Converts text into vector embeddings, powering semantic search, recommendations, and Retrieval-Augmented Generation (RAG).

By default, when an AI service is registered, Chat Completion or Text Generation services are automatically used for AI-related operations. Other services (e.g., embeddings, multimodal processing) require explicit configuration.
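The following sketch illustrates, in plain Python, how a kernel can act as a registry for plugins and AI services. `MiniKernel` and its methods are hypothetical and deliberately simplified; they are not the Semantic Kernel API. Native functions are registered under a plugin name and invoked dynamically by name, and prompts are routed to the chat completion service by default, while other service types such as embeddings must be registered and requested explicitly.

```python
from typing import Any, Callable


class MiniKernel:
    """Hypothetical, simplified kernel used only to illustrate the idea.
    This is NOT the Semantic Kernel API."""

    def __init__(self) -> None:
        self._plugins: dict[str, dict[str, Callable[..., Any]]] = {}
        self._services: dict[str, Callable[[str], Any]] = {}

    def add_service(self, kind: str, service: Callable[[str], Any]) -> None:
        """Register an AI service under a type such as 'chat_completion'."""
        self._services[kind] = service

    def get_service(self, kind: str) -> Callable[[str], Any]:
        if kind not in self._services:
            raise LookupError(f"No '{kind}' service registered")
        return self._services[kind]

    def add_plugin(self, name: str, functions: dict[str, Callable[..., Any]]) -> None:
        """Register a named plugin: a container of callable functions."""
        self._plugins[name] = functions

    def invoke(self, plugin: str, function: str, **kwargs: Any) -> Any:
        """Look up a registered function by plugin and name, then call it."""
        return self._plugins[plugin][function](**kwargs)

    def invoke_prompt(self, prompt: str) -> Any:
        """Prompts are sent to the chat completion service by default."""
        return self.get_service("chat_completion")(prompt)


kernel = MiniKernel()
# Fake chat service; a real AI service connector would call an LLM here.
kernel.add_service("chat_completion", lambda prompt: f"[model reply to: {prompt}]")
kernel.add_plugin("math", {"add": lambda a, b: a + b})

print(kernel.invoke("math", "add", a=2, b=3))  # 5
print(kernel.invoke_prompt("Summarize Q3 sales"))
```

Calling `kernel.get_service("embedding")` in this sketch raises `LookupError`, mirroring the point above: only the chat completion path is used implicitly.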

- Memories: Semantic Kernel includes a persistent memory system that enhances context retention and response accuracy. It supports multiple storage mechanisms:

- Key-Value Pairs: Stores structured data for quick retrieval, allowing AI models to reference previously stored information efficiently.

- Local Storage: Retains memory across user sessions, ensuring continuity in long-running conversations or workflows.

- Semantic In-Memory Search: Uses vector embeddings for intelligent information retrieval, improving context awareness and precision in search results.

- Vector Store (Memory) Connectors: Semantic Kernel's Vector Store Connectors provide a standardized interface to interact with vector databases, storing and retrieving high-dimensional embeddings for AI-powered search and retrieval.

- Memory Persistence: Allows AI models to retain and recall contextual knowledge, improving response quality and accuracy.

- Intelligent Search: Exposes vector search as a plugin, enabling AI-driven retrieval in chat interactions, RAG pipelines, and decision-making applications.

- Supported Vector Stores: Connects with Azure Cosmos DB, Azure AI Search, MongoDB Atlas with Vector Search, Postgres with pgvector, Pinecone, Qdrant, FAISS, Redis, Elasticsearch and more.

- Unlike AI Service Connectors, vector stores are not automatically used by the Kernel. Developers must explicitly register and configure them within their applications.

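- Planners: Planners dynamically generate and execute task sequences based on user input, optimizing workflow automation and decision-making. These planners come from earlier Semantic Kernel releases; current versions deprecate them in favor of automatic function calling, where the model itself selects which plugin functions to invoke. Different planners offer varying levels of complexity: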

- SequentialPlanner: Creates multi-step execution plans, ensuring logical sequencing of AI-driven tasks in structured workflows.

- BasicPlanner: Provides a lightweight, low-overhead method for simple task chaining and rule-based automation.

- ActionPlanner: Selects and executes the single function that best matches a request, making it ideal for direct API calls and command-response scenarios.

- StepwisePlanner: Dynamically refines execution plans step by step, adjusting actions based on real-time feedback and evolving business requirements.

- Functions & Plugins: Plugins are named function containers that extend AI capabilities, allowing the Kernel to execute custom business logic, API calls, and AI-driven operations.

- Semantic Functions: Uses LLM-powered prompts to execute complex AI-driven tasks via natural language commands.

- Native Functions: Supports direct execution of C#, Python, and Java-based operations, enabling deep integration with enterprise logic.

- OpenAPI Specifications: Wraps external APIs as callable functions, allowing AI-driven systems to trigger external workflows dynamically.

- Text Search for RAG Workflows: Enables AI models to query search engines and vector stores for relevant text, enhancing contextual retrieval and response accuracy.

- By advertising functions to the AI, plugins allow autonomous decision-making and real-time data integration, making applications more adaptive and context aware.

- Prompt Templates: Prompt Templates allow developers to create dynamic, structured AI interactions by mixing contextual instructions, user inputs, and function outputs to guide AI behavior.

- Contextual Instructions: Provide AI with guidance on task execution, improving consistency and response quality.

- User Inputs: Allow AI models to process real-time data, adapting dynamically to user needs.

- Function Outputs: Integrate plugin-based logic, ensuring AI responses are grounded in business rules and external knowledge.

- Prompt Templates support two main use cases:

- Chat Completion Starter: Defines structured prompts for AI-generated responses.

- Plugin Functions: Allows AI models to dynamically invoke prompt-based logic during execution.

- Templates can also be nested, allowing complex multi-step workflows where AI models call other prompts, retrieve data, and refine responses dynamically.

- Filters:

- Filters allow developers to intercept and modify AI execution at critical stages, improving security, compliance, and accuracy. They run before and after specific events in the execution flow, such as function invocation and prompt rendering.

- Function invocation filters wrap a function call: before it executes, they can validate input and enforce API security; after it executes, they can inspect or transform the result. Prompt render filters run around prompt rendering, letting developers adjust context, track compliance, or modify the rendered prompt before it is sent to the AI service. Because prompt templates are converted into Kernel Functions before execution, a prompt-based function passes through both kinds of filter: the function invocation filter wraps the whole call, and the prompt render filter runs inside it when the template is rendered.

- By leveraging filters, enterprises can enforce security policies, improve AI accuracy, and maintain compliance across automated workflows.

- Key Use Cases:

- Security & Compliance: Ensures AI interactions adhere to strict regulatory policies, preventing sensitive data exposure.

- Result Validation: Allows business logic enforcement, ensuring AI outputs meet predefined quality and accuracy standards.

- Logging & Auditing: Provides traceability for AI-powered processes, enabling developers to track execution flows in real time.
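Filters behave like middleware. The sketch below is a simplified Python model of a function invocation filter pipeline, assuming each filter receives an invocation context and a `next_` callback, a shape similar to the context-and-next pattern Semantic Kernel filters use; the names `InvocationContext`, `run_with_filters`, and the individual filters are hypothetical. The audit filter records activity, the validation filter can short-circuit before the function runs, and the redaction filter rewrites the result after it runs.

```python
import re
from dataclasses import dataclass, field
from typing import Any, Callable

SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


@dataclass
class InvocationContext:
    """Hypothetical context passed through the filter chain."""
    function_name: str
    arguments: dict[str, Any]
    result: Any = None
    log: list[str] = field(default_factory=list)


Next = Callable[[InvocationContext], None]
Filter = Callable[[InvocationContext, Next], None]


def audit_filter(ctx: InvocationContext, next_: Next) -> None:
    ctx.log.append(f"before {ctx.function_name}")
    next_(ctx)
    ctx.log.append(f"after {ctx.function_name}")


def input_validation_filter(ctx: InvocationContext, next_: Next) -> None:
    # Before execution: block inputs that look like US Social Security numbers.
    if any(SSN_PATTERN.search(str(v)) for v in ctx.arguments.values()):
        ctx.result = "Blocked: input contains sensitive data."
        return  # short-circuit: the function is never called
    next_(ctx)


def redaction_filter(ctx: InvocationContext, next_: Next) -> None:
    # After execution: mask SSN-like values in the function's output.
    next_(ctx)
    ctx.result = SSN_PATTERN.sub("***-**-****", str(ctx.result))


def run_with_filters(ctx: InvocationContext, function: Callable[..., Any],
                     filters: list[Filter]) -> Any:
    """Chain filters so the first one in the list wraps all the others."""
    def call_function(c: InvocationContext) -> None:
        c.result = function(**c.arguments)

    def wrap(flt: Filter, nxt: Next) -> Next:
        return lambda c: flt(c, nxt)

    chain: Next = call_function
    for flt in reversed(filters):
        chain = wrap(flt, chain)
    chain(ctx)
    return ctx.result


def lookup_customer(name: str) -> str:
    return f"{name}: SSN 123-45-6789"  # fake record for illustration


filters = [audit_filter, input_validation_filter, redaction_filter]
ok = InvocationContext("lookup_customer", {"name": "Ada"})
print(run_with_filters(ok, lookup_customer, filters))  # Ada: SSN ***-**-****
blocked = InvocationContext("lookup_customer", {"name": "123-45-6789"})
print(run_with_filters(blocked, lookup_customer, filters))
```

Because the first filter in the list wraps all the others, ordering matters: here auditing sees every call, including ones the validation filter blocks.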

Semantic Kernel and Agent Framework

Semantic Kernel’s Agent Framework provides a structured way to build AI agents that operate autonomously or semi-autonomously, making real-time decisions, retrieving data, and interacting with users, APIs, and enterprise systems. Unlike traditional AI models that function as static tools, AI agents dynamically respond to changing conditions, optimizing workflows and reducing manual intervention.
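At the heart of an agent is a loop in which the model either requests a function call or returns a final answer. This Python sketch shows that loop with a scripted stand-in for a function-calling LLM; `scripted_model`, `get_order_status`, and the message format are assumptions for illustration, not Semantic Kernel APIs. Each tool result is appended to the conversation so the model can use it on the next turn, and a step limit guards against runaway loops.

```python
import re
from typing import Any, Callable


def get_order_status(order_id: str) -> str:
    """Hypothetical tool the agent can call (a real agent would expose plugin functions)."""
    return {"A100": "shipped", "A200": "processing"}.get(order_id, "unknown")


TOOLS: dict[str, Callable[..., str]] = {"get_order_status": get_order_status}


def scripted_model(messages: list[dict[str, Any]]) -> dict[str, Any]:
    """Stand-in for a function-calling LLM (an assumption, not a real API).
    First turn: request a tool call. Once a tool result exists: answer."""
    order_id = re.search(r"\b[A-Z]\d{3}\b", messages[0]["content"]).group()
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "get_order_status", "args": {"order_id": order_id}}
    return {"answer": f"Order {order_id} is {tool_results[-1]['content']}."}


def run_agent(user_input: str, max_steps: int = 5) -> str:
    messages: list[dict[str, Any]] = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        decision = scripted_model(messages)
        if "answer" in decision:
            return decision["answer"]
        # The model requested a tool: run it and feed the result back.
        result = TOOLS[decision["tool"]](**decision["args"])
        messages.append({"role": "tool", "name": decision["tool"], "content": result})
    return "Stopped: step limit reached."


print(run_agent("Where is my order A100?"))  # Order A100 is shipped.
```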

- Core Capabilities of the AI Agent Framework

- Multi-Agent Collaboration: AI agents can communicate and work together, with specialized agents handling distinct tasks such as data retrieval, decision support, and workflow execution.

- Dynamic Function Invocation: Agents autonomously call APIs, query databases, and trigger external workflows in real time, reducing the need for manual intervention.

- Hybrid RAG Optimization: Enhances AI decision-making by combining semantic search, structured data retrieval, and real-time information processing, ensuring accuracy and contextual relevance.

- Retrieval-Augmented Fine-Tuning (RAFT): Fine-tunes a model on domain questions paired with retrieved documents, including irrelevant distractors, so it learns to rely on relevant context; this helps AI specialize in high-stakes domains like finance, healthcare, and legal.

- Human-Agent Collaboration: Agents can integrate human feedback into workflows, ensuring human oversight in decision-making where required, such as compliance reviews or financial risk assessments.

- Process Orchestration: Agents coordinate different tasks across enterprise systems, tools, and APIs, automating end-to-end workflows like cloud orchestration, fraud detection, or document processing.

- Modular & Adaptable Architecture: Developers can create different types of agents for specific tasks (e.g., API integration, data scraping, content generation), making applications scalable and easier to maintain.

- When to Use AI Agents

- Autonomy and Decision-Making: Systems that need to make real-time decisions and adapt to changing conditions, such as fraud detection or intelligent document processing.

- Multi-Agent Collaboration: Complex workflows where different agents handle distinct tasks, such as risk assessment in financial services or supply chain automation.

- Interactive, Goal-Oriented AI: AI systems that interact with users to achieve specific objectives, such as virtual assistants, AI-driven customer support, or legal contract analysis.

- Real-World Applications of Multi-Agent AI

- Enterprise Workflow Automation: AI-driven document processing, compliance tracking, and intelligent resource allocation.

- AI-Powered Customer Support: AI assistants that triage customer inquiries, retrieve relevant data, and escalate cases to human agents when needed.

- Financial & Risk Analysis: AI agents that analyze financial data, detect fraud patterns, and optimize risk assessment processes.

- Healthcare AI Assistants: AI-powered medical transcription, automated patient interactions, and compliance-driven decision-making.

By leveraging Semantic Kernel’s AI Agent Framework, enterprises can build adaptive AI systems that automate decision-making, streamline operations, and enhance productivity across industries.

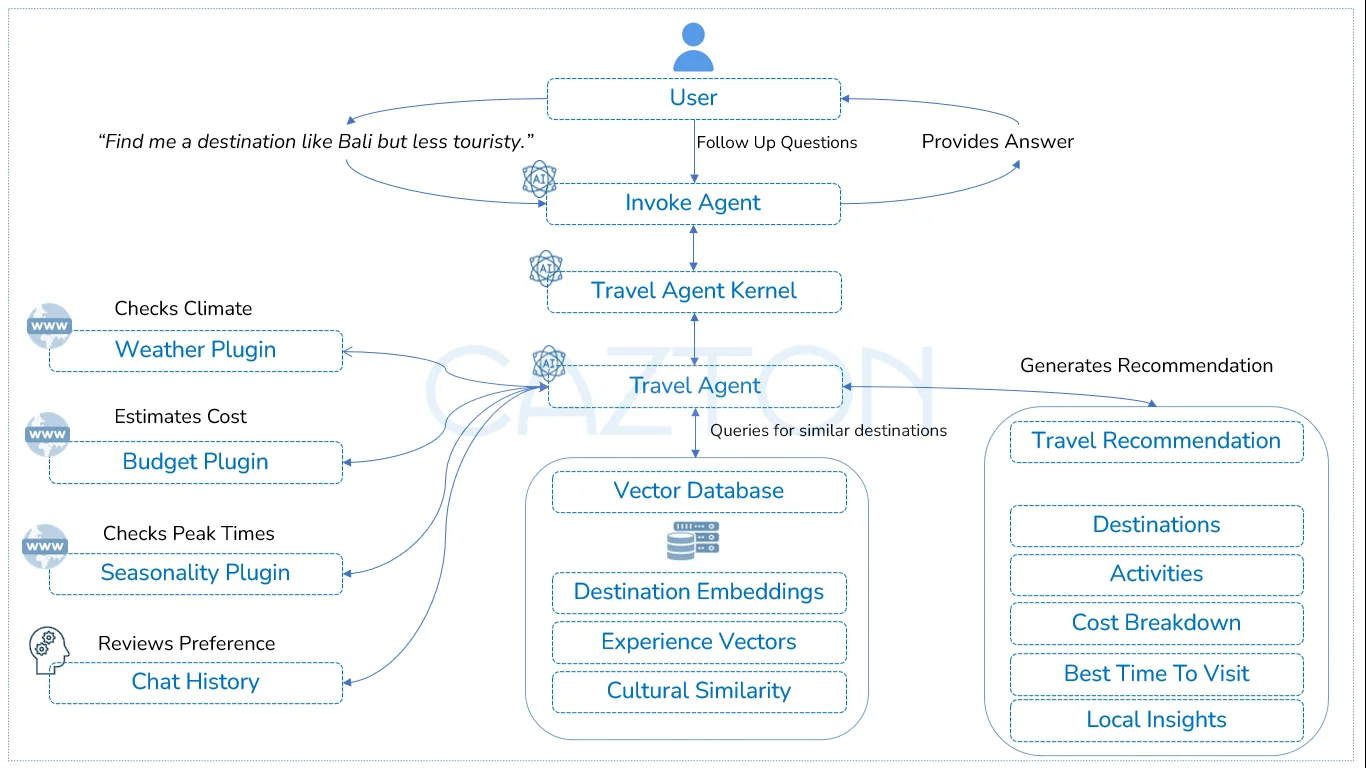

AI agent-based travel recommendation system using Semantic Kernel
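The customer-support pattern listed earlier, in which a triage step routes work to specialized agents and escalates to humans when needed, can be sketched as follows. Keyword matching stands in for the LLM-based classification a real system would use, and `triage_agent`, `billing_agent`, and `tech_agent` are hypothetical names; this illustrates the routing and escalation logic only, not Semantic Kernel's agent classes.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Ticket:
    text: str
    handled_by: str = ""
    reply: str = ""


def billing_agent(ticket: Ticket) -> str:
    return "Billing: reviewing the charges on your account."


def tech_agent(ticket: Ticket) -> str:
    return "Tech support: collecting diagnostics for your issue."


# Hypothetical routing rules: keywords stand in for LLM-based intent classification.
ROUTES: dict[str, tuple[tuple[str, ...], Callable[[Ticket], str]]] = {
    "billing": (("invoice", "charge", "refund"), billing_agent),
    "tech": (("error", "crash", "login"), tech_agent),
}
ESCALATION_KEYWORDS = ("fraud", "legal", "complaint")


def triage_agent(ticket: Ticket) -> Ticket:
    """Route a ticket to a specialist agent, or escalate it to a human."""
    text = ticket.text.lower()
    if any(word in text for word in ESCALATION_KEYWORDS):
        ticket.handled_by = "human"
        ticket.reply = "Escalated to a human agent for review."
        return ticket
    for name, (keywords, agent) in ROUTES.items():
        if any(word in text for word in keywords):
            ticket.handled_by = name
            ticket.reply = agent(ticket)
            return ticket
    ticket.handled_by = "human"  # no specialist matched: fall back to a person
    ticket.reply = "No specialist matched; escalated to a human agent."
    return ticket


for text in ["I was charged twice on my invoice", "App crash after login", "I suspect fraud"]:
    t = triage_agent(Ticket(text))
    print(t.handled_by, "|", t.reply)
```

Checking escalation keywords first means sensitive cases reach a person even when they also mention billing or technical terms.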

Semantic Kernel and Process Framework

Semantic Kernel’s Process Framework enables organizations to design structured, AI-powered workflows that integrate intelligent decision-making with enterprise systems. This framework allows businesses to create modular, scalable, and auditable workflows that enhance automation, streamline operations, and maintain compliance.

- Core Concepts

- Process: A structured sequence of steps designed to achieve a business goal, such as account onboarding, fraud detection, or customer service automation.

- Step: An individual task within a process, responsible for specific inputs and outputs. Steps can interact with AI models, business applications, or external APIs.

- Pattern: The sequence type that dictates how steps are executed, ensuring processes follow a logical and efficient order.

- Key Features of the Process Framework

- Semantic Kernel Integration: Each step can invoke one or multiple Kernel Functions, embedding AI-driven decision-making directly into enterprise workflows.

- Reusable & Modular Design: Steps and processes can be reused across different applications, improving maintainability and accelerating AI-driven automation.

- Event-Driven Architecture: Uses events and metadata to dynamically trigger transitions between steps, ensuring real-time responsiveness.

- Full Control & Auditability: Provides built-in process tracking with OpenTelemetry, allowing organizations to monitor AI-driven decisions for security and compliance.

- Scalable Execution Patterns: Supports flexible execution patterns, enabling businesses to define process flows based on complexity, dependencies, and decision criteria.

- Real-World Applications of the Process Framework

- Account Opening: AI automates credit checks, fraud detection, and customer onboarding, integrating with enterprise systems for seamless customer registration.

- Food Delivery: AI streamlines order processing, kitchen workflows, driver assignments, and real-time customer updates, improving efficiency and delivery times.

- Support Ticket Management: AI-powered ticket triaging, dynamic routing, and resolution tracking enhance customer support operations and response efficiency.

By leveraging the Process Framework, businesses can transform traditional workflows into intelligent, AI-driven processes that scale effortlessly while ensuring accuracy, compliance, and operational efficiency.
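The process, step, and event concepts can be illustrated with a small event-driven workflow for account opening. This is a plain Python sketch under stated assumptions, not the Semantic Kernel Process Framework API: each step reads shared state and emits an event, a routing table maps events to the next step, and the returned trail is a simple stand-in for OpenTelemetry-based tracking. The step names and the credit score threshold of 650 are hypothetical.

```python
from typing import Callable, Optional

Step = Callable[[dict], str]  # a step reads shared state and returns an event name


def credit_check(state: dict) -> str:
    return "credit_approved" if state["credit_score"] >= 650 else "credit_denied"


def fraud_check(state: dict) -> str:
    return "fraud_flagged" if state["id_mismatch"] else "fraud_clear"


def create_account(state: dict) -> str:
    state["account_id"] = f"ACC-{state['customer']}"
    return "account_created"


def notify_rejection(state: dict) -> str:
    state["rejected"] = True
    return "customer_notified"


STEPS: dict[str, Step] = {
    "credit_check": credit_check,
    "fraud_check": fraud_check,
    "create_account": create_account,
    "notify_rejection": notify_rejection,
}

# Event routing: which step handles each event; None ends the process.
ROUTING: dict[str, Optional[str]] = {
    "start": "credit_check",
    "credit_approved": "fraud_check",
    "credit_denied": "notify_rejection",
    "fraud_clear": "create_account",
    "fraud_flagged": "notify_rejection",
    "account_created": None,
    "customer_notified": None,
}


def run_process(state: dict) -> list[str]:
    """Follow events from step to step and return an audit trail of transitions."""
    trail, event = [], "start"
    while (step_name := ROUTING[event]) is not None:
        event = STEPS[step_name](state)
        trail.append(f"{step_name} -> {event}")
    return trail


applicant = {"customer": "ada", "credit_score": 720, "id_mismatch": False}
print(run_process(applicant))
print(applicant["account_id"])  # ACC-ada
```

Because transitions live in a routing table rather than inside the steps, the same steps can be reused in other processes by supplying a different table.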

Semantic Kernel vs. LangChain

As AI adoption grows, developers and enterprises need robust frameworks to integrate large language models (LLMs) into real-world applications. Semantic Kernel and LangChain are two popular AI orchestration frameworks designed to enhance AI capabilities. While both offer tools for building intelligent applications, they differ in performance, language support, model compatibility, and use cases. Below is a structured comparison to help you choose the right framework for your needs. For a deep dive into LangChain, check out our detailed article here.

- Performance & Efficiency:

- Semantic Kernel: Optimized for asynchronous execution, reducing latency in AI-driven workflows. Efficient memory management improves response times in enterprise applications.

- LangChain: Offers ease of use but may have higher latency due to synchronous execution in certain pipelines, especially when handling complex chains.

- Language Support:

- Semantic Kernel: Primarily supports Java, C# and Python, making it ideal for enterprise applications and .NET ecosystems.

- LangChain: Built mainly for Python and JavaScript, offering greater flexibility for web and data science applications.

- Model Compatibility:

- Semantic Kernel: Natively integrates with OpenAI, Azure OpenAI, Google Gemini, and Hugging Face models, with strong support for enterprise AI solutions.

- LangChain: Supports a wider range of models, including OpenAI, Azure OpenAI, Google Gemini, Cohere, Anthropic Claude, Hugging Face, and self-hosted LLMs.

- AI Orchestration & Workflow Automation:

- Semantic Kernel: Offers Planners and AI Agents that autonomously execute multi-step tasks and interact with external APIs.

- LangChain: Uses Agents and Chains to handle complex reasoning tasks; multi-agent collaboration is typically built with its companion library, LangGraph, rather than the core framework.

- Features & Extensibility:

- Semantic Kernel: Provides Memory, Function Calling, Prompt Templates, AI Service Connectors, and Vector Store Integration for modular AI development.

- LangChain: Offers Retrieval-Augmented Generation (RAG), Chains, Memory, and Tools, making it well-suited for quick prototyping and research applications.

- Memory & Context Retention:

- Semantic Kernel: Uses persistent memory and vector-based retrieval, allowing AI models to retain and recall long-term context efficiently.

- LangChain: Provides short-term memory (session-based) and vector store memory, but managing long-term context persistence requires additional customization.

- Enterprise Integration:

- Semantic Kernel: Designed for seamless integration with enterprise systems, including Microsoft Graph, Azure services, and on-prem solutions.

- LangChain: Primarily focused on cloud-based AI applications, with integrations for APIs, databases, and vector stores but less emphasis on enterprise environments.

- Developer Ecosystem & Community:

- Semantic Kernel: Backed by Microsoft, with growing adoption in enterprise AI and strong .NET developer support.

- LangChain: Has a larger open-source community, making it easier to find third-party integrations and experimental AI tools.

Challenges with Semantic Kernel

- Retrieval Inefficiencies & Latency

- Challenge: AI-powered applications often struggle with slow response times and inaccurate retrieval when working with large datasets. Poor indexing strategies and inefficient retrieval mechanisms can lead to high latency and irrelevant results, reducing AI accuracy and usability.

- Solution:

- Hybrid retrieval (semantic + keyword-based search) improves accuracy by allowing AI to search both vector embeddings and structured databases.

- Optimized vector indexing in Azure AI Search, Pinecone, FAISS, and pgvector ensures faster query execution.

- Hierarchical document chunking segments large documents into retrievable pieces, improving search precision and reducing query processing time.

- Business Impact: By optimizing retrieval efficiency, enterprises experience faster AI response times, reduced latency, and improved answer accuracy, making AI-driven search applications more effective for real-world business use cases.

- AI Model Accuracy & Context Retention

- Challenge: Generic LLMs often fail to retain business-specific context, leading to irrelevant, hallucinated, or incomplete responses. Without proper memory mechanisms, AI assistants may struggle with long-term context awareness, making them unsuitable for financial, healthcare, or legal applications that require high accuracy and compliance.

- Solution:

- Fine-tuned domain-specific LLMs ensure AI understands industry-specific terminology and regulations.

- Memory-optimized retrieval (RAG + persistent storage) enables AI to retain context across multiple interactions.

- Multi-turn memory storage using Redis, PostgreSQL, and vector databases improves long-term conversational AI performance.

- Business Impact: AI applications deliver accurate, context-aware responses, reducing errors, misinformation, and user frustration while improving decision-making in enterprise environments.

- AI Workflow Scalability & Execution Bottlenecks

- Challenge: As AI-powered applications grow in complexity, execution bottlenecks can slow down multi-agent AI workflows. Inefficient task coordination and synchronous execution models can lead to delays in processing requests, redundant operations, and increased computational costs.

- Solution:

- Dependency-aware execution graphs optimize task scheduling and AI reasoning, reducing redundant processing.

- Parallel and asynchronous execution improves workflow speeds, allowing AI agents to handle multiple requests efficiently.

- Persistent session storage (Redis, PostgreSQL) ensures seamless multi-user interactions, even in high-concurrency environments.

- Business Impact: AI-driven workflows become faster, more efficient, and cost-effective, enabling enterprises to scale AI deployments without performance degradation.

- Security & Compliance Risks

- Challenge: AI applications processing sensitive data (financial, legal, healthcare) must adhere to strict security and regulatory standards such as HIPAA, GDPR, and SEC regulations. Without proper safeguards, data leaks, unauthorized access, and compliance violations can occur, exposing enterprises to legal and financial risks.

- Solution:

- Role-based access controls (RBAC) ensure only authorized users can access specific AI functionalities.

- Audit logging & traceability provide detailed records of AI interactions to support regulatory audits and compliance checks.

- Private LLM deployment (Azure OpenAI, on-prem models) ensures secure AI execution in enterprise-controlled environments.

- Business Impact: Enterprises reduce compliance risks, enhance data security, and maintain control over AI-driven operations, ensuring AI applications meet legal and regulatory requirements.
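Dependency-aware execution and parallel, asynchronous execution combine naturally. The sketch below uses Python's standard `graphlib.TopologicalSorter` with `asyncio`: every task whose dependencies have completed runs concurrently, and downstream tasks start only when their inputs are ready. The task names and simulated delays are hypothetical placeholders for real I/O such as database or model calls.

```python
import asyncio
from graphlib import TopologicalSorter
from typing import Any, Awaitable, Callable

Task = Callable[[dict[str, Any]], Awaitable[Any]]


async def run_graph(graph: dict[str, set[str]], tasks: dict[str, Task]) -> dict[str, Any]:
    """Run tasks in dependency order, executing every ready task concurrently."""
    sorter = TopologicalSorter(graph)  # maps each task to the tasks it depends on
    sorter.prepare()
    results: dict[str, Any] = {}
    while sorter.is_active():
        ready = sorter.get_ready()
        outputs = await asyncio.gather(*(tasks[name](results) for name in ready))
        for name, output in zip(ready, outputs):
            results[name] = output
            sorter.done(name)
    return results


async def fetch_customer(results: dict[str, Any]) -> dict[str, str]:
    await asyncio.sleep(0.1)  # simulated I/O, e.g. a database query
    return {"name": "Ada"}


async def fetch_orders(results: dict[str, Any]) -> list[float]:
    await asyncio.sleep(0.1)  # simulated I/O, e.g. an API call
    return [120.0, 80.0]


async def score_risk(results: dict[str, Any]) -> str:
    return "low" if sum(results["fetch_orders"]) < 500 else "high"


async def write_report(results: dict[str, Any]) -> str:
    return f"{results['fetch_customer']['name']}: risk {results['score_risk']}"


GRAPH = {
    "fetch_customer": set(),
    "fetch_orders": set(),
    "score_risk": {"fetch_customer", "fetch_orders"},
    "write_report": {"score_risk"},
}
TASKS = {"fetch_customer": fetch_customer, "fetch_orders": fetch_orders,
         "score_risk": score_risk, "write_report": write_report}

results = asyncio.run(run_graph(GRAPH, TASKS))
print(results["write_report"])  # Ada: risk low
```

The two fetch tasks have no dependencies, so they run concurrently and the graph finishes in roughly one simulated delay rather than two.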

How Cazton Can Help You With Semantic Kernel

Cazton helps enterprises unlock the full potential of Semantic Kernel by delivering AI-driven solutions that enhance automation, decision-making, and enterprise integration. Our team is composed of PhD and Master's-level experts in AI, data science and machine learning, award-winning Microsoft AI MVPs, open-source contributors, and seasoned industry professionals with years of hands-on experience.

We specialize in designing tailored AI solutions that integrate seamlessly with enterprise applications, databases, and cloud platforms, ensuring real-world efficiency and business impact.

Key Services We Offer

- AI Architecture & Strategy: Define AI roadmaps and system design for scalable Semantic Kernel-powered solutions.

- Custom AI Application Development: Build enterprise AI solutions, including intelligent assistants, automated workflows, and decision-support systems.

- Retrieval-Augmented Generation (RAG) Implementation: Optimize AI search capabilities with advanced retrieval techniques, vector databases, and memory management.

- AI Agent Development: Design multi-agent AI systems for automating complex workflows, API interactions, and real-time decision-making.

- Enterprise Integration Services: Connect Semantic Kernel with Microsoft Graph, Azure AI, OpenAI, external APIs, and business applications.

- Performance & Scalability Optimization: Improve AI latency, vector retrieval speed, and workload distribution for large-scale deployments.

- Security & Compliance Solutions: Implement role-based access, data protection strategies, and compliance with HIPAA, GDPR, and industry standards.

- AI DevOps & MLOps Solutions: Automate model versioning, monitoring, logging, and continuous AI model updates.

- Training & Knowledge Transfer: Offer developer training, architecture workshops, and best practices sessions for AI-powered applications.

- Innovation & Research Consulting: Develop proof-of-concept (POC) solutions in just weeks on your own data, prototype AI use cases, and drive AI innovation in enterprises.

At Cazton, we don’t just integrate AI; we help businesses transform AI into a strategic advantage. Our hands-on expertise ensures you get scalable, production-ready AI solutions tailored to your industry needs. Our clients trust us to consistently deliver complex projects within tight deadlines.

We have not only saved them millions of dollars but also demonstrated time and again our ability to exceed expectations, providing innovative solutions and unparalleled value. Let us help you turn your challenges into opportunities and your vision into results. Reach out today, and let's make it happen together!

Cazton is composed of technical professionals with expertise gained all over the world and in all fields of the tech industry, and we put this expertise to work for you. We serve all industries, including banking, finance, legal services, life sciences & healthcare, technology, media, and the public sector. Check out some of our services:

- Artificial Intelligence

- Big Data

- Web Development

- Mobile Development

- Desktop Development

- API Development

- Database Development

- Cloud

- DevOps

- Enterprise Search

- Blockchain

- Enterprise Architecture

Cazton has expanded into a global company, serving clients not only across the United States but also in Oslo, Norway; Stockholm, Sweden; London, England; Berlin, Germany; Frankfurt, Germany; Paris, France; Amsterdam, Netherlands; Brussels, Belgium; Rome, Italy; Sydney and Melbourne, Australia; and Quebec City, Toronto, Vancouver, Montreal, Ottawa, Calgary, Edmonton, Victoria, and Winnipeg, Canada. In the United States, we provide our consulting and training services across various cities like Austin, Dallas, Houston, New York, New Jersey, Irvine, Los Angeles, Denver, Boulder, Charlotte, Atlanta, Orlando, Miami, San Antonio, San Diego, San Francisco, San Jose, Stamford and others. Contact us today to learn more about what our experts can do for you.