Evals Consulting

- From “hope” to hard numbers: Learn how the world’s first GPT-4 evaluation team converts AI hype into board-level ROI you can prove.

- The executive blueprint: Discover the metrics, dashboards, and governance steps that link model accuracy to revenue, risk and strategic goals.

- Real-world proof points: See how finance, healthcare, manufacturing, retail and insurance clients used evals to cut fraud, improve care, slash downtime and lift customer lifetime value.

- Compliance turned competitive edge: Understand the bias, privacy and regulatory tests that keep regulators satisfied and customers trusting.

- What’s next in AI evals: Prepare for autonomous agents, continuous-learning systems and multi-agent ecosystems with action frameworks that drive nonstop improvement.

- Microsoft and Cazton: We work closely with OpenAI, as well as with the Azure OpenAI team and many other Microsoft teams. We thank Microsoft for providing us with very early access to critical technologies. We are fortunate to have been working on GPT-3 since 2020, a couple of years before ChatGPT was launched.

- Top clients: We help Fortune 500, large, mid-size and startup companies with Big Data and AI development, deployment (MLOps), consulting, recruiting services and hands-on training services. Our clients include Microsoft, Google, Broadcom, Thomson Reuters, Bank of America, Macquarie, Dell and more.

Are you confident your AI initiatives are delivering the ROI your board expects? Can you demonstrate to stakeholders that your AI investments are creating measurable business value? Most executives struggle to answer these questions with certainty. While organizations rush to implement AI solutions, many lack systematic approaches to measure performance, validate outcomes, and ensure alignment with business objectives.

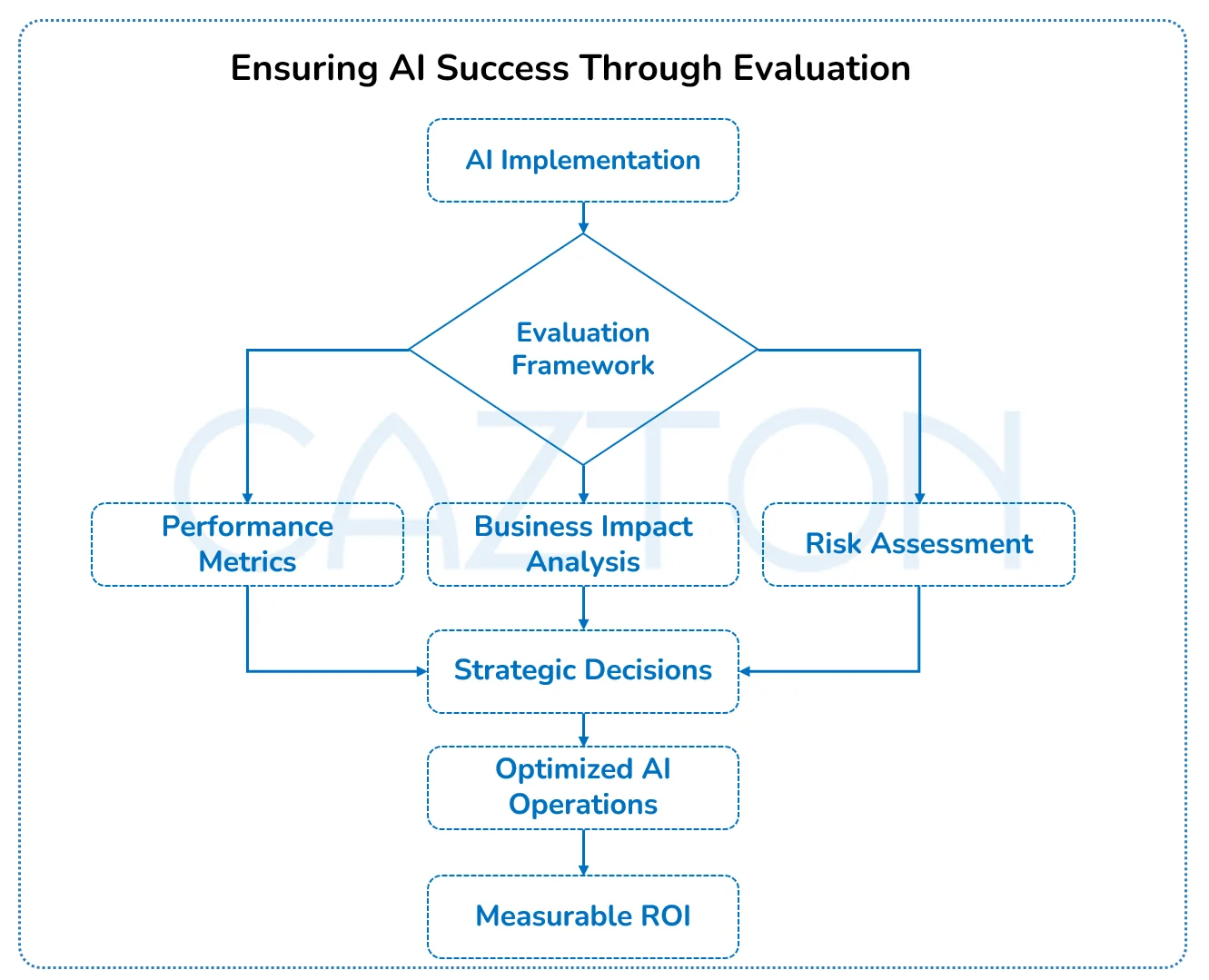

AI Evaluations represent a fundamental shift from hoping AI works to knowing it delivers results. These comprehensive assessment frameworks transform subjective impressions into objective metrics, providing the clarity executives need to make informed decisions about AI investments.

Our pioneering work in AI evaluation spans years of innovation and real-world applications. We were the first company globally to implement evaluations on GPT-4, months ahead of OpenAI’s own open-source contributions, pioneering methodologies that have since become industry standards. This early expertise positions us uniquely to help your organization navigate the complexities of AI assessment with confidence and precision.

Our evaluation methodology incorporates both black-box and white-box testing approaches, leveraging techniques such as:

- Prompt engineering optimization with statistical validation

- Fine-grained performance analysis using confusion matrices and ROC curves

- Automated regression testing frameworks for continuous model monitoring

- Bayesian optimization for hyperparameter tuning in evaluation pipelines
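As a small illustration of the confusion-matrix and ROC analysis listed above, the sketch below computes a binary confusion matrix at a fixed score threshold and the ROC AUC using the rank (Mann-Whitney) formulation, where AUC is the probability that a randomly chosen positive example scores higher than a randomly chosen negative one. It uses only the standard library; the labels, scores, and the 0.5 threshold are made-up assumptions, and production pipelines would more typically rely on a library such as scikit-learn.

```python
from typing import Dict, Sequence


def confusion_matrix(labels: Sequence[int], scores: Sequence[float],
                     threshold: float = 0.5) -> Dict[str, int]:
    """Count TP, FP, TN, FN for binary labels (1 = positive) at a score threshold."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for y, s in zip(labels, scores):
        predicted = 1 if s >= threshold else 0
        if predicted == 1 and y == 1:
            counts["tp"] += 1
        elif predicted == 1 and y == 0:
            counts["fp"] += 1
        elif predicted == 0 and y == 0:
            counts["tn"] += 1
        else:
            counts["fn"] += 1
    return counts


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as P(score of random positive > score of random negative); ties count 0.5.

    This pairwise version is O(P * N), which is fine for small evaluation sets.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("ROC AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Hypothetical ground-truth labels and model scores for eight evaluation cases.
    labels = [1, 0, 1, 1, 0, 0, 1, 0]
    scores = [0.9, 0.4, 0.65, 0.3, 0.55, 0.1, 0.8, 0.2]
    cm = confusion_matrix(labels, scores)
    precision = cm["tp"] / (cm["tp"] + cm["fp"])
    recall = cm["tp"] / (cm["tp"] + cm["fn"])
    print(cm, round(precision, 3), round(recall, 3), round(roc_auc(labels, scores), 3))
```

The confusion matrix depends on the chosen threshold, while AUC summarizes ranking quality across all thresholds, which is why the two are usually reported together.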

What Makes AI Evaluation Different for Your Enterprise

Enterprise AI evaluation differs fundamentally from academic benchmarks. While academic evaluations focus on general capabilities, your business requires assessments aligned with specific business outcomes, industry regulations, and organizational values. We create evaluation frameworks that match your business goals and are built on strong technical foundations.

AI evaluation frameworks must address:

- Business alignment and ROI validation

- Regulatory compliance and governance requirements

- Ethical considerations specific to your industry

- Integration with existing enterprise systems

- Performance at enterprise scale and under real-world conditions

These enterprise-specific considerations require evaluation methodologies that go beyond standard technical metrics to include business impact measurements that matter to your stakeholders. Our approach transforms complex technical assessments into clear, actionable insights that drive strategic decision-making and demonstrate tangible value to your organization.

From a technical perspective, our enterprise evaluation frameworks implement:

- Multi-dimensional performance matrices that track both model accuracy and computational efficiency

- Custom evaluation harnesses that simulate production loads and edge cases

- Distributed evaluation pipelines that scale with your infrastructure

- Version control for evaluation datasets to ensure reproducibility and fair comparisons between model iterations
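One lightweight way to support the dataset versioning described above is to fingerprint each evaluation set by hashing a canonical serialization of its records, then store that fingerprint with every evaluation run so scores are only compared across runs that used identical data. The sketch below assumes records are JSON-serializable dicts; the function names are hypothetical, and dedicated tools such as DVC address the same need at larger scale.

```python
import hashlib
import json
from typing import Iterable


def dataset_fingerprint(records: Iterable[dict]) -> str:
    """Return a SHA-256 hex digest identifying an evaluation dataset's exact contents.

    Keys are sorted so dict key order does not change the hash. Record order does
    matter, so sort records upstream if their order is not meaningful.
    """
    digest = hashlib.sha256()
    for record in records:
        line = json.dumps(record, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")  # delimiter between records (json.dumps escapes raw newlines)
    return digest.hexdigest()


def comparable(run_a: dict, run_b: dict) -> bool:
    """Two evaluation runs are only comparable if they used the same dataset fingerprint."""
    return run_a["dataset_fingerprint"] == run_b["dataset_fingerprint"]


if __name__ == "__main__":
    # Hypothetical evaluation records and runs.
    v1 = [{"id": 1, "prompt": "Refund policy?", "expected": "30 days"}]
    v2 = [{"expected": "30 days", "prompt": "Refund policy?", "id": 1}]  # same content, new key order
    v3 = [{"id": 1, "prompt": "Refund policy?", "expected": "60 days"}]  # edited label
    run_a = {"model": "model-v1", "dataset_fingerprint": dataset_fingerprint(v1)}
    run_b = {"model": "model-v2", "dataset_fingerprint": dataset_fingerprint(v2)}
    run_c = {"model": "model-v2", "dataset_fingerprint": dataset_fingerprint(v3)}
    print(comparable(run_a, run_b), comparable(run_a, run_c))
```

Here run_a and run_b are comparable because only key order differs, while run_c is not, because a ground-truth label changed.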

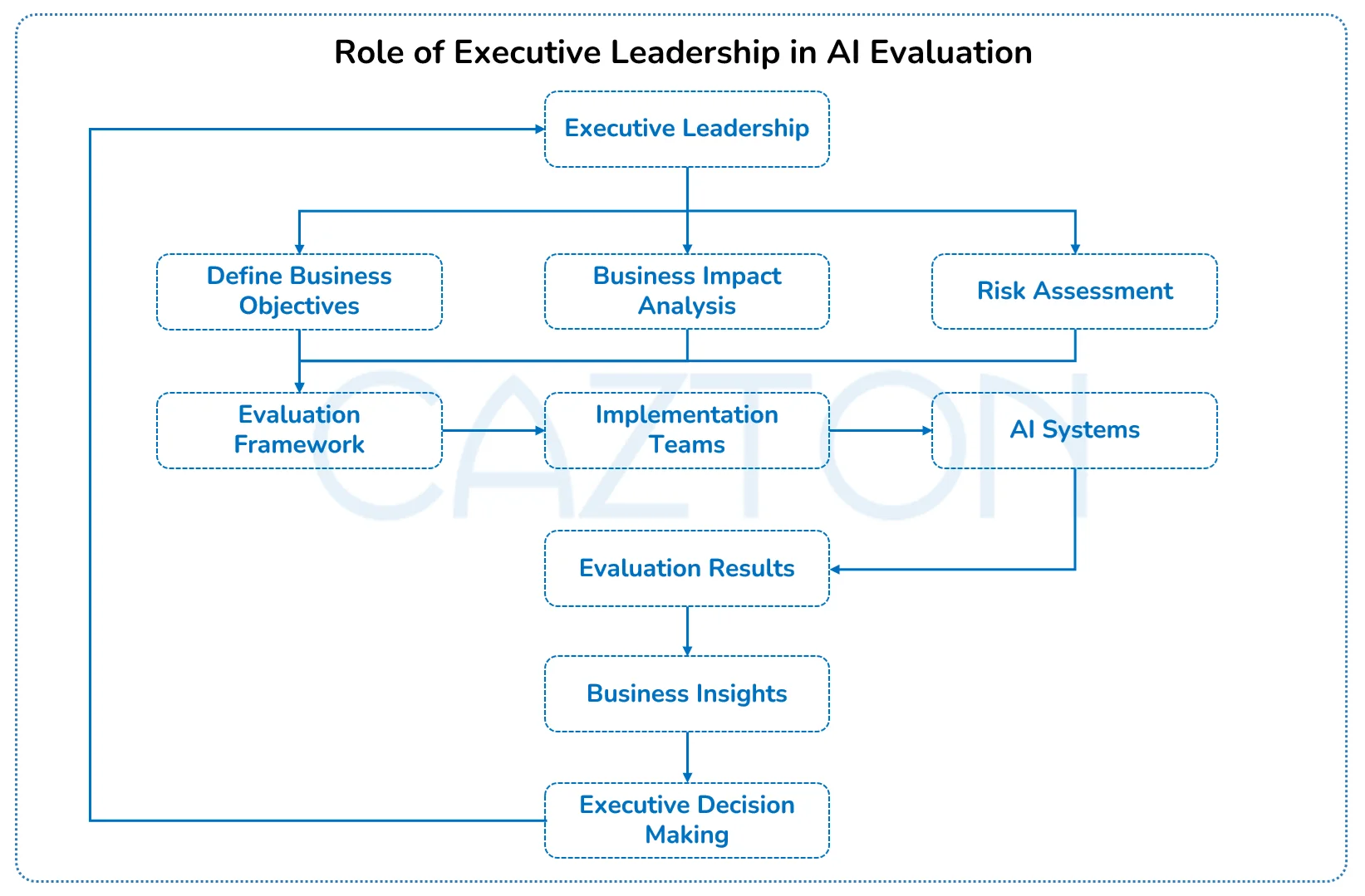

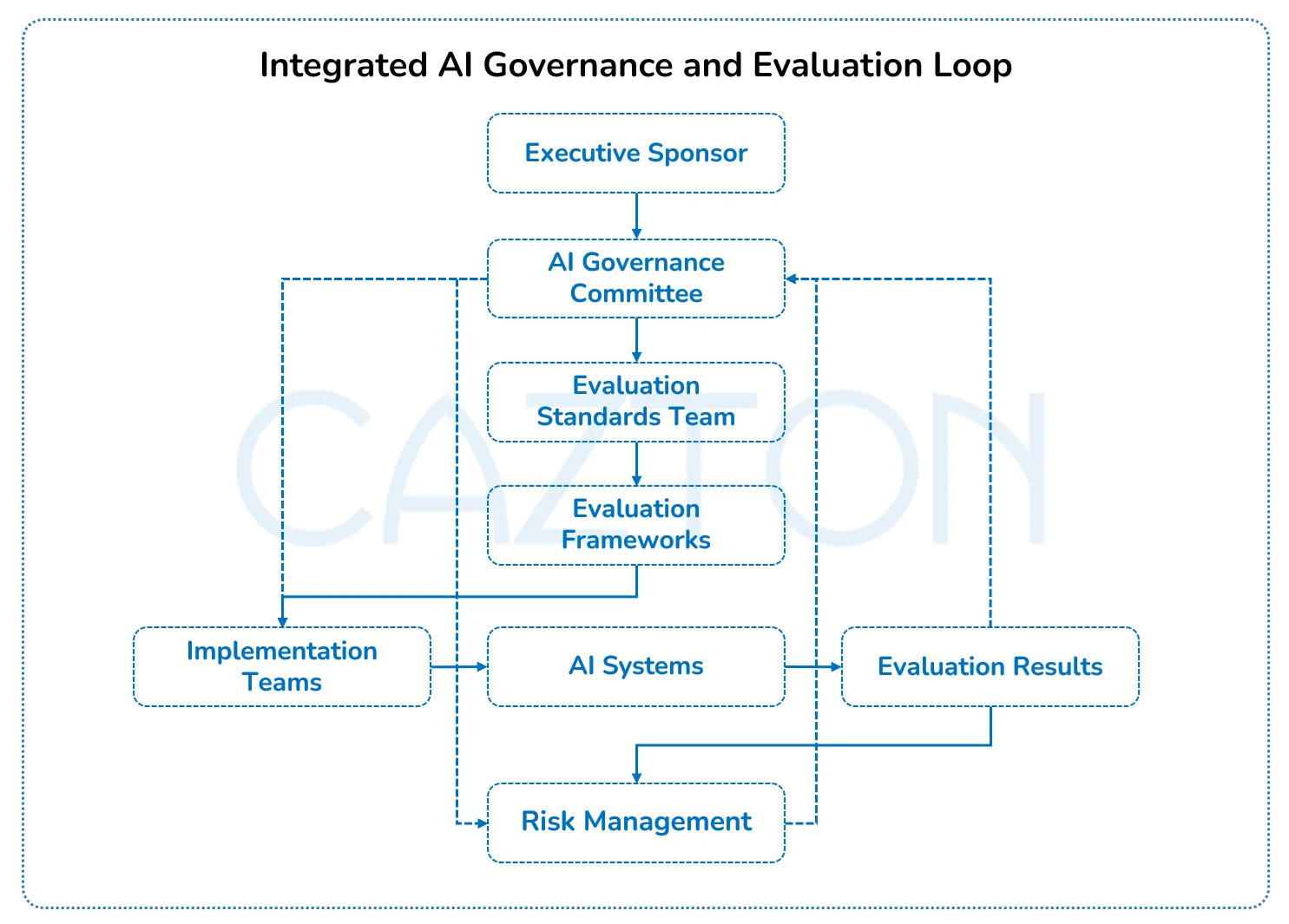

How Your Leadership Drives AI Evaluation Success

As an executive, your involvement in AI evaluation strategy is not optional; it is essential. You must establish evaluation standards that align with organizational goals, ensure accountability across teams, and create frameworks that translate technical performance into business language. When leadership actively participates in defining evaluation criteria, teams gain clarity on priorities and can better align technical implementations with business goals.

Your leadership role includes:

- Setting clear expectations for what success looks like

- Ensuring evaluation metrics align with strategic objectives

- Allocating appropriate resources for thorough evaluation

- Creating a culture that values measured outcomes over hype

- Making informed decisions based on evaluation results

Without executive leadership, AI evaluations risk becoming purely technical exercises with limited business impact. Your involvement ensures these assessments are aligned with strategic goals and drive real organizational change. By championing thoughtful evaluation practices, you help turn AI from a standalone initiative into a catalyst for measurable business transformation.

We work directly with executives to design evaluation frameworks that reflect your strategic priorities. Our approach ensures that every metric connects to the business outcomes you care about, from operational efficiency to customer satisfaction.

How AI Evals Directly Impact Your Business Outcomes

When properly implemented, AI evaluation frameworks create a direct line of sight between technology investments and business results. This connection allows you to make informed decisions about resource allocation, risk management, and strategic direction with confidence.

- Immediate business benefits:

  - Identify which AI capabilities deliver the most business value

  - Redirect resources from underperforming initiatives

  - Reduce risk by catching issues before they impact customers

  - Build stakeholder confidence through transparent reporting

  - Create competitive advantage through continuous improvement

- Cross-functional impact: Consider how evaluation insights can transform decision-making across your organization:

  - Marketing teams can understand which AI-driven customer experiences increase conversion rates and customer lifetime value.

  - Operations can quantify productivity improvements from AI automation and identify further optimization opportunities.

  - Risk management can verify that AI systems comply with regulatory requirements.

  - Product development can prioritize AI features that customers value.

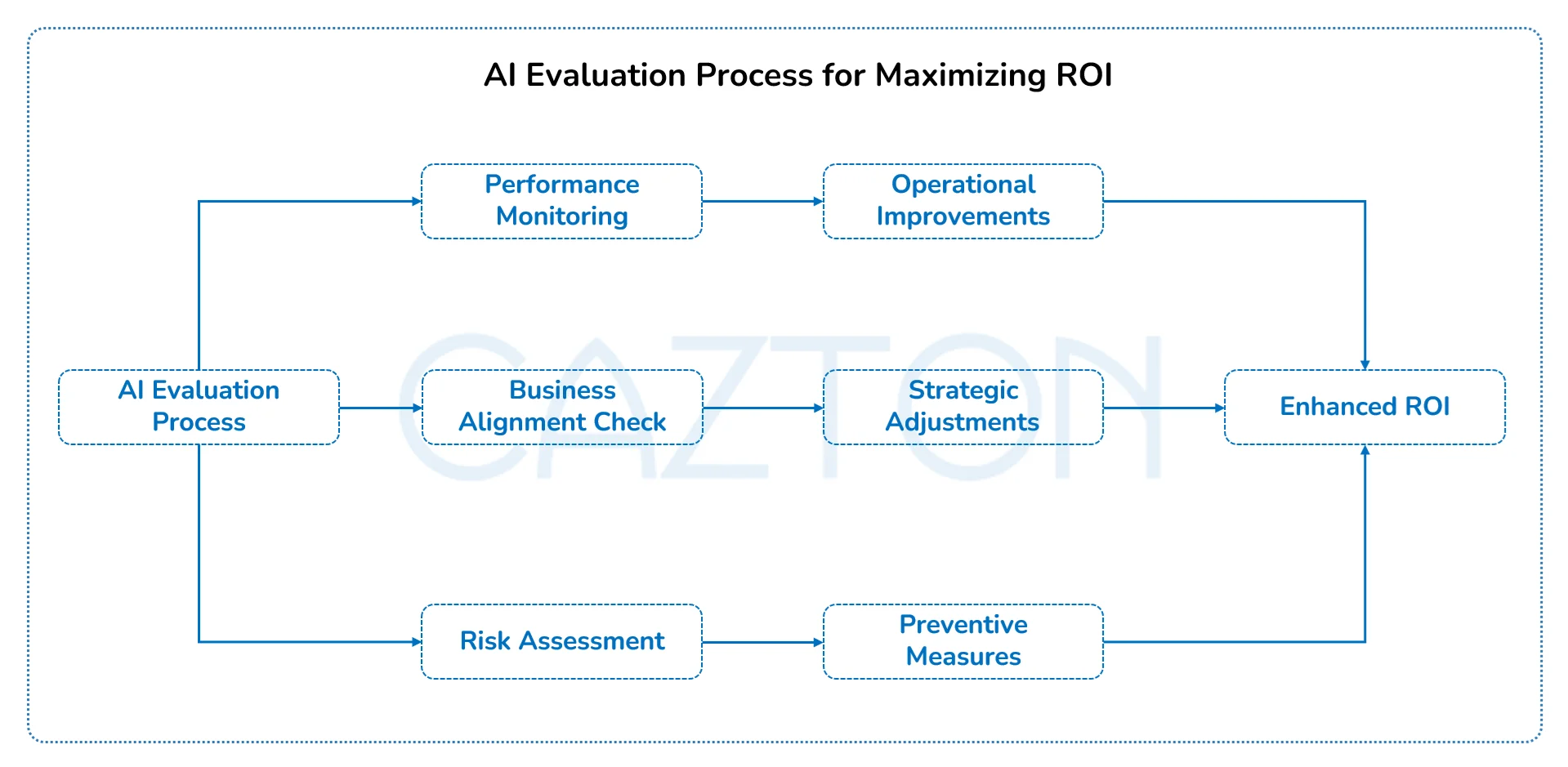

- Operational excellence: AI evaluations identify bottlenecks, inefficiencies, and optimization opportunities within your AI systems. This leads to improved processing speeds, reduced costs, and enhanced resource utilization across your technology stack.

- Risk mitigation: Systematic evaluation reveals potential failures before they impact operations. You gain visibility into model drift, data quality issues, and performance degradation that could otherwise cause significant business disruption or compliance violations.

- Strategic alignment: Regular assessments ensure AI initiatives continue supporting your business objectives as markets evolve and priorities shift, maintaining relevance and maximizing return on investment.

By establishing this connection between evaluation and outcomes, you ensure that AI investments serve business goals rather than technology for its own sake. We help you design evaluation frameworks that translate technical performance into business language, creating clear pathways from AI capabilities to measurable business impact. Our methodologies ensure that every evaluation metric connects directly to outcomes that matter to your stakeholders, from board members to operational teams.

Technical implementation example:

- For a financial services client, we implemented a multi-stage evaluation pipeline that:

  - Captured ground truth data through a shadow deployment architecture

  - Applied statistical significance testing to validate improvements

  - Implemented A/B testing frameworks with proper experimental controls

  - Created automated feedback loops that triggered retraining when performance drifted beyond thresholds

  - Generated Git-integrated reports that tied model versions to business KPIs

This technical approach enabled precise attribution of $4.2M in fraud reduction to specific model improvements.
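The statistical significance testing and A/B testing steps listed above can be illustrated with a two-proportion z-test, for example comparing the share of fraud cases caught by a control model and a candidate model in two randomized arms. This is a minimal standard-library sketch: the counts are invented for illustration and are not the client figures above, and a real experiment would also fix its sample size and significance level before collecting data.

```python
import math
from typing import Tuple


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> Tuple[float, float]:
    """Two-sided z-test for a difference between two proportions, using the pooled estimate.

    Returns (z, p_value). Assumes independent samples large enough for the normal approximation.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variance: both arms are all successes or all failures")
    z = (p_b - p_a) / se
    # Two-sided p-value: P(|Z| >= |z|) = erfc(|z| / sqrt(2)) for a standard normal Z.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical A/B numbers: fraud cases caught out of fraud cases seen in each arm.
    z, p = two_proportion_z_test(820, 1000, 860, 1000)
    alpha = 0.05  # significance level fixed before the experiment
    print(f"z = {z:.2f}, p = {p:.4f}, significant = {p < alpha}")
```

With these made-up counts (82% vs. 86% of fraud caught), z is about 2.44 and p is about 0.015, so the improvement would be judged significant at the 5% level.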

What You Need to Know About the Metrics That Matter

Different stakeholders require different evaluation metrics. As a senior leader, you need a balanced view that includes both technical performance and business impact measures. We help you design comprehensive metric frameworks that provide the right information to the right people at the right time, ensuring that technical teams understand business priorities while executives gain confidence in AI performance.

Key metric categories include:

Business impact metrics:

- Revenue influence through improved customer acquisition and retention

- Cost reduction via automation and process optimization

- Customer satisfaction improvement measured through engagement and loyalty

- Time-to-market acceleration for new products and services

- Risk mitigation effectiveness across operational and compliance areas

- Market share growth through AI-enabled competitive advantages

- Employee productivity gains from AI-assisted workflows

Technical performance metrics:

- Accuracy and precision across diverse use cases and data sets

- Latency and throughput under varying load conditions

- Robustness to real-world inputs and edge cases

- Fairness across user segments and demographic groups

- Security resilience against adversarial attacks and data breaches

- Model interpretability and explainability for critical decisions

- Data quality impact on system performance

Operational metrics:

- Integration effectiveness with existing enterprise systems

- Maintenance requirements and ongoing operational overhead

- Scalability under load without proportional cost increases

- Dependency on specialized skills and training requirements

- Total cost of ownership including infrastructure and support

- Deployment success rates and rollback capabilities

- User adoption rates and satisfaction with AI-assisted processes

Strategic alignment metrics:

- Contribution to key business objectives and strategic initiatives

- Innovation pipeline enhancement through AI capabilities

- Competitive positioning improvements

- Regulatory compliance maintenance and audit readiness

- Stakeholder confidence levels and communication effectiveness

By focusing on this balanced scorecard approach, you can evaluate AI systems holistically rather than being limited to technical metrics alone. We work with your leadership team to establish metric hierarchies that cascade from strategic objectives down to operational measures, ensuring that every evaluation activity supports your broader business goals.

Our approach includes creating executive dashboards that translate complex technical metrics into clear business insights, enabling confident decision-making at every organizational level.
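To make the idea of a cascading metric hierarchy concrete, the sketch below rolls normalized leaf metrics up into category scores and then into a single scorecard value using weights. The four categories mirror the groups above, but the metric names, the weights, and the assumption that every metric has already been normalized to a 0 to 1 scale where higher is better are hypothetical choices for illustration; in practice the weights would be agreed with leadership.

```python
from typing import Dict


def weighted_average(values: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted mean of `values`, using the keys listed in `weights`."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(values[name] * w for name, w in weights.items()) / total_weight


def scorecard(metrics: Dict[str, Dict[str, float]],
              metric_weights: Dict[str, Dict[str, float]],
              category_weights: Dict[str, float]) -> Dict[str, float]:
    """Roll normalized metrics (0..1, higher is better) up to category scores and an overall score."""
    categories = {
        category: weighted_average(metrics[category], metric_weights[category])
        for category in category_weights
    }
    categories["overall"] = weighted_average(categories, category_weights)
    return categories


if __name__ == "__main__":
    # Hypothetical normalized metrics; latency is already converted so that higher is better.
    metrics = {
        "business": {"cost_reduction": 0.7, "csat_lift": 0.6},
        "technical": {"accuracy": 0.9, "p95_latency": 0.8},
        "operational": {"adoption": 0.5},
        "strategic": {"audit_readiness": 1.0},
    }
    metric_weights = {
        "business": {"cost_reduction": 2, "csat_lift": 1},
        "technical": {"accuracy": 3, "p95_latency": 1},
        "operational": {"adoption": 1},
        "strategic": {"audit_readiness": 1},
    }
    category_weights = {"business": 0.4, "technical": 0.3, "operational": 0.2, "strategic": 0.1}
    for name, value in scorecard(metrics, metric_weights, category_weights).items():
        print(f"{name:12s} {value:.3f}")
```

Keeping the category scores alongside the overall value lets an executive dashboard show the headline number while still letting teams drill down to the category that moved it.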

Engineering-specific metrics to consider:

| Metric Category | Example Metrics | Implementation Method |
|---|---|---|
| Model Robustness | Adversarial accuracy, perturbation sensitivity | FGSM attacks, boundary testing |
| Inference Performance | p95 latency, throughput under load | Distributed load testing with Locust |
| Drift Detection | KL divergence, PSI scores | Statistical monitoring with Evidently AI |
| Resource Utilization | GPU memory efficiency, token optimization | Profiling with PyTorch Profiler |
| Reliability | Error rates, recovery time | Chaos engineering with fault injection |

These metrics can be implemented using open-source frameworks like MLflow, Weights & Biases, and custom monitoring solutions built on Prometheus and Grafana.
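For the drift-detection row in the table, the Population Stability Index (PSI) compares the binned distribution of a feature or model score in production against a reference window: PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the reference (expected) and current (actual) proportions in bin i. The plain-Python sketch below bins on reference quantiles; the bin count, the small epsilon for empty bins, and the commonly cited rule of thumb (below 0.1 stable, above 0.25 a significant shift) are conventions rather than fixed standards, and tools such as Evidently AI provide production-grade versions.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def quantile_edges(reference: Sequence[float], bins: int) -> List[float]:
    """Interior bin edges at reference quantiles, so each bin holds about 1/bins of the reference."""
    ordered = sorted(reference)
    return [ordered[int(len(ordered) * k / bins)] for k in range(1, bins)]


def proportions(values: Sequence[float], edges: List[float], eps: float = 1e-6) -> List[float]:
    """Fraction of values per bin; eps keeps empty bins from producing log(0)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(reference: Sequence[float], current: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index of `current` relative to `reference`."""
    edges = quantile_edges(reference, bins)
    expected = proportions(reference, edges)
    actual = proportions(current, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


if __name__ == "__main__":
    # Hypothetical model scores: a uniform reference window and a production window shifted upward.
    reference = [i / 1000 for i in range(1000)]
    shifted = [0.5 + i / 2000 for i in range(1000)]
    print(f"PSI vs. itself: {psi(reference, reference):.4f}")
    print(f"PSI vs. shifted: {psi(reference, shifted):.2f}")
```

Quantile binning keeps every reference bin equally populated, so a large PSI points to a genuine change in where production values fall rather than to sparse bins.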

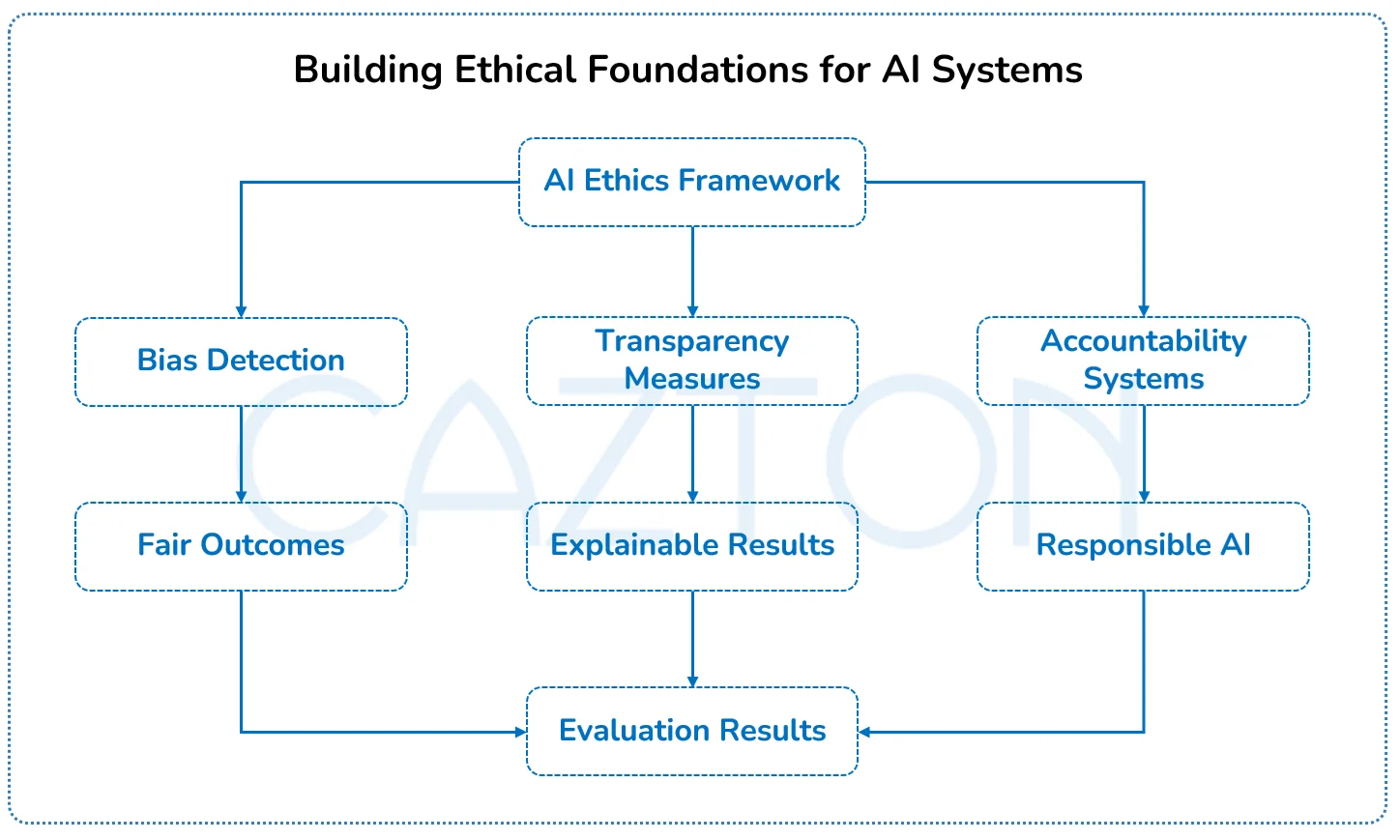

We Remove the Guesswork from AI Compliance

As AI becomes more prevalent in critical business functions, regulatory scrutiny continues to increase. Your evaluation framework must incorporate compliance and ethical considerations to protect your organization from regulatory penalties and reputational damage while demonstrating responsible AI practices to regulators, customers, and stakeholders.

We help you establish evaluation criteria that assess fairness, transparency, and accountability in your AI systems. This includes bias detection, explainability assessments, and compliance validation against industry standards.

Key regulatory and ethical evaluation areas include:

- Data privacy compliance (GDPR, CCPA, HIPAA)

- Model transparency and explainability

- Bias detection and mitigation

- Security vulnerability assessment

- Appropriate human oversight mechanisms

By integrating these considerations into your evaluation framework, you transform compliance from a cost center into a competitive advantage, building trust with customers and regulators while reducing organizational risk.

Technical Implementation for Compliance Evaluation:

Our compliance evaluation frameworks include:

- Automated fairness testing using techniques like Counterfactual Fairness and Equal Opportunity Difference

- Model interpretability analysis using SHAP values and integrated gradients

- Privacy risk quantification through membership inference attack simulations

- Formal verification of model properties using SMT solvers where applicable

- Automated documentation generation for regulatory submissions

These techniques transform abstract compliance requirements into concrete, testable properties that engineering teams can systematically verify.
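Equal Opportunity Difference, one of the fairness checks listed above, is the gap in true positive rate between a protected group and a reference group, computed only over examples whose true label is positive. A value near zero means qualified members of both groups receive positive decisions at similar rates. The sketch below computes it from labels, predictions, and a group attribute; the group names, the sign convention (protected minus reference), and the 0.1 tolerance in the usage example are illustrative assumptions, not regulatory thresholds.

```python
from typing import List, Sequence, Tuple


def true_positive_rate(labels: Sequence[int], preds: Sequence[int]) -> float:
    """TPR = TP / (TP + FN): share of truly positive examples predicted positive."""
    positives = [p for y, p in zip(labels, preds) if y == 1]
    if not positives:
        raise ValueError("no positive examples in this group")
    return sum(positives) / len(positives)


def equal_opportunity_difference(labels: Sequence[int], preds: Sequence[int],
                                 groups: Sequence[str], protected: str, reference: str) -> float:
    """TPR(protected group) - TPR(reference group); 0 means equal opportunity."""
    def subset(group: str) -> Tuple[List[int], List[int]]:
        idx = [i for i, g in enumerate(groups) if g == group]
        return [labels[i] for i in idx], [preds[i] for i in idx]

    return true_positive_rate(*subset(protected)) - true_positive_rate(*subset(reference))


if __name__ == "__main__":
    # Hypothetical decisions for ten applicants in two groups (1 = qualified / approved).
    labels = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
    preds = [1, 1, 1, 0, 0, 1, 0, 0, 1, 1]
    groups = ["A"] * 5 + ["B"] * 5
    eod = equal_opportunity_difference(labels, preds, groups, protected="B", reference="A")
    tolerance = 0.1  # illustrative policy threshold
    print(f"EOD = {eod:+.2f}, within tolerance: {abs(eod) <= tolerance}")
```

In this toy data, qualified members of group B are approved 50% of the time versus 75% for group A, giving an EOD of -0.25, which a test suite would flag for review.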

What Your Board Needs to See from Your AI Strategy

AI has evolved from a technical experiment to a strategic imperative demanding C-suite attention. Organizations that treat AI as a peripheral initiative risk falling behind competitors who recognize its transformative potential for driving growth, operational excellence, and market differentiation.

Board-level oversight is now essential. Directors expect leadership teams to demonstrate measurable progress beyond pilot programs, supported by robust governance frameworks and clear strategic vision. To meet those expectations, your AI evaluation framework must produce concise, executive-ready dashboards that cover six critical dimensions:

- Strategic impact: How AI initiatives strengthen market differentiation and support long-term business goals.

- Financial performance: Clear, quantifiable links between AI investments and revenue growth, margin improvement, or cost savings.

- Risk management: Identification and mitigation of emerging AI risks such as legal, ethical, operational, and reputational challenges.

- Competitive benchmarking: How your AI capabilities compare against industry peers and disruptors.

- Talent strategy: Plans to attract, develop, and retain AI talent across functions.

- Future readiness: Your organization’s ability to scale AI solutions and respond to new opportunities with agility.

Elevating AI evaluation to the boardroom creates accountability for both innovation and responsible implementation. This approach ensures AI delivers measurable business value while maintaining ethical standards and sustainable practices that protect long-term organizational success.

The question is no longer whether to invest in AI, but how quickly and effectively your organization can realize its potential while managing its risks.

Strengthening Accountability Through Governance and Ownership

Effective AI evaluation depends on clear ownership, structured governance, and consistent accountability. Without defined roles and responsibilities, evaluation efforts risk becoming an afterthought rather than a core part of your AI development process.

We help organizations build governance frameworks that embed evaluation throughout the AI lifecycle:

- Forming cross-functional evaluation teams that combine technical and business perspectives

- Defining specific roles and responsibilities for each phase of evaluation

- Establishing standardized protocols to ensure consistency and objectivity

- Implementing regular review cycles to assess performance and impact

- Creating remediation processes to address issues and drive continuous improvement

With the right governance in place, evaluation moves from a reactive task to a proactive discipline that shapes strategy, guides development, and strengthens trust in AI outcomes.

Case Studies

Financial Services

- Problem: A financial institution implemented AI-powered fraud detection but struggled to determine if the system was actually reducing fraud while maintaining customer experience. The organization lacked visibility into false positive rates, customer friction points, and the correlation between AI alerts and actual fraud prevention.

- Solution: We implemented a comprehensive evaluation framework that measured both technical accuracy and business outcomes. Our approach included designing custom metrics that tracked customer journey impact, establishing baseline comparisons for fraud detection effectiveness, and creating real-time monitoring dashboards that provided actionable insights to both technical teams and business stakeholders.

- Business impact: The evaluation revealed specific transaction types where the AI system underperformed, allowing for targeted improvements that balanced security and customer experience. The organization gained confidence in their fraud detection capabilities while reducing customer friction during legitimate transactions.

- Tech stack: TensorFlow, PyTorch, Weights & Biases, MLflow, custom evaluation frameworks, React, Java, LangChain, PostgreSQL, Apache Kafka, OpenAI, Elasticsearch, AWS, Docker and Kubernetes.

Healthcare

- Problem: A healthcare provider deployed an AI system for clinical decision support but lacked visibility into how clinicians were using the recommendations and whether patient outcomes improved. The organization needed to understand the correlation between AI suggestions and clinical effectiveness while ensuring regulatory compliance.

- Solution: We developed a multi-faceted evaluation approach that combined technical performance metrics with clinician feedback and patient outcome tracking. Our framework included creating specialized evaluation protocols for clinical environments, establishing feedback loops with medical professionals, and developing compliance-ready reporting systems that met healthcare regulatory requirements.

- Business impact: The evaluation identified specific clinical scenarios where AI recommendations were most valuable, allowing for focused improvement and training. Healthcare professionals gained confidence in the AI system while maintaining clinical autonomy and improving patient care quality.

- Tech stack: PyTorch, FHIR integration, custom healthcare metrics, clinical validation frameworks, Angular, .NET, Semantic Kernel, MongoDB, Microsoft Fabric, Azure OpenAI, Azure AI Search, Azure, AKS (Azure Kubernetes Service).

Manufacturing

- Problem: A manufacturer implemented AI for predictive maintenance but could not determine if maintenance costs were actually being reduced or if critical failures were being prevented. The organization needed clear visibility into the complete value chain from AI predictions to maintenance actions and outcomes.

- Solution: We created an evaluation framework that connected AI predictions to maintenance actions and outcomes, providing visibility into the complete value chain. Our approach included developing real-time monitoring systems, establishing correlation tracking between predictions and maintenance events, and creating comprehensive reporting mechanisms that demonstrated value to operations teams.

- Business impact: The evaluation revealed that certain equipment types showed significant maintenance cost reduction while others did not, enabling targeted refinement. The organization optimized their maintenance strategy based on data-driven insights rather than assumptions.

- Tech stack: TensorFlow, time-series analysis tools, industrial IoT integration, custom evaluation metrics, Java, SQL Server, Apache Spark, AI Agents, Apache Solr, GCP and Microservices.

Retail

- Problem: A retailer deployed AI-powered product recommendations but could not determine which recommendation algorithms actually increased customer lifetime value versus just shifting purchases. The organization needed to understand the long-term impact of AI recommendations on customer behavior and business outcomes.

- Solution: We implemented an evaluation system that tracked not just immediate conversion but long-term customer behavior changes resulting from AI recommendations. Our framework included developing customer journey analytics, establishing control groups for comparison, and creating comprehensive measurement systems that connected AI interactions to business metrics.

- Business impact: The evaluation identified specific product categories where recommendations significantly influenced customer loyalty and spending patterns. The organization gained insights into which AI approaches delivered genuine business value versus surface-level engagement metrics.

- Tech stack: PyTorch, A/B testing frameworks, customer analytics platforms, recommendation evaluation metrics, React Native, .NET, AutoGen, Azure Cosmos DB, Databricks, MCP, Elasticsearch, Snowflake, DevOps, Microservices, Terraform, Docker and Kubernetes.

Insurance

- Problem: An insurance company implemented AI for claims processing but lacked visibility into whether the system was making consistent decisions aligned with company policies. The organization needed assurance that AI decisions met regulatory requirements while maintaining processing efficiency.

- Solution: We developed an evaluation framework that assessed both technical accuracy and business policy alignment, with special attention to edge cases and regulatory compliance. Our approach included creating policy alignment verification systems, establishing audit trails for AI decisions, and developing comprehensive testing protocols that covered diverse claim scenarios.

- Business impact: The evaluation uncovered specific claim scenarios where the AI system made inconsistent decisions, allowing for targeted improvement. The organization achieved greater confidence in their automated claims processing while maintaining regulatory compliance and customer satisfaction.

- Tech stack: TensorFlow, NLP frameworks, document processing tools, compliance validation systems, ASP.NET Core, .NET, Semantic Kernel, Cassandra, Apache Kafka, Voice AI, Azure AI Search, Azure, Docker.

From Evaluation to Action

Evaluation without action creates reports that gather dust. The true value of AI evaluation comes from translating insights into systematic improvements that strengthen your AI systems.

We help organizations establish action-oriented evaluation cycles that automatically trigger improvement processes when performance thresholds are not met. This systematic approach transforms assessment results into operational improvements through continuous feedback loops.

Your action framework should include:

- Prioritization system that ranks findings by business impact, resource requirements, and strategic alignment

- Clear ownership with specific leaders accountable for each improvement initiative

- Resource allocation ensuring teams have what they need rather than treating improvements as side projects

- Follow-up evaluation to verify that changes actually address identified issues

- Knowledge sharing mechanisms that spread insights across teams to prevent recurring problems

By establishing this approach, you transform evaluation from a compliance exercise into a continuous improvement engine that drives real operational value.
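The threshold-triggered cycle and prioritization system described above can be sketched as a small routine that compares the latest evaluation metrics with agreed thresholds and turns each breach into a finding with an accountable owner, ranked by business impact times strategic alignment divided by effort. The metric names, thresholds, 1 to 5 scores, owners, and the ranking formula itself are hypothetical; real teams would substitute their own scoring rubric.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Threshold:
    minimum: float   # breach when the metric falls below this value
    impact: int      # business impact if breached, 1 (low) to 5 (high)
    effort: int      # estimated resources needed to fix, 1 (low) to 5 (high)
    alignment: int   # strategic alignment, 1 (low) to 5 (high)
    owner: str       # leader accountable for the improvement


@dataclass
class Finding:
    metric: str
    value: float
    owner: str
    priority: float


def triage(metrics: Dict[str, float], thresholds: Dict[str, Threshold]) -> List[Finding]:
    """Return breached metrics as findings, highest priority first."""
    findings = []
    for name, rule in thresholds.items():
        value = metrics.get(name)
        if value is None or value >= rule.minimum:
            continue  # missing metrics are skipped here; a stricter policy could flag them
        priority = rule.impact * rule.alignment / rule.effort
        findings.append(Finding(name, value, rule.owner, priority))
    return sorted(findings, key=lambda f: f.priority, reverse=True)


if __name__ == "__main__":
    # Hypothetical thresholds and latest evaluation results.
    thresholds = {
        "fraud_recall": Threshold(0.85, impact=5, effort=3, alignment=5, owner="Head of Risk"),
        "answer_faithfulness": Threshold(0.90, impact=3, effort=1, alignment=4, owner="Product Lead"),
        "latency_score": Threshold(0.80, impact=2, effort=2, alignment=2, owner="Platform Lead"),
    }
    latest = {"fraud_recall": 0.81, "answer_faithfulness": 0.87, "latency_score": 0.92}
    for f in triage(latest, thresholds):
        print(f"{f.priority:5.2f}  {f.metric} = {f.value:.2f} -> {f.owner}")
```

Because the routine returns owners alongside priorities, its output can feed directly into the follow-up evaluation step: the same thresholds are re-checked after each fix.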

Essential Questions for AI Evaluation

As a senior leader, the questions you ask drive how seriously your teams take AI evaluation. These strategic questions help you assess whether your teams are building robust evaluation practices that prevent issues from becoming business problems.

- How do our evaluation metrics connect to strategic business objectives?

- What metrics provide the clearest indication of AI system effectiveness in our context?

- What unexpected behaviors or edge cases has our evaluation process uncovered?

- How quickly can we identify and address performance issues when they arise?

- How are we evaluating fairness and bias in our AI systems?

- What competitive benchmarking have we done to understand our AI performance?

- How do we incorporate user feedback into our evaluation process?

- What governance mechanisms ensure evaluation findings lead to improvements?

- How do we evaluate AI systems throughout their lifecycle, not just at deployment?

- What regulatory requirements are addressed in our evaluation framework?

- How effectively can we explain AI performance to non-technical stakeholders?

- How will our evaluation framework adapt as AI technologies and business needs evolve?

Regular use of these questions in your leadership reviews signals that evaluation is a strategic priority and helps build the discipline needed for successful AI deployment at scale.

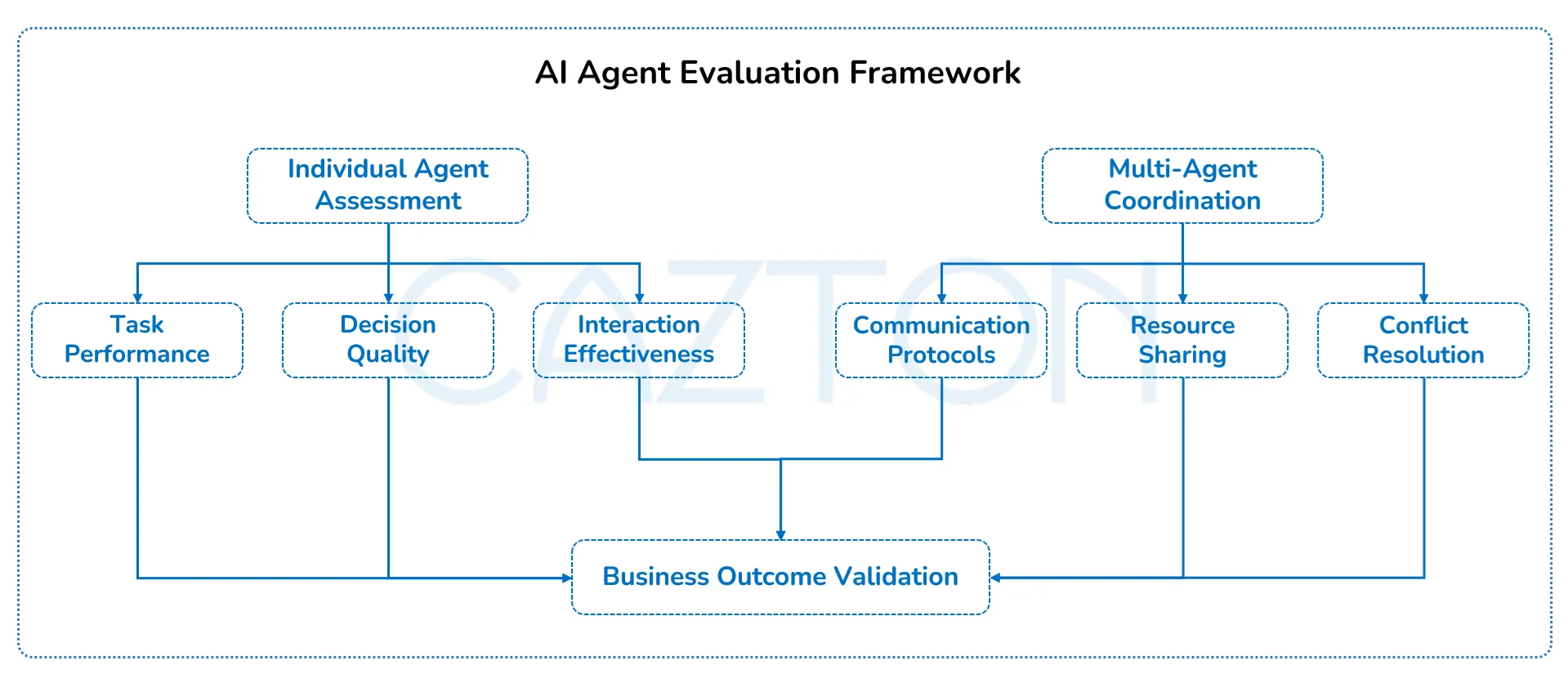

How You Can Evaluate and Trust Your AI Agent

AI agents require specialized evaluation approaches that assess both individual task performance and overall system behavior. Unlike traditional AI models that process specific inputs and generate outputs, agents operate autonomously, make decisions, and interact with multiple systems and users over time. Your evaluation framework should examine agent decision-making processes, interaction quality, adaptation capabilities, and long-term behavioral patterns to ensure consistent alignment with business objectives.

We develop comprehensive agent evaluation protocols that test performance across diverse scenarios, ensuring your AI agents maintain effectiveness as they encounter new situations and requirements. Our methodologies address the unique challenges of autonomous systems, including unpredictable interactions, emergent behaviors, and the need for ongoing trust verification in dynamic environments.
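One common way to evaluate agent decision-making, and not just final answers, is to compare the sequence of tool calls an agent made against a reference trajectory for each test scenario. The sketch below scores each scenario on three things: whether the final outcome matched, whether the required tool calls appeared in the expected order (extra steps allowed), and how many extra steps were taken. The scenario format and tool names are hypothetical assumptions, not the API of any particular agent framework.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Scenario:
    name: str
    expected_tools: List[str]   # required tool calls, in order
    expected_outcome: str


@dataclass
class AgentRun:
    tools_called: List[str]
    outcome: str


def in_order(required: List[str], actual: List[str]) -> bool:
    """True if `required` appears in `actual` as an ordered subsequence (gaps allowed)."""
    remaining = iter(actual)
    # `step in remaining` consumes the iterator up to the match, which enforces ordering.
    return all(step in remaining for step in required)


def score(scenario: Scenario, run: AgentRun) -> Dict[str, object]:
    return {
        "scenario": scenario.name,
        "outcome_ok": run.outcome == scenario.expected_outcome,
        "trajectory_ok": in_order(scenario.expected_tools, run.tools_called),
        "extra_steps": max(0, len(run.tools_called) - len(scenario.expected_tools)),
    }


if __name__ == "__main__":
    # Hypothetical scenario and two recorded agent runs.
    refund = Scenario("refund_request", ["lookup_order", "check_policy", "issue_refund"], "refunded")
    good = AgentRun(["lookup_order", "search_faq", "check_policy", "issue_refund"], "refunded")
    risky = AgentRun(["issue_refund", "lookup_order"], "refunded")
    print(score(refund, good))
    print(score(refund, risky))
```

The second run reaches the right outcome but skips the policy check, which is exactly the kind of process failure that outcome-only evaluation would miss.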

Multi-Agent System Evaluation

When multiple AI agents work together, evaluation complexity rises sharply, because every additional agent introduces new interactions to test. Multi-agent systems require assessment of individual agent performance, inter-agent communication effectiveness, collaborative task completion, and emergent system behaviors that arise from agent interactions.

Our evaluation frameworks for multi-agent systems examine coordination protocols, resource sharing efficiency, conflict resolution mechanisms, and collective decision-making processes. We establish monitoring systems that track how agents negotiate, delegate tasks, and maintain system-wide objectives while managing competing priorities and resource constraints.

Third-Party Agent Integration

Enterprise environments often require your AI agents to interact with external systems and third-party agents developed by other organizations. These interactions introduce additional evaluation challenges, including protocol compatibility, trust verification, and performance consistency across different agent architectures.

Our evaluation frameworks address these complexities by establishing standardized communication protocols, implementing security verification systems, and creating monitoring capabilities that track cross-platform interactions. We help you evaluate how your agents maintain performance and security standards when collaborating with external systems, ensuring that third-party integrations enhance rather than compromise your AI ecosystem's effectiveness and reliability.
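A basic building block for evaluating third-party agent interactions is to check every inbound message against the agreed protocol contract and record violations, so compatibility can be measured as a rate over time rather than discovered during incidents. The sketch below uses a hypothetical contract expressed as required field names, Python types, and a few value rules; real deployments would more likely use JSON Schema or the schema mechanism of whichever agent protocol is in use.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical contract for messages received from an external agent.
CONTRACT: Dict[str, type] = {
    "agent_id": str,
    "task_id": str,
    "status": str,
    "confidence": float,
}
ALLOWED_STATUS = {"accepted", "completed", "failed"}


def violations(message: Dict[str, Any]) -> List[str]:
    """List every way a message breaks the contract (an empty list means compliant)."""
    problems = []
    for field, expected_type in CONTRACT.items():
        if field not in message:
            problems.append(f"missing field: {field}")
        elif not isinstance(message[field], expected_type):
            problems.append(f"{field}: expected {expected_type.__name__}, "
                            f"got {type(message[field]).__name__}")
    status = message.get("status")
    if isinstance(status, str) and status not in ALLOWED_STATUS:
        problems.append(f"status: unexpected value {status!r}")
    confidence = message.get("confidence")
    if isinstance(confidence, float) and not 0.0 <= confidence <= 1.0:
        problems.append("confidence: outside [0, 1]")
    return problems


def compliance_rate(messages: List[Dict[str, Any]]) -> Tuple[float, List[List[str]]]:
    """Share of messages with no violations, plus per-message violation lists for auditing."""
    if not messages:
        raise ValueError("no messages to evaluate")
    reports = [violations(m) for m in messages]
    return sum(1 for r in reports if not r) / len(messages), reports


if __name__ == "__main__":
    # Hypothetical messages from a third-party agent.
    inbound = [
        {"agent_id": "vendor-bot", "task_id": "t1", "status": "completed", "confidence": 0.92},
        {"agent_id": "vendor-bot", "task_id": "t2", "status": "done", "confidence": 0.7},
        {"agent_id": "vendor-bot", "status": "failed", "confidence": 1.4},
    ]
    rate, reports = compliance_rate(inbound)
    print(f"compliance rate: {rate:.0%}")
    for report in reports:
        print(report)
```

Tracking the compliance rate per partner agent over time turns protocol compatibility into a monitored metric rather than a one-time integration check.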

Key considerations for evaluating AI agents include:

- Alignment evaluation:

  - Implementation: Reinforcement Learning from Human Feedback (RLHF) with custom reward models

  - Metrics: KL divergence from reference policy, reward model confidence

- Boundary testing:

  - Implementation: Adversarial prompt generation using genetic algorithms

  - Tooling: Custom fuzzing frameworks that systematically explore edge cases

- Interaction quality:

  - Implementation: Automated conversation analysis using discourse structure parsing

  - Metrics: Explanation completeness score, clarification request frequency

- Failure modes:

  - Implementation: Chaos engineering for LLMs with targeted prompt injections

  - Monitoring: Anomaly detection on embedding space distributions

- Control mechanisms:

  - Implementation: Constitutional AI techniques with runtime guardrails

  - Verification: Formal verification of safety properties where possible

- Performance monitoring:

  - Implementation: Distributed tracing across the agent execution path

  - Dashboarding: Real-time latency and throughput metrics with alerting

- Integration compliance:

  - Implementation: Automated security scanning of agent outputs

  - Testing: Continuous integration tests for API contract adherence
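To illustrate the anomaly-detection item under failure modes, the sketch below flags agent inputs or outputs whose embeddings sit unusually far from a reference set of known-good interactions. It measures cosine distance to the reference centroid and flags anything beyond the reference mean distance plus k standard deviations. The tiny 3-dimensional vectors stand in for real embedding-model output, and the centroid-distance method and k = 3 are simple assumptions; density- or clustering-based detectors are common alternatives.

```python
import math
from statistics import mean, pstdev
from typing import List, Sequence

Vector = Sequence[float]


def cosine_distance(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def centroid(vectors: List[Vector]) -> List[float]:
    return [sum(column) / len(vectors) for column in zip(*vectors)]


class EmbeddingAnomalyDetector:
    """Flags embeddings that are far from the centroid of a reference (known-good) set."""

    def __init__(self, reference: List[Vector], k: float = 3.0):
        self.center = centroid(reference)
        distances = [cosine_distance(v, self.center) for v in reference]
        self.threshold = mean(distances) + k * pstdev(distances)

    def is_anomalous(self, vector: Vector) -> bool:
        return cosine_distance(vector, self.center) > self.threshold


if __name__ == "__main__":
    # Hypothetical low-dimensional stand-ins for embeddings of normal agent traffic.
    reference = [[1.0, 0.1, 0.0], [0.9, 0.2, 0.1], [1.0, 0.0, 0.1], [0.95, 0.15, 0.05]]
    detector = EmbeddingAnomalyDetector(reference)
    print(detector.is_anomalous([0.97, 0.12, 0.05]))  # close to normal traffic
    print(detector.is_anomalous([0.0, 0.1, 1.0]))     # e.g., an injected off-topic instruction
```

The threshold is learned from the reference set itself, so it should be recomputed whenever the definition of normal traffic changes, for example after a new agent capability ships.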

Working with us provides access to evaluation expertise developed across multiple industries and use cases. You benefit from proven frameworks, best practices, and methodologies that have delivered measurable results for organizations facing similar challenges.

Our approach combines technical expertise with business acumen, ensuring that evaluation efforts directly support your strategic objectives while maintaining operational excellence.

What You Gain from Our AI Evaluation Services

We provide end-to-end AI evaluation services that connect technical performance to business outcomes.

Our services include:

- Evaluation strategy that develops customized frameworks aligned with your business objectives and industry requirements.

- Implementation support for building evaluation pipelines that integrate with your existing AI development processes.

- Continuous monitoring systems that track AI performance throughout the system lifecycle.

- Regulatory compliance ensuring your AI systems meet current and emerging requirements through specialized evaluation protocols.

- Improvement roadmaps that translate evaluation insights into prioritized action plans with clear ownership and timelines.

Our team brings together PhD-level AI expertise with industry experience across multiple sectors, ensuring that your evaluation framework addresses both technical excellence and business relevance.

Preparing for the Future of AI Evaluation

AI evaluation is evolving rapidly as systems become more complex and autonomous. Your organization needs evaluation frameworks that adapt to emerging AI capabilities while maintaining reliable assessment standards.

Building future-ready evaluation systems requires infrastructure that can accommodate new AI technologies, changing business requirements, and evolving regulatory landscapes. Organizations must establish evaluation frameworks that scale with their AI initiatives and adapt to emerging challenges.

Forward-thinking organizations are already preparing for emerging evaluation challenges:

- Multi-agent system evaluation: assessing emergent behaviors and system-level outcomes as AI systems interact with each other.

- Continuous learning evaluation: tracking drift in systems that adapt over time and ensuring they remain aligned with their objectives.

- Human-AI collaboration metrics: measuring how effectively humans and AI work together, beyond isolated system performance.

- Value alignment at scale: verifying alignment with organizational values across thousands of automated decisions.

- Ecosystem impact assessment: considering how AI systems affect broader business and social environments.
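
Tracking drift in continuously learning systems usually starts by comparing the distribution of a model's inputs or scores at deployment time with what it sees in production. Here is a minimal sketch, assuming the Population Stability Index (PSI) with equal-width bins as the drift measure. PSI is one common choice among several, and the function name and sample data are illustrative.

```python
import math
from typing import List, Sequence


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI between a reference sample and a live sample of one feature or score."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # guard against a constant feature (zero range)

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # clamp the maximum into the last bin
            counts[idx] += 1
        n = len(values)
        return [max(c / n, eps) for c in counts]  # eps avoids log(0) for empty bins

    p = proportions(expected)
    q = proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


reference = [i / 100 for i in range(100)]             # scores captured at deployment
live_same = [i / 100 for i in range(100)]             # production scores, unchanged
live_shifted = [0.5 + i / 200 for i in range(100)]    # production scores drifted upward

print(round(population_stability_index(reference, live_same), 4))     # 0.0
print(round(population_stability_index(reference, live_shifted), 2))  # large value: drift
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift worth investigating, but thresholds should be calibrated for each system and feature.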

By anticipating these trends, you can build evaluation capabilities that address today's AI systems while preparing your organization for the next generation of AI technologies. We help organizations establish evaluation frameworks that scale with their AI initiatives and adapt to these emerging challenges.
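
For human-AI collaboration metrics, a basic building block is how often AI decisions agree with human reviewers beyond what chance alone would produce. The sketch below uses Cohen's kappa for that purpose. The labels are hypothetical, and kappa is only one of many possible collaboration metrics.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Agreement between two raters on the same items, corrected for chance agreement."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same, non-empty set of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability that both raters pick the same label independently.
    expected = sum((counts_a[label] / n) * (counts_b[label] / n) for label in counts_a)
    if expected == 1.0:
        return 1.0  # both raters always use the same single label, so agreement is trivial
    return (observed - expected) / (1 - expected)


# Hypothetical decisions on six cases from a human reviewer and an AI system.
human = ["approve", "approve", "reject", "reject", "approve", "reject"]
ai = ["approve", "reject", "reject", "reject", "approve", "approve"]
print(round(cohens_kappa(human, ai), 3))  # 0.333
```

Raw agreement here is about 67%, but a kappa of roughly 0.33 shows that much of it is what two raters splitting labels evenly would reach by chance (50%).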

Emerging Technical Evaluation Methodologies:

- Mechanistic interpretability: Going beyond feature importance to understand how models actually process information internally
  - Implementation: Circuit analysis, activation engineering, and causal tracing

- Multi-objective evaluation: Balancing competing priorities through Pareto frontier analysis
  - Implementation: Multi-objective optimization algorithms with custom trade-off visualizations

- Synthetic data evaluation: Creating controlled test environments with programmatically generated data
  - Implementation: Generative models that produce edge cases and counterfactuals

- Evaluation-as-Code: Versioned, reproducible evaluation protocols that evolve alongside models
  - Implementation: Declarative evaluation specifications with GitOps workflows

- Collective intelligence evaluation: Measuring how effectively AI systems augment human capabilities
  - Implementation: Human-in-the-loop evaluation frameworks with structured feedback mechanisms
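
Pareto frontier analysis, mentioned in the multi-objective evaluation item above, keeps only the candidates that no other candidate beats on every objective at once. This minimal sketch computes that non-dominated set for a few hypothetical model configurations. The metric names and numbers are illustrative, and the brute-force check is suited to dozens of candidates rather than serving as a full multi-objective optimizer.

```python
from typing import Dict, List

Scores = Dict[str, float]


def dominates(a: Scores, b: Scores, maximize: List[str], minimize: List[str]) -> bool:
    """True if `a` is at least as good as `b` on every objective and strictly better on one."""
    no_worse = all(a[m] >= b[m] for m in maximize) and all(a[m] <= b[m] for m in minimize)
    better = any(a[m] > b[m] for m in maximize) or any(a[m] < b[m] for m in minimize)
    return no_worse and better


def pareto_frontier(candidates: Dict[str, Scores],
                    maximize: List[str], minimize: List[str]) -> List[str]:
    """Names of candidates that no other candidate dominates (brute force, O(n^2))."""
    return [
        name for name, scores in candidates.items()
        if not any(dominates(other, scores, maximize, minimize)
                   for other_name, other in candidates.items() if other_name != name)
    ]


# Hypothetical evaluation results for four model configurations.
models = {
    "large": {"accuracy": 0.92, "latency_ms": 850, "cost_per_1k": 12.0},
    "medium": {"accuracy": 0.89, "latency_ms": 300, "cost_per_1k": 3.0},
    "small": {"accuracy": 0.81, "latency_ms": 120, "cost_per_1k": 0.5},
    "legacy": {"accuracy": 0.85, "latency_ms": 400, "cost_per_1k": 4.0},
}
print(pareto_frontier(models, maximize=["accuracy"], minimize=["latency_ms", "cost_per_1k"]))
# ['large', 'medium', 'small']
```

`legacy` drops out because `medium` is better on all three objectives. Choosing among the remaining three then becomes an explicit business trade-off rather than a single-metric ranking.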

Our research team continually incorporates these emerging methodologies into our evaluation frameworks, ensuring your organization stays at the forefront of AI assessment capabilities.
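
The synthetic data evaluation item above describes generating edge cases and counterfactuals programmatically. The sketch below substitutes a simple rule-based generator, a grid of boundary values around a seed record, for the generative models mentioned there. It then runs a counterfactual invariance check: a decision should not change when only a sensitive attribute is swapped. Both toy models, the field names, and the values are hypothetical.

```python
from itertools import product
from typing import Any, Callable, Dict, List

Applicant = Dict[str, Any]


def generate_grid(base: Applicant, variations: Dict[str, List[Any]]) -> List[Applicant]:
    """Expand one seed record into every combination of the given edge-case values."""
    keys = list(variations)
    cases = []
    for combo in product(*(variations[k] for k in keys)):
        case = dict(base)
        case.update(zip(keys, combo))
        cases.append(case)
    return cases


def counterfactual_violations(model: Callable[[Applicant], bool], cases: List[Applicant],
                              attribute: str, values: List[Any]) -> List[Applicant]:
    """Cases whose decision changes when only `attribute` is swapped among `values`."""
    violations = []
    for case in cases:
        decisions = {model({**case, attribute: v}) for v in values}
        if len(decisions) > 1:
            violations.append(case)
    return violations


def affordability_model(a: Applicant) -> bool:
    # Hypothetical model: approve when income is at least three times the loan.
    return a["income"] >= 3 * a["loan"]


def leaky_model(a: Applicant) -> bool:
    # Hypothetical flawed model that also keys on the sensitive attribute.
    return a["income"] >= 3 * a["loan"] and a["region"] != "B"


seed = {"income": 60_000, "loan": 20_000, "region": "A"}
# Boundary values: zero, just below and exactly at the 3x threshold, and an extreme.
grid = generate_grid(seed, {"income": [0, 59_999, 60_000, 10**9], "loan": [1, 20_000]})

print(len(grid))  # 8
print(len(counterfactual_violations(affordability_model, grid, "region", ["A", "B"])))  # 0
print(len(counterfactual_violations(leaky_model, grid, "region", ["A", "B"])))  # 5
```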

How Cazton Transforms Your AI Evaluation Strategy

Many organizations approach AI evaluation as a technical checkbox rather than a strategic business function. This limited view creates significant blind spots:

- Focusing exclusively on accuracy metrics while ignoring business impact

- Conducting one-time evaluations rather than continuous monitoring

- Failing to connect evaluation insights to improvement processes

- Treating evaluation as an engineering concern rather than a business priority

- Missing regulatory and ethical dimensions of AI performance

For engineering teams, our approach provides:

- Reproducible evaluation protocols that eliminate subjective assessments

- Integration with CI/CD pipelines for automated quality gates

- Custom evaluation harnesses that simulate production environments

- Comprehensive test suites that cover both functional and non-functional requirements

- Detailed technical documentation of evaluation methodologies and implementation patterns
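
The CI/CD quality gates and reproducible protocols listed above can be combined in an Evaluation-as-Code style: thresholds live in a versioned, declarative spec, and the pipeline fails the build when an evaluation run violates them. Here is a minimal sketch, assuming a JSON spec format and metric names (`p95_latency_ms`, `demographic_parity_gap`) that are hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical declarative spec; in practice it would be a file committed alongside the model.
SPEC = json.loads("""
{
  "spec_version": "1.0.0",
  "thresholds": [
    {"metric": "accuracy", "minimum": 0.90},
    {"metric": "p95_latency_ms", "maximum": 500},
    {"metric": "demographic_parity_gap", "maximum": 0.05}
  ]
}
""")


@dataclass(frozen=True)
class Threshold:
    metric: str
    minimum: Optional[float] = None  # fail if the metric falls below this value
    maximum: Optional[float] = None  # fail if the metric rises above this value


def check_gate(metrics: Dict[str, float], thresholds: List[Threshold]) -> List[str]:
    """Return a readable failure message for each violated or missing threshold."""
    failures = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            failures.append(f"{t.metric}: missing from evaluation results")
        elif t.minimum is not None and value < t.minimum:
            failures.append(f"{t.metric}={value} below minimum {t.minimum}")
        elif t.maximum is not None and value > t.maximum:
            failures.append(f"{t.metric}={value} above maximum {t.maximum}")
    return failures


def main(metrics: Dict[str, float]) -> int:
    """Return a process exit code: 0 if the gate passes, 1 otherwise."""
    thresholds = [Threshold(**t) for t in SPEC["thresholds"]]
    failures = check_gate(metrics, thresholds)
    for failure in failures:
        print("FAIL", failure)
    return 1 if failures else 0


# Hypothetical results from one evaluation run; a real pipeline would load them from a file.
run = {"accuracy": 0.93, "p95_latency_ms": 620, "demographic_parity_gap": 0.03}
print("exit code:", main(run))
```

In a CI job, the script would end with `sys.exit(main(metrics))` so that a non-zero exit code blocks the merge or deployment. Because the spec is versioned with the model, every gate decision can be traced back to the exact thresholds in force at the time.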

This technical precision ensures that your AI systems meet both engineering excellence standards and business objectives.

Our team brings deep expertise in AI evaluation across industries, combining technical knowledge with business understanding to create assessment frameworks that drive real value. We partner with your organization to design, implement, and refine evaluation systems that provide the insights you need to make confident decisions about AI investments.

We help you avoid the pitfalls described above by implementing a comprehensive evaluation strategy that connects technical performance to business outcomes. Our collaborative approach ensures that evaluation frameworks reflect your specific requirements while incorporating industry best practices, transforming AI from a technology experiment into a reliable business asset that delivers consistent, measurable value.

Contact us today to learn how our AI evaluation expertise can help your organization maximize the return on AI investments while minimizing associated risks.

Cazton is composed of technical professionals with expertise gained all over the world and in all fields of the tech industry, and we put this expertise to work for you. We serve all industries, including banking, finance, legal services, life sciences & healthcare, technology, media, and the public sector. Check out some of our services:

- Artificial Intelligence

- Big Data

- Web Development

- Mobile Development

- Desktop Development

- API Development

- Database Development

- Cloud

- DevOps

- Enterprise Search

- Blockchain

- Enterprise Architecture

Cazton has expanded into a global company, servicing clients not only across the United States, but in Oslo, Norway; Stockholm, Sweden; London, England; Berlin, Germany; Frankfurt, Germany; Paris, France; Amsterdam, Netherlands; Brussels, Belgium; Rome, Italy; Sydney and Melbourne, Australia; and Quebec City, Toronto, Vancouver, Montreal, Ottawa, Calgary, Edmonton, Victoria, and Winnipeg, Canada. In the United States, we provide our consulting and training services across various cities like Austin, Dallas, Houston, New York, New Jersey, Irvine, Los Angeles, Denver, Boulder, Charlotte, Atlanta, Orlando, Miami, San Antonio, San Diego, San Francisco, San Jose, Stamford and others. Contact us today to learn more about what our experts can do for you.